AI voice agents have advanced from scripted assistants to real-time, multimodal, human-like agents that understand, adapt, and respond with empathy. In 2026, powered by GPT-4.5, Whisper, and next-gen models like OpenAI o1 and Gemini 1.5 Pro, voice agents are transforming how enterprises automate customer service, streamline workflows, and deliver hyper-personalized experiences.

This blog walks you through how to build an AI voice agent from scratch, covering essential components, the latest tech stack, deployment options, real-world use cases, and upcoming trends for 2026–2030.

Why Are AI Voice Agents Gaining Popularity in 2026?

- Accessibility First: Voice-driven systems are revolutionizing access for people with disabilities, bridging digital divides with speech-first interfaces.

- Enterprise Efficiency: 67% of enterprises now deploy AI voice agents for Tier 1 support, internal workflow automation, and customer self-service.

- Hardware Everywhere: Smart speakers, connected cars, AR/VR headsets, and IoT devices are embedding voice as the default control interface.

- Smarter AI Models: LLMs like GPT-4.5, Anthropic Claude 3.5, and Google Gemini, combined with OpenAI Whisper + ElevenLabs TTS, are enabling context-aware, multilingual, and emotionally intelligent conversations.

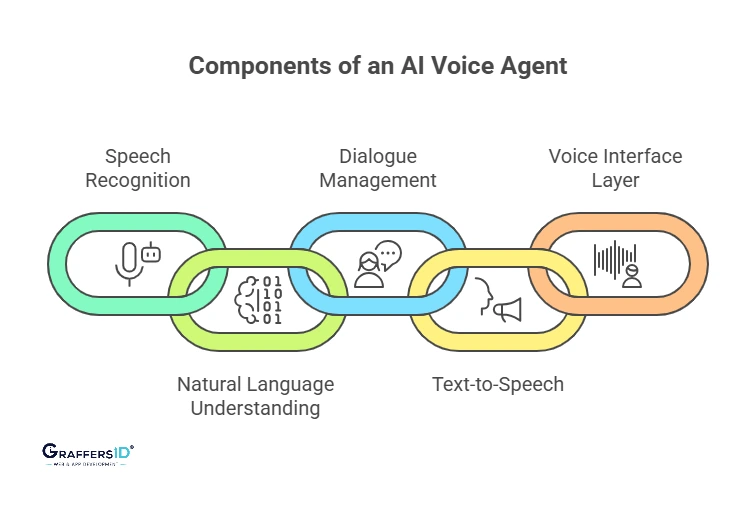

Key Components of an AI Voice Agent in 2026

To build a functional and intelligent voice agent, you need to combine several technologies. Here are the core components:

1. Speech Recognition (ASR)

Automatic Speech Recognition converts spoken language into text.

Top Automatic Speech Recognition (ASR) tools in 2026

- Whisper (OpenAI): An open-source, multilingual, and high-accuracy ASR.

- Google Speech-to-Text: Batch recognition and real-time streaming.

- NVIDIA Riva: Optimized for on-device ASR with low latency.

2. Natural Language Understanding (NLU)

NLU analyzes the transcribed text to determine the user's intent and extract relevant entities, such as dates, amounts, or locations.

Popular tools:

- OpenAI (GPT-4.5 or GPT-5): Prompt chaining and strong contextual awareness.

- Cohere RAG APIs: An LLM-based NLU optimized for business uses.

- Rasa NLU: Adaptable and open-source for intent-based systems.
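
To make "intent" and "entities" concrete, here is a minimal rule-based sketch. It is not how an LLM or Rasa performs NLU; the intent names, keyword lists, patterns, and the `parse_utterance` function are all hypothetical stand-ins for a trained model.

```python
import re
from dataclasses import dataclass, field

# Hypothetical keyword map; a trained model or LLM would replace this lookup.
INTENT_KEYWORDS = {
    "check_balance": ["balance", "how much money"],
    "book_appointment": ["book", "appointment", "schedule"],
}

# Tiny entity patterns, for illustration only.
TIME_PATTERN = re.compile(r"\b\d{1,2}(?::\d{2})?\s*(?:am|pm)\b", re.IGNORECASE)
DAY_PATTERN = re.compile(
    r"\b(?:today|tomorrow|monday|tuesday|wednesday|thursday|friday|saturday|sunday)\b",
    re.IGNORECASE,
)


@dataclass
class NluResult:
    intent: str
    entities: dict = field(default_factory=dict)


def parse_utterance(text: str) -> NluResult:
    """Return the first matching intent plus any time/day entities found."""
    lowered = text.lower()
    intent = "unknown"
    for name, keywords in INTENT_KEYWORDS.items():
        if any(keyword in lowered for keyword in keywords):
            intent = name
            break

    entities = {}
    time_match = TIME_PATTERN.search(text)
    if time_match:
        entities["time"] = time_match.group(0).lower()
    day_match = DAY_PATTERN.search(text)
    if day_match:
        entities["day"] = day_match.group(0).lower()
    return NluResult(intent, entities)


result = parse_utterance("Please book an appointment tomorrow at 3 pm")
print(result.intent, result.entities)
```

A keyword approach like this breaks down quickly on paraphrases, which is the gap the tools listed above are meant to fill.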

3. Dialogue Management

Manages logic and conversation flow. You can use:

- LangChain & LlamaIndex: Orchestrate LLM pipelines with memory.

- Hybrid Rule + LLM: For balance of flexibility and compliance.
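
One way to picture the hybrid rule + LLM approach is a small router: compliance-sensitive intents get a fixed, reviewed script, and everything else goes to the model. The sketch below assumes the intent has already been classified; `SCRIPTED_REPLIES`, `respond`, and `fake_llm` are hypothetical names, and `fake_llm` only stands in for a real model call.

```python
from typing import Callable

# Hypothetical scripted replies for intents where wording must not vary.
SCRIPTED_REPLIES = {
    "close_account": "For security, I will transfer you to a verified agent.",
    "dispute_charge": "I have opened a dispute request. A specialist will contact you.",
}


def respond(intent: str, user_text: str, llm: Callable[[str], str]) -> str:
    """Use a fixed script when one exists; otherwise defer to the LLM."""
    if intent in SCRIPTED_REPLIES:
        return SCRIPTED_REPLIES[intent]
    return llm(user_text)


def fake_llm(prompt: str) -> str:
    # Stand-in for a real model call so the sketch runs offline.
    return f"(generated reply to: {prompt})"


print(respond("close_account", "I want to close my account", fake_llm))
print(respond("small_talk", "How is your day?", fake_llm))
```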

4. Text-to-Speech (TTS)

Converts AI-generated text back into human-like speech.

Top Text-to-Speech (TTS) tools in 2026

- ElevenLabs: Hyper-realistic voice cloning.

- OpenAI Realtime API (2026): Ultra-low latency TTS for conversations.

- Microsoft Azure TTS: Customizable voices and intonations.

5. Voice Interface Layer

Delivers the final output through devices or apps.

Integration options:

- Twilio / WebRTC: For phone-based voice bots.

- Voiceflow / Alan AI: Low-code builders for apps and web.

- Custom SDKs: For embedded systems in IoT, automotive, or hardware devices.

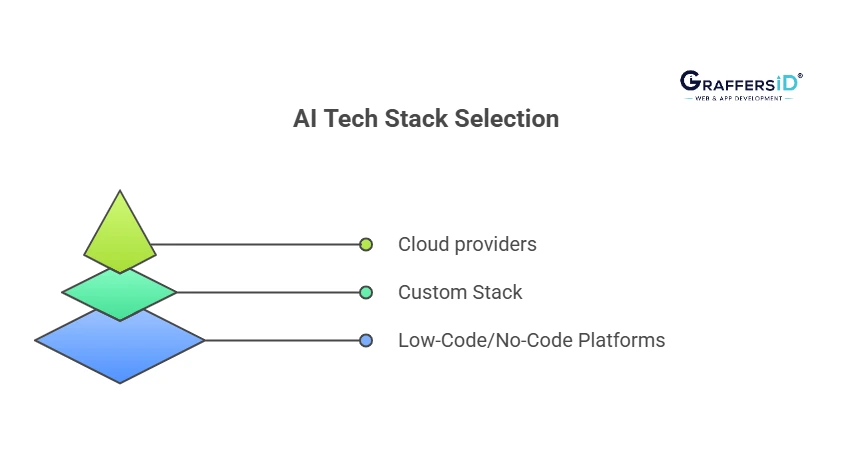

Choosing the Right Tech Stack for Building an AI Voice Agent in 2026

You can select from the following options based on your resources, use case, and scale:

1. Low-Code/No-Code Platforms

- Voiceflow: A low-code platform that allows teams to easily design, prototype, and implement conversational voice interfaces without deep technical expertise.

- Alan AI: Allows developers to easily integrate voice capabilities into web and mobile applications without much coding, making it suitable for rapid deployment.

2. Custom Stack for Enterprises

For businesses that require advanced functionality, flexibility, and control:

- ASR: For open-source, multilingual transcription, use Whisper; for scalable, cloud-native, high-availability speech processing, use Google Cloud Speech-to-Text.

- NLU: Use Cohere for enterprise-grade embeddings and faster response times, or GPT-4 Turbo for general conversational understanding.

- Dialogue Management: With LangChain, you can organize context-aware interactions, manage memory, and customize fallback logic and API triggers using dynamic pipelines.

- TTS: For extremely realistic voices with emotion and pitch control, use ElevenLabs; for enterprise integration and multilingual capabilities, use Azure TTS.

- Deployment: Use embedded SDKs for IoT or on-device apps with limited connectivity, AWS or Azure for scalable hosting, and Twilio for voice calls and IVR systems.
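
It can help to record the choice for each layer in one place so that a component can be swapped later without hunting through the codebase. The following is a minimal sketch of such a record; the `VoiceStack` class and the string labels are hypothetical and are not identifiers from any vendor SDK.

```python
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class VoiceStack:
    """One choice per layer of the custom stack described above."""
    asr: str
    nlu: str
    dialogue: str
    tts: str
    deployment: str


# Labels only; these strings are illustrative, not SDK or model identifiers.
ENTERPRISE_STACK = VoiceStack(
    asr="whisper",
    nlu="gpt-4-turbo",
    dialogue="langchain",
    tts="elevenlabs",
    deployment="twilio",
)


def describe(stack: VoiceStack) -> str:
    """Render the stack as a single readable line, in layer order."""
    return " -> ".join(f"{layer}={choice}" for layer, choice in asdict(stack).items())


print(describe(ENTERPRISE_STACK))
```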

3. Cloud Providers Offering Complete Suites

Choose these if you want a fully managed platform and a faster time to market:

- Google Dialogflow CX: A comprehensive platform from Google that offers multi-channel deployment features, dialogue management, and advanced NLU.

- AWS Lex: Amazon’s voice AI suite, which includes enterprise-grade chatbot and speech features, along with built-in integration with AWS services.

- Microsoft’s Azure Bot Framework: A platform for building, testing, and deploying chat and voice agents with deep integration into the Azure ecosystem.

How AI Voice Agents Work: 2026 Architecture

Here’s a simplified architecture of how voice agents function:

User (Voice Input)

↓

ASR (Speech-to-Text)

↓

NLU (Intent Recognition)

↓

LLM/Dialog Engine (Response Generation)

↓

TTS (Text-to-Speech)

↓

User (Voice Output)
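
The flow above can be sketched as a single function that passes the output of each stage to the next. This is a minimal illustration under stated assumptions: every stage is a plain callable, and the `stub_*` functions are hypothetical placeholders for real ASR, NLU, dialogue, and TTS services.

```python
from typing import Callable


def run_turn(
    audio: bytes,
    asr: Callable[[bytes], str],
    nlu: Callable[[str], str],
    dialog: Callable[[str, str], str],
    tts: Callable[[str], bytes],
) -> bytes:
    """Run one conversational turn through the four stages in order."""
    text = asr(audio)             # speech -> text
    intent = nlu(text)            # text -> intent label
    reply = dialog(intent, text)  # intent + text -> reply text
    return tts(reply)             # reply text -> audio


# Stub stages so the sketch runs without any external service.
def stub_asr(audio: bytes) -> str:
    return audio.decode("utf-8")


def stub_nlu(text: str) -> str:
    return "greeting" if "hello" in text.lower() else "unknown"


def stub_dialog(intent: str, text: str) -> str:
    if intent == "greeting":
        return "Hi! How can I help?"
    return "Sorry, could you rephrase that?"


def stub_tts(reply: str) -> bytes:
    return reply.encode("utf-8")


print(run_turn(b"Hello there", stub_asr, stub_nlu, stub_dialog, stub_tts))
```

Keeping each stage behind a callable makes it straightforward to replace one engine without touching the others.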

Additional Layers:

- Memory: Use vector databases like Pinecone or Weaviate for contextual continuity.

- APIs: Retrieve real-time data or update records in backend systems.

- Fallback: Handle recognition errors and out-of-scope requests gracefully, or escalate to a human agent.
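
The memory layer boils down to "store past statements as vectors, then fetch the most similar ones for the current query." The toy sketch below shows that idea with a bag-of-words vector and cosine similarity held in a Python list. It does not use the Pinecone or Weaviate clients; `embed`, `cosine`, and `ConversationMemory` are hypothetical, and a real system would call an embedding model and a vector database instead.

```python
import math
from collections import Counter
from typing import List, Tuple


def embed(text: str) -> Counter:
    # Toy bag-of-words "embedding"; a real system would call an embedding model.
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[token] * b[token] for token in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class ConversationMemory:
    """In-memory stand-in for a vector database."""

    def __init__(self) -> None:
        self._items: List[Tuple[str, Counter]] = []

    def add(self, text: str) -> None:
        self._items.append((text, embed(text)))

    def recall(self, query: str, k: int = 1) -> List[str]:
        """Return the k stored texts most similar to the query."""
        q = embed(query)
        ranked = sorted(self._items, key=lambda item: cosine(q, item[1]), reverse=True)
        return [text for text, _ in ranked[:k]]


memory = ConversationMemory()
memory.add("user prefers window seat on flights")
memory.add("user is allergic to peanuts")
print(memory.recall("which seat does the user like on flights"))
```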

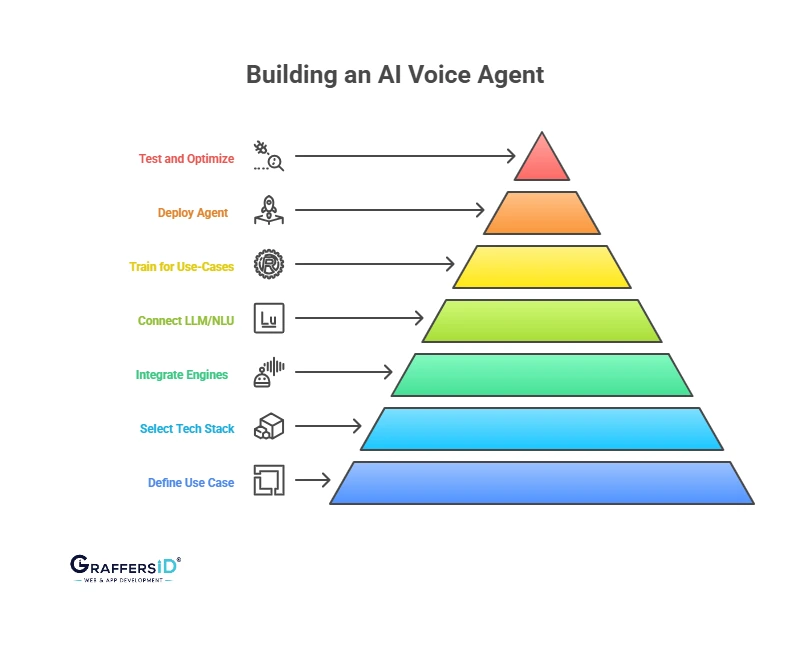

How to Build an AI Voice Agent in 2026: Step-by-Step Guide

Step 1: Define Your Use Case

- Before you begin, have a clear idea of your goals. AI voice agents should be built with a specific goal in mind.

- The desired use case has an impact on everything, from the type of data required for training to the tone and structure of the conversational flow.

- A well-defined objective ensures that you build an effective solution that offers genuine value to end users.

Step 2: Choose Voice Tech Stack

- Selecting the appropriate technological stack is important to your AI voice agent’s success. Consider your team’s technical skills; if you don’t have much AI experience, low-code platforms like Voiceflow or Alan AI can help you get started quickly.

- Assess your budget: open-source tools like Whisper or Rasa can be cost-effective but may require more configuration.

- The deployment platform is equally important. For instance, if you’re building a mobile app, you might need lightweight SDKs and cross-platform support. Meanwhile, a voice bot for call centers would demand telephony integration via Twilio or similar tools.

- Consider your language requirements. If you want to reach users from different regions, choose solutions that offer multilingual transcription and synthesis.

- A balanced tech stack ensures long-term sustainability, scalability, and performance.

Step 3: Integrate ASR & TTS

- After your use case and stack are decided, integrate the Text-to-Speech (TTS) and Automatic Speech Recognition (ASR) components.

- Because ASR converts spoken input to text, every later stage depends on its accuracy. Select an engine that stays accurate in noisy environments and with regional accents. OpenAI’s Whisper performs well in real-world conditions and is a strong choice for multilingual use.

- For TTS, the objective is to make the response sound natural and interesting. ElevenLabs and others offer speech synthesis with highly human-sounding emotional nuance. If your product is intended for a global audience, choose TTS tools with multilingual and multidialect support.

- Lower latency noticeably improves the user experience, so make sure these engines meet your latency requirements.

- When integrating, compatibility with your hosting environment, whether cloud-based or on-device, must also be considered.
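
To check the latency requirement mentioned above, one option is to time each stage and compare it against a per-stage budget. The sketch below is illustrative only: `timed` and `over_budget` are hypothetical helpers, and the millisecond budgets in the usage lines are made-up numbers, not recommendations.

```python
import time
from typing import Any, Callable, Dict, Tuple


def timed(stage: Callable[[Any], Any], value: Any) -> Tuple[Any, float]:
    """Run one stage and return (result, elapsed milliseconds)."""
    start = time.perf_counter()
    result = stage(value)
    return result, (time.perf_counter() - start) * 1000.0


def over_budget(latencies_ms: Dict[str, float], budget_ms: Dict[str, float]) -> Dict[str, float]:
    """Return the stages that exceeded their budget, with the overshoot in ms."""
    return {
        name: latencies_ms[name] - limit
        for name, limit in budget_ms.items()
        if latencies_ms.get(name, 0.0) > limit
    }


# Hypothetical measurements and budgets, in milliseconds.
measured = {"asr": 350.0, "tts": 120.0}
budget = {"asr": 300.0, "tts": 200.0}
print(over_budget(measured, budget))
```

In practice the measurements would come from wrapping the real ASR and TTS calls with `timed` and aggregating over many turns rather than a single sample.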

Step 4: Connect LLM/NLU

- This step gives your voice agent the ability to understand the user and respond intelligently.

- Once the voice has been converted to text, user inquiries can be interpreted by Natural Language Understanding (NLU) engines or Large Language Models (LLMs).

- For this, GPT-4 Turbo from OpenAI or Cohere’s APIs are strong options because they can parse complex language, track context, and handle follow-up queries.

- Open-source solutions such as Rasa can be tailored for specific intents and entity recognition, making them ideal for highly regulated businesses or those requiring granular control.

- Once LLMs are integrated, your agent can hold more natural, human-like conversations instead of just returning pre-written responses.

- Additionally, to improve accuracy and relevance, you can fine-tune these models on domain-specific data or use prompt engineering.
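
Follow-up queries only work if the model sees the earlier turns. A common pattern is to assemble a message list containing a system prompt, the most recent history, and the new user turn. The sketch below assumes a chat-style list of `role`/`content` dictionaries, a shape used by several chat APIs; confirm the exact format against your provider's documentation. `SYSTEM_PROMPT` and `build_messages` are hypothetical names.

```python
from typing import Dict, List

# Hypothetical system prompt; this is where prompt engineering for tone happens.
SYSTEM_PROMPT = "You are a concise voice assistant for a bank. Keep replies under two sentences."


def build_messages(
    history: List[Dict[str, str]],
    user_text: str,
    max_turns: int = 6,
) -> List[Dict[str, str]]:
    """Assemble system prompt + recent history + the new user turn."""
    # Guard against max_turns == 0, since history[-0:] would return everything.
    recent = history[-max_turns:] if max_turns > 0 else []
    return [
        {"role": "system", "content": SYSTEM_PROMPT},
        *recent,
        {"role": "user", "content": user_text},
    ]


history = [
    {"role": "user", "content": "What is my checking balance?"},
    {"role": "assistant", "content": "Your checking balance is available in the app."},
]
for message in build_messages(history, "And my savings account?"):
    print(message["role"], "|", message["content"])
```

Capping the history keeps the prompt short, which also helps with the latency concerns raised in Step 3.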

Step 5: Train for Domain-Specific Needs

- Generic models are powerful, but personalization is key to success. Training your AI voice agent on real-world conversations helps improve its effectiveness.

- Start by collecting interaction logs, support transcripts, or simulated dialogues that reflect your target scenarios.

- Annotate this data to label intents (like “book appointment,” “check balance,” etc.) and entities (such as date, time, location).

- For LLMs, crafting tailored prompts or fine-tuning with your domain-specific vocabulary ensures the output is aligned with your business tone.

- Test the agent with edge cases and varied accents to gauge robustness.

- Continuous training based on feedback and new data enables your voice agent to evolve over time and handle increasingly complex interactions.
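
As an illustration of the annotation step, the sketch below shows one possible record format, with an intent label and entity spans stored as character offsets, plus a small validator that catches bad spans before training. The format and the `entity_values` and `validate` helpers are hypothetical; tools such as Rasa define their own training-data formats.

```python
from typing import Dict, List

# Hypothetical annotation format: entity spans are character offsets into "text".
EXAMPLES: List[Dict] = [
    {
        "text": "Book an appointment on Friday at 10 am",
        "intent": "book_appointment",
        "entities": [
            {"label": "date", "start": 23, "end": 29},
            {"label": "time", "start": 33, "end": 38},
        ],
    },
]


def entity_values(example: Dict) -> Dict[str, str]:
    """Map each entity label to the text its span covers."""
    text = example["text"]
    return {e["label"]: text[e["start"]:e["end"]] for e in example["entities"]}


def validate(example: Dict) -> List[str]:
    """Return a list of problems; an empty list means the record looks usable."""
    problems = []
    if not example.get("intent"):
        problems.append("missing intent")
    length = len(example["text"])
    for entity in example["entities"]:
        if not (0 <= entity["start"] < entity["end"] <= length):
            problems.append(f"bad span for {entity['label']}")
    return problems


for example in EXAMPLES:
    print(example["intent"], entity_values(example), validate(example))
```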

Step 6: Deploy the Voice Agent Across Channels

- Now that your voice agent has been integrated and trained, it’s time to launch.

- Your target platform should be compatible with your deployment approach. If you’re building a mobile experience, integrate the agent with iOS or Android SDKs that support real-time audio processing.

- Twilio, Vonage, or Kaleyra are some of the tools that help connect your voice agent with phone infrastructure for telephony systems like IVR.

- WebRTC or integrations based on JavaScript can facilitate smooth browser-based interactions on the web.

- Edge deployment with optimized ASR/NLU models provides low latency and offline availability for automotive applications or IoT devices.

- When deploying, make sure your infrastructure can handle unexpected user behavior or service outages through fallback mechanisms, secure data handling, and auto-scaling.
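
One way to handle a service outage is to retry the failing call with exponential backoff and, if it still fails, return a safe fallback reply. The sketch below is a simplified illustration: `call_with_fallback` is a hypothetical helper, the delays are arbitrary, and production code should catch only the specific exceptions its client library raises.

```python
import time
from typing import Callable


def call_with_fallback(
    primary: Callable[[], str],
    fallback_reply: str,
    retries: int = 2,
    base_delay_s: float = 0.2,
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    """Try the primary service; back off and retry on failure, then fall back."""
    for attempt in range(retries + 1):
        try:
            return primary()
        except Exception:  # broad on purpose for the sketch; narrow this in real code
            if attempt < retries:
                sleep(base_delay_s * (2 ** attempt))  # exponential backoff
    return fallback_reply


def always_down() -> str:
    # Simulates an unavailable upstream service.
    raise TimeoutError("upstream unavailable")


print(call_with_fallback(
    always_down,
    "I'm having trouble right now. Let me connect you to an agent.",
    sleep=lambda seconds: None,  # skip real waiting in this demo
))
```

Passing `sleep` as a parameter keeps the function testable without real delays.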

Step 7: Test and Optimize

- For an AI voice agent to be successful, development must continue after deployment.

- Monitor latency metrics to make sure response times stay within target under real-world conditions.

- Track accuracy at two levels: how often the ASR transcribes speech correctly, and how often the NLU identifies the correct intent.

- Measure drop-off rates to identify where users abandon conversations and investigate if the cause is unclear prompts, poor UX, or errors.

- Implement strong error handling to handle misrecognition efficiently; use fallback responses, reprompts, or confirmations wherever required.

- A/B test various voices or dialogue flows to see which ones work best.

- Retrain your models, increase intent coverage, and boost customer satisfaction by constantly collecting user feedback.
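
Two of the accuracy metrics above are easy to compute from logged data: word error rate (WER) for the ASR, and intent accuracy for the NLU. The sketch below uses the standard WER definition (word-level edit distance divided by the number of reference words); the function names and sample strings are illustrative.

```python
from typing import Sequence


def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = word-level edit distance / number of reference words."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # Levenshtein distance, keeping only the previous row of the table.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        cur = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cur.append(min(
                prev[j] + 1,                           # deletion
                cur[j - 1] + 1,                        # insertion
                prev[j - 1] + (ref_word != hyp_word),  # substitution or match
            ))
        prev = cur
    return prev[-1] / len(ref)


def intent_accuracy(expected: Sequence[str], predicted: Sequence[str]) -> float:
    """Fraction of utterances whose predicted intent matches the label."""
    if not expected or len(expected) != len(predicted):
        raise ValueError("expected and predicted must be non-empty and equal length")
    return sum(e == p for e, p in zip(expected, predicted)) / len(expected)


print(word_error_rate("check my account balance", "check my count balance"))
print(intent_accuracy(["a", "b", "c", "d"], ["a", "b", "x", "d"]))
```

Tracking both numbers separately shows whether a misunderstanding originated in transcription or in intent classification.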

AI Voice Agent Security Best Practices

- Encryption in Transit: Secure all voice data during transmission using protocols like TLS to prevent interception.

- Strong Authentication: Use multi-factor authentication for admin access and combine voice biometrics with secondary verification for users.

- Data Minimization & Anonymization: Collect only necessary data and anonymize it to protect user identity.

- Secure Storage: Encrypt data at rest using standards like AES-256 and choose compliant cloud providers.

- Regular Audits: Conduct frequent security reviews and penetration tests to spot vulnerabilities.
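
As a small illustration of data minimization and anonymization, the sketch below redacts two kinds of sensitive values from a transcript and replaces a user ID with a keyed hash. The regular expressions are deliberately simplistic and will miss many real-world formats; `redact` and `pseudonymize` are hypothetical helpers, and the secret key shown is a placeholder that would come from a secrets manager in practice.

```python
import hashlib
import hmac
import re

# Illustrative patterns only; production PII detection needs far broader coverage.
CARD_PATTERN = re.compile(r"\b\d(?:[ -]?\d){12,15}\b")
EMAIL_PATTERN = re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b")


def redact(transcript: str) -> str:
    """Replace card-like numbers and email addresses with placeholders."""
    transcript = CARD_PATTERN.sub("[CARD]", transcript)
    return EMAIL_PATTERN.sub("[EMAIL]", transcript)


def pseudonymize(user_id: str, secret_key: bytes) -> str:
    """Return a stable keyed hash so logs can be joined without the raw ID."""
    return hmac.new(secret_key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()


print(redact("My card is 4111 1111 1111 1111 and my email is jo@example.com"))
print(pseudonymize("user-42", b"placeholder-key")[:16])
```

Keyed hashing is pseudonymization rather than full anonymization: anyone holding the key can recompute the mapping, so the key needs the same protection as the data.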

AI Voice Agent Security and Compliance Checklist (2026)

- User Consent: Clearly inform users about data usage and get explicit consent.

- Data Rights: Enable users to access and delete their data as required by regulations such as GDPR.

- Retention Policies: Limit how long voice data is stored to minimize risks.
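
A retention policy can be enforced with a scheduled job that drops anything older than the allowed window. The sketch below shows only the filtering logic under stated assumptions: records are dictionaries with a timezone-aware `created_at` field, and both the `purge_expired` helper and the 30-day window are hypothetical. The appropriate retention period depends on your legal requirements.

```python
from datetime import datetime, timedelta, timezone
from typing import Dict, List


def purge_expired(records: List[Dict], retention_days: int, now: datetime) -> List[Dict]:
    """Keep only records created within the retention window."""
    cutoff = now - timedelta(days=retention_days)
    return [record for record in records if record["created_at"] >= cutoff]


# Fixed "now" so the example is reproducible.
now = datetime(2026, 3, 1, tzinfo=timezone.utc)
recordings = [
    {"id": "rec-1", "created_at": datetime(2026, 2, 20, tzinfo=timezone.utc)},
    {"id": "rec-2", "created_at": datetime(2025, 12, 1, tzinfo=timezone.utc)},
]
kept = purge_expired(recordings, retention_days=30, now=now)
print([record["id"] for record in kept])
```

A real job would also delete the underlying audio files and any derived transcripts, not just the index entries.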

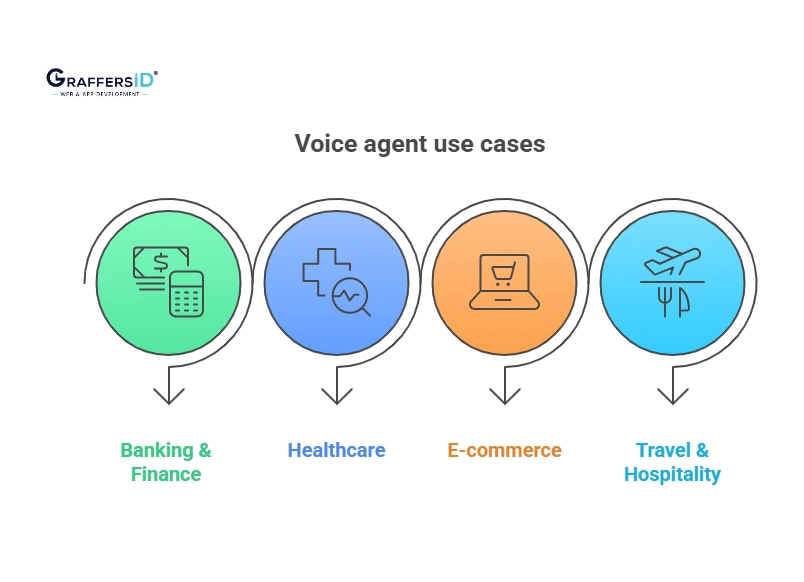

Real-World Use Cases of Voice Agents in 2026

1. Banking & Finance

AI voice agents allow customers to check balances, transfer funds, and pay bills through voice commands, reducing wait times and human intervention. With voice biometrics for authentication and personalized financial advice, banks enhance both security and user experience.

2. Healthcare

AI voice agents help automate healthcare services by scheduling appointments, sending medication reminders, and assisting with symptom checks. In telemedicine, they transcribe consultations and provide instant access to patient records, improving efficiency and accessibility.

3. E-commerce

E-commerce platforms use voice agents for hands-free shopping, letting customers search, order, and track products via voice. They also deliver personalized recommendations and streamline support for returns and FAQs, boosting customer satisfaction.

4. Travel & Hospitality

Travelers use voice agents to book flights, hotels, and rentals, get real-time updates, and manage itineraries. In hotels, guests control room settings and request services via voice, improving convenience and service quality.

Future Trends in AI Voice Agents (2026–2030): What’s Next in Voice AI

AI voice agents are evolving rapidly. Here are five key trends expected to shape their future:

- Autonomous Voice Agents: AI voice agents are evolving to independently make decisions and take actions based on user input, context, and predefined goals without human intervention.

- Emotionally Intelligent AI: Advanced voice analysis enables agents to detect user emotions and sentiments in real time, allowing for more empathetic and adaptive responses.

- Voice Cloning & Brand Identity: Brands can now replicate specific voices or tones using AI voice cloning to maintain a consistent and personalized audio identity across all interactions.

- Immersive AR/VR Agents: Voice agents are being embedded into augmented and virtual reality environments to offer immersive, hands-free control and guidance within 3D spaces.

- Hyper-Personalized Conversations: Memory-equipped agents retain user preferences and interaction history over time, enabling deeply personalized and context-aware dialogue experiences.

Conclusion

AI voice agents in 2026 are no longer futuristic; they’re enterprise-ready, revenue-driving, and customer-first. Whether you’re optimizing call centers, scaling e-commerce, or building voice-first IoT products, the right tech stack and strategy can transform your business.

Looking to build a custom AI Voice Agent tailored for your industry?

GraffersID helps startups and enterprises hire AI developers who can build scalable, secure, and high-performing voice applications. With proven expertise in OpenAI, LLMs, and voice interfaces, we bring your vision to life. Contact us now!