AI agents have moved far beyond experimental chatbots. In 2026, businesses expect them to function like long-running digital employees, handling complex tasks, retaining context, learning from past interactions, and continuously improving outcomes.

Yet most AI agents still fail at one critical capability. They forget everything the moment a session ends.

This single limitation is why many AI initiatives stall after proof-of-concept. Without memory, agents repeat questions, lose context, and struggle with multi-step workflows, making them unreliable for real business operations.

AI agent memory is the breakthrough that changes this. It is what separates one-off, demo-level AI agents from production-ready enterprise AI systems that scale and learn.

For CTOs, product leaders, and executives, memory-based AI agents unlock:

- Persistent personalization across sessions and users

- Reliable multi-step automation for long-running workflows

- Context-aware decision-making that improves over time

- Lower operational costs through reduced repetition and smarter execution

In this guide, we break down what AI agent memory is, why it has become essential in 2026, and how to build memory-enabled AI agents using modern architectures, tools, and best practices, so your AI systems move from impressive demos to real, scalable business assets.

What is AI Agent Memory and How Does It Work?

AI agent memory refers to an AI agent’s ability to store, retrieve, and reuse information from past interactions so it can make better decisions and take more accurate actions in the future.

Unlike traditional AI systems that respond only to the current prompt, memory-enabled AI agents learn from experience. They remember prior conversations, user preferences, task outcomes, and important contextual details, and then apply that knowledge in future interactions.

In simple terms: AI agent memory allows an AI system to remember what happened before and act more intelligently over time.

This capability transforms AI agents from stateless responders into adaptive, long-running systems suitable for real business workflows in 2026.


AI Agent Memory vs. Context Window: What’s the Difference?

A common misconception is that AI agent memory is the same as using a larger context window. While both provide additional information to the model, they serve very different purposes.

Key Differences Between Context Window and AI Agent Memory

| Context Window | AI Agent Memory |
|---|---|
| Temporary and session-based | Persistent across sessions |
| Limited by the model's maximum token count | Can grow independently of the model's token limit |
| Lost when the session ends | Retained and reused over time |
| Cost grows with every token sent in each request | Retrieves only relevant items, keeping prompts smaller |

A context window only helps the AI understand the current conversation. Once the session ends, everything is lost.

AI agent memory, on the other hand, acts as a persistent cognitive layer. It allows agents to recall relevant past information on demand, instead of reloading entire conversation histories into every prompt.
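To make the distinction concrete, here is a minimal Python sketch, assuming a hypothetical `PersistentMemory` class backed by a local JSON file (a real system would use a database or a memory service). The per-session buffer starts empty in every new session, while the file-backed store is still readable from a later one.

```python
import json
import tempfile
from pathlib import Path
from typing import Optional


class SessionContext:
    """Per-session message buffer, analogous to a context window."""

    def __init__(self) -> None:
        self.messages: list = []

    def add(self, message: str) -> None:
        self.messages.append(message)


class PersistentMemory:
    """Hypothetical file-backed memory that outlives any single session."""

    def __init__(self, path: Path) -> None:
        self.path = path

    def _load(self) -> dict:
        return json.loads(self.path.read_text()) if self.path.exists() else {}

    def remember(self, key: str, value: str) -> None:
        data = self._load()
        data[key] = value
        self.path.write_text(json.dumps(data))

    def recall(self, key: str) -> Optional[str]:
        return self._load().get(key)


store_path = Path(tempfile.mkdtemp()) / "memory.json"

# Session 1: the user states a preference; it goes into the session buffer and into memory.
session_1 = SessionContext()
session_1.add("User: Please answer in Python from now on.")
PersistentMemory(store_path).remember("preferred_language", "Python")

# Session 2: a fresh context starts empty, but a new memory handle still finds the preference.
session_2 = SessionContext()
recalled = PersistentMemory(store_path).recall("preferred_language")
print(len(session_2.messages), recalled)  # 0 Python
```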

Why Does AI Agent Memory Matter More Than Larger Context Windows in 2026?

As AI agents handle long-running tasks, personalized user experiences, and autonomous workflows, relying solely on larger context windows becomes inefficient and expensive.

Memory-based architectures enable:

- Smarter, personalized responses over time

- Consistent behavior across sessions

- Reduced prompt size and lower operational costs

- Scalable AI systems that improve with usage

In 2026, true intelligence in AI agents is defined not by how much context they can hold, but by what they can remember, retrieve, and apply effectively.


Why Is AI Agent Memory Essential in 2026?

AI agents are no longer simple assistants. They are becoming autonomous systems embedded into business operations.

Key Reasons Why Memory Matters in 2026

- AI agents now run long-lived workflows, not just single chats

- Enterprises expect continuity and personalization across interactions

- Repeated context injection increases cost and latency

- Businesses want learning systems, not static AI tools

In 2026, AI agents are expected to behave like digital employees, and employees rely on memory to perform effectively.

What Happens When AI Agents Don’t Have Memory?

Without memory, AI agents tend to:

- Repeat the same questions

- Lose track of goals mid-task

- Fail at multi-day or multi-step workflows

- Deliver inconsistent and unreliable outputs

These limitations are a major reason many AI initiatives fail to move beyond pilot stages.

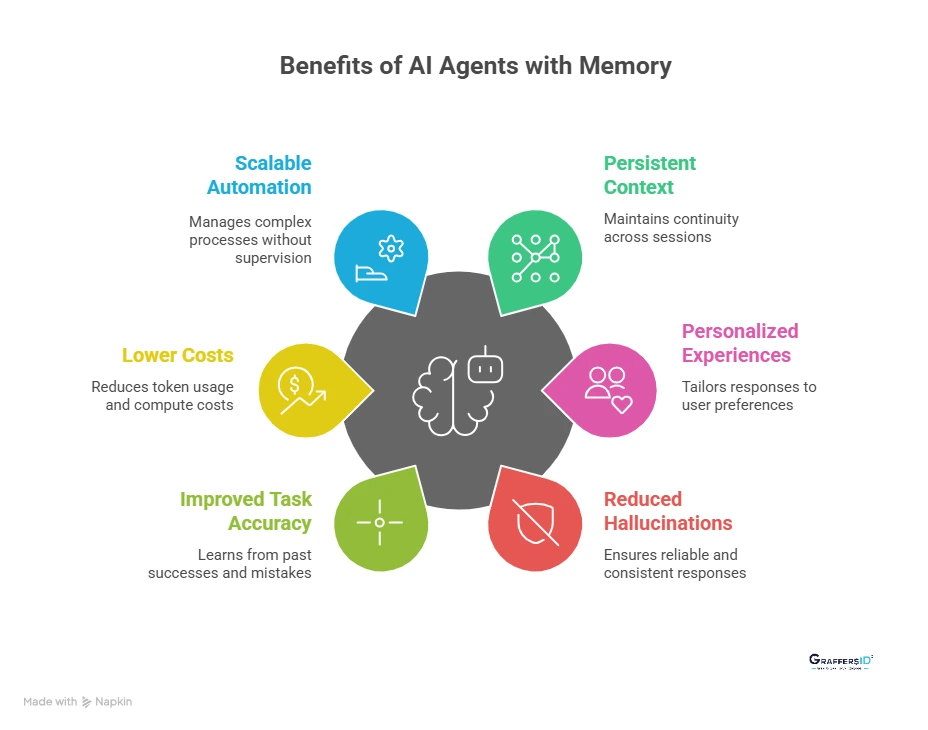

Key Benefits of AI Agents With Memory in 2026

Memory transforms AI agents from reactive, session-based tools into adaptive systems that improve over time. In 2026, this capability is what enables AI agents to operate reliably in real business environments.

1. Persistent Context Across Sessions: AI agents with memory retain important information across interactions, ensuring seamless continuity in conversations, tasks, and workflows. Users no longer need to repeat context, making multi-step processes consistent and reliable for enterprises.

2. Personalized User Experiences: Memory enables agents to remember user preferences, behaviors, and past decisions. Over time, responses become increasingly tailored, providing the level of personalization that modern businesses and customers expect in 2026.

3. Reduced Hallucinations and Inconsistent Responses: By storing verified information and past decisions, memory gives agents a factual reference point, which reduces inconsistencies and hallucinations. This grounding makes responses more reliable, which is critical for customer-facing and enterprise-grade applications.

4. Improved Task Accuracy Over Time: Memory allows AI agents to learn from prior successes and mistakes. This learning loop improves task execution, helping agents handle complex or repeatable workflows with higher accuracy.

5. Lower Token Usage and Compute Costs: Agents can retrieve only relevant information from memory instead of reinjecting the full context every time. This reduces token usage and compute costs, making large-scale deployments more efficient and cost-effective.

6. Scalable Automation for Real Business Workflows: Memory-based AI agents can manage multi-step, multi-day processes without constant human supervision. This enables scalable, reliable automation across teams, systems, and business operations.

Types of AI Agent Memory in 2026

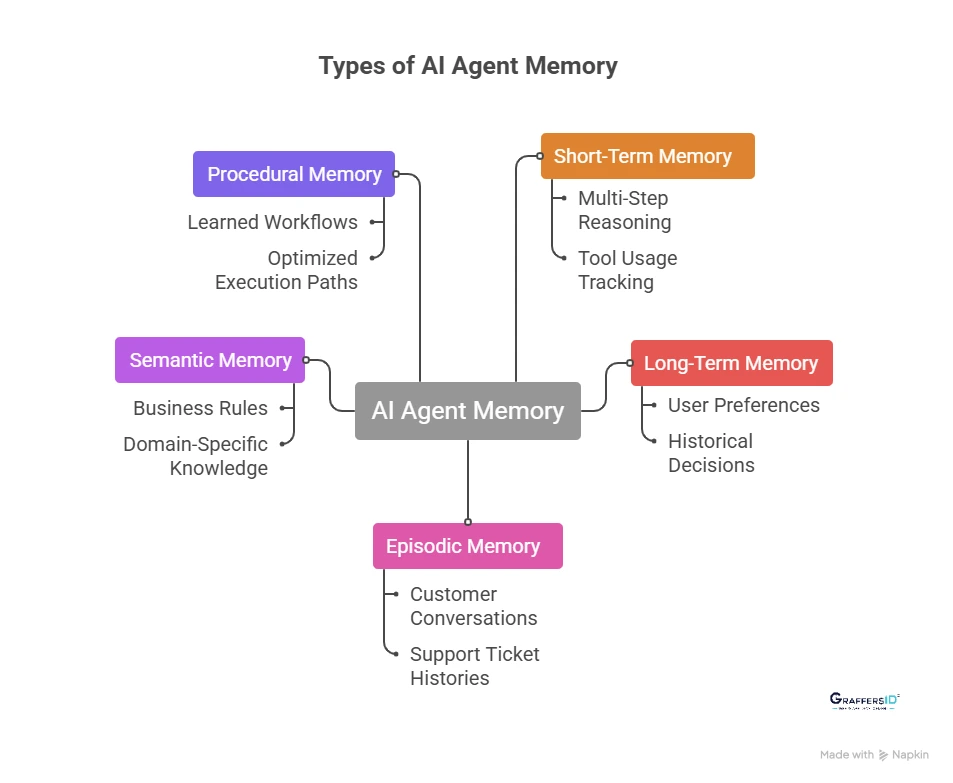

Modern AI agents use multiple memory types, each serving a distinct purpose. Understanding these memory types is essential for building scalable, production-ready AI agents in 2026. Below are the core types of AI agent memory:

1. Short-Term Memory (Working Memory)

Short-term memory stores information that an AI agent needs during an active session. It is temporary and exists only while the agent is performing a task or responding to a user.

This memory type helps the agent stay coherent and focused while reasoning step by step.

Short-term memory is used for:

- Multi-step reasoning and planning

- Tracking tool usage and execution flow

- Holding temporary variables and intermediate results

Common implementations include:

- Prompt buffers

- Scratchpads for reasoning chains

- Execution state and task context

Short-term memory is critical for ensuring that an AI agent can complete complex tasks without losing context mid-execution.
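A minimal sketch of a working-memory scratchpad, assuming a hypothetical `WorkingMemory` dataclass; the tool name `price_lookup` and its result are illustrative. The scratchpad is rendered back into text so it can be included in the next reasoning prompt, and it is simply discarded when the task ends.

```python
from dataclasses import dataclass, field
from typing import Any


@dataclass
class WorkingMemory:
    """Hypothetical per-task scratchpad: lives only for one task execution."""
    goal: str
    steps: list = field(default_factory=list)       # reasoning notes, in order
    tool_calls: list = field(default_factory=list)  # (tool_name, result) pairs
    variables: dict = field(default_factory=dict)   # intermediate results

    def note(self, thought: str) -> None:
        self.steps.append(thought)

    def record_tool(self, name: str, result: Any) -> None:
        self.tool_calls.append((name, result))

    def render(self) -> str:
        """Serialize the scratchpad so it can be placed in the next prompt."""
        lines = [f"Goal: {self.goal}"]
        lines += [f"Step {i + 1}: {s}" for i, s in enumerate(self.steps)]
        lines += [f"Tool {name} -> {result}" for name, result in self.tool_calls]
        return "\n".join(lines)


wm = WorkingMemory(goal="Compute order total")
wm.note("Look up item prices")
wm.record_tool("price_lookup", {"widget": 4.0, "gadget": 6.5})
wm.variables["total"] = sum(wm.tool_calls[-1][1].values())
wm.note(f"Total is {wm.variables['total']}")
print(wm.render())
```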

2. Long-Term Memory

Long-term memory allows AI agents to remember information across sessions, making them stateful rather than stateless.

This is the foundation of persistent, personalized AI agents in real-world applications.

Long-term memory stores:

- User preferences and behavior patterns

- Historical decisions and outcomes

- Learned facts from previous interactions

Long-term memory is typically backed by:

- Vector databases

- Document-based storage systems

- Knowledge graphs

Long-term memory enables AI agents to improve over time and deliver consistent, context-aware experiences.
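As an illustration of document-style long-term storage, the sketch below uses Python's built-in `sqlite3` module; the table layout and the helper names `save_memory` and `load_memories` are hypothetical. Because the data lives in a file, a new connection opened in a later session sees everything earlier sessions wrote.

```python
import sqlite3
import tempfile
from contextlib import closing
from pathlib import Path
from typing import Optional

SCHEMA = """
CREATE TABLE IF NOT EXISTS memories (
    user_id    TEXT NOT NULL,
    kind       TEXT NOT NULL,  -- e.g. 'preference', 'decision', 'fact'
    content    TEXT NOT NULL,
    created_at TEXT DEFAULT CURRENT_TIMESTAMP
)
"""


def _connect(db_path: Path) -> sqlite3.Connection:
    conn = sqlite3.connect(db_path)
    conn.execute(SCHEMA)  # create the table on first use
    return conn


def save_memory(db_path: Path, user_id: str, kind: str, content: str) -> None:
    with closing(_connect(db_path)) as conn:
        with conn:  # commits the INSERT when the block exits without error
            conn.execute(
                "INSERT INTO memories (user_id, kind, content) VALUES (?, ?, ?)",
                (user_id, kind, content),
            )


def load_memories(db_path: Path, user_id: str, kind: Optional[str] = None) -> list:
    query = "SELECT content FROM memories WHERE user_id = ?"
    params = [user_id]
    if kind is not None:
        query += " AND kind = ?"
        params.append(kind)
    query += " ORDER BY rowid"
    with closing(_connect(db_path)) as conn:
        return [row[0] for row in conn.execute(query, params)]


db = Path(tempfile.mkdtemp()) / "agent_memory.db"
save_memory(db, "u42", "preference", "Prefers weekly summary emails")
save_memory(db, "u42", "decision", "Chose the annual billing plan")

# A later session opens a brand-new connection and still sees what was stored.
prefs = load_memories(db, "u42", kind="preference")
everything = load_memories(db, "u42")
print(prefs)
```

Vector databases or knowledge graphs would replace the SQL table in larger systems, but the save-then-reload pattern stays the same.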

3. Episodic Memory

Episodic memory captures specific past interactions or events that the agent can recall when needed.

It helps AI agents understand what happened before and respond accordingly.

Common examples include:

- Previous customer conversations

- Support ticket histories

- Past project interactions or milestones

Episodic memory is especially important for customer support, sales, and service-based AI agents, where continuity and historical awareness directly impact user satisfaction.
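The sketch below shows one way to model episodic memory, assuming a hypothetical `Episode` record with a timestamp, a short summary, and tags. Recall filters by customer and topic and returns the most recent episodes first, which is what a support agent typically needs before replying.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import FrozenSet, List


@dataclass(frozen=True)
class Episode:
    customer_id: str
    timestamp: datetime
    summary: str
    tags: FrozenSet[str]


class EpisodicMemory:
    """Hypothetical append-only log of past interactions."""

    def __init__(self) -> None:
        self._episodes: List[Episode] = []

    def record(self, episode: Episode) -> None:
        self._episodes.append(episode)

    def recall(self, customer_id: str, tag: str, limit: int = 3) -> List[Episode]:
        matches = [e for e in self._episodes if e.customer_id == customer_id and tag in e.tags]
        matches.sort(key=lambda e: e.timestamp, reverse=True)  # most recent first
        return matches[:limit]


mem = EpisodicMemory()
mem.record(Episode("c1", datetime(2026, 1, 5), "Login failed; password reset sent", frozenset({"login"})))
mem.record(Episode("c1", datetime(2026, 2, 10), "Login failed again after SSO change; escalated", frozenset({"login", "sso"})))
mem.record(Episode("c1", datetime(2026, 2, 12), "Asked how to download invoices", frozenset({"billing"})))
mem.record(Episode("c2", datetime(2026, 2, 11), "Login issue on mobile app", frozenset({"login"})))

history = mem.recall("c1", "login")
for e in history:
    print(e.timestamp.date(), e.summary)
```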

4. Semantic Memory

Semantic memory stores general knowledge and factual information that an AI agent uses to reason and make decisions.

This memory type focuses on what is true, not what happened.

Examples include:

- Business rules and policies

- Domain-specific knowledge

- Product or service information

Semantic memory often overlaps with structured knowledge bases and is commonly integrated with retrieval systems to ensure accuracy and reliability.
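Semantic memory can be sketched as a store keyed by (subject, attribute), assuming hypothetical names such as `SemanticMemory` and `set_fact`. Unlike an episodic log, a new value replaces the old one, because the goal is to hold what is currently true; the `source` field keeps each fact traceable.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Fact:
    value: str
    source: str  # where the fact came from, kept for traceability


class SemanticMemory:
    """Hypothetical store of 'what is true': one current value per (subject, attribute)."""

    def __init__(self) -> None:
        self._facts: dict = {}

    def set_fact(self, subject: str, attribute: str, value: str, source: str) -> None:
        # Overwrites any older value: semantic memory tracks current truth, not history.
        self._facts[(subject, attribute)] = Fact(value, source)

    def lookup(self, subject: str, attribute: str) -> Optional[Fact]:
        return self._facts.get((subject, attribute))

    def facts_about(self, subject: str) -> dict:
        return {attr: f.value for (subj, attr), f in self._facts.items() if subj == subject}


kb = SemanticMemory()
kb.set_fact("refund_policy", "window", "30 days", source="policy_v1.pdf")
kb.set_fact("refund_policy", "channel", "original payment method", source="policy_v1.pdf")
kb.set_fact("refund_policy", "window", "14 days", source="policy_v2.pdf")  # policy update

print(kb.lookup("refund_policy", "window"))
print(kb.facts_about("refund_policy"))
```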

5. Procedural Memory

Procedural memory defines how an AI agent performs tasks. It stores learned behaviors, optimized workflows, and preferred action sequences.

This memory type allows agents to get better with repetition.

Procedural memory includes:

- Learned workflows and task sequences

- Preferred tool usage patterns

- Optimized execution paths based on past success

Procedural memory is essential for autonomous agents and workflow automation, enabling continuous improvement without manual reprogramming.
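One simple way to let an agent get better with repetition is to track the success rate of each action sequence per task type. The sketch below assumes a hypothetical `ProceduralMemory` class and uses Laplace smoothing so that untried plans start at 0.5 rather than 0; the plan and step names are illustrative.

```python
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple

Plan = Tuple[str, ...]


class ProceduralMemory:
    """Hypothetical record of how well each action sequence worked per task type."""

    def __init__(self) -> None:
        # (task_type, plan) -> [successes, attempts]
        self._stats: Dict[Tuple[str, Plan], List[int]] = defaultdict(lambda: [0, 0])

    def record_outcome(self, task_type: str, plan: Plan, success: bool) -> None:
        stats = self._stats[(task_type, plan)]
        stats[0] += int(success)
        stats[1] += 1

    def success_rate(self, task_type: str, plan: Plan) -> float:
        successes, attempts = self._stats.get((task_type, plan), (0, 0))
        # Laplace smoothing: an untried plan starts at 0.5 instead of 0.
        return (successes + 1) / (attempts + 2)

    def best_plan(self, task_type: str, candidates: Sequence[Plan]) -> Plan:
        return max(candidates, key=lambda p: self.success_rate(task_type, p))


memory = ProceduralMemory()
plan_a = ("search_crm", "draft_email", "send")
plan_b = ("search_crm", "check_calendar", "draft_email", "send")
for ok in (True, False, False):
    memory.record_outcome("follow_up", plan_a, ok)
for ok in (True, True, True):
    memory.record_outcome("follow_up", plan_b, ok)

print(memory.best_plan("follow_up", [plan_a, plan_b]))
```

With the recorded outcomes, plan A scores (1 + 1) / (3 + 2) = 0.4 and plan B scores (3 + 1) / (3 + 2) = 0.8, so the agent prefers plan B the next time it handles a follow-up.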

Why Does Using Multiple Memory Types Matter?

In 2026, the most effective AI agents combine short-term, long-term, episodic, semantic, and procedural memory to deliver:

- Reliable multi-step automation

- Personalized user experiences

- Consistent decision-making

- Scalable, enterprise-ready AI systems

By designing agents with the right memory mix, businesses can move from basic AI assistants to intelligent, adaptive digital workers.

How Does AI Agent Memory Work in a Modern AI Agent Architecture?

A modern AI agent architecture in 2026 is no longer built around a single language model. Instead, it is a modular system where each component plays a distinct role in enabling reasoning, action, and learning over time.

A typical production-ready AI agent architecture includes the following core layers:

- Large Language Model (LLM): Acts as the reasoning engine. The LLM interprets inputs, generates responses, and decides what actions to take based on available context.

- Planner or Controller: Breaks complex goals into smaller steps, manages task execution order, and determines when tools or memory should be used.

- Tool Interfaces: Allow the agent to interact with external systems such as APIs, databases, CRMs, internal platforms, or automation tools.

- Memory Layer: Stores past interactions, decisions, user preferences, and learned behaviors. This layer enables the agent to maintain context across sessions and improve performance over time.

- Observability and Guardrails: Ensure reliability, safety, and compliance by monitoring agent behavior, logging decisions, applying constraints, and enforcing policies.

Together, these components allow AI agents to move beyond one-off conversations and operate as stateful, long-running systems suitable for real business use cases.
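The layers above can be sketched as a single loop. This is a minimal Python illustration under stated assumptions: `fake_llm` is a hypothetical stand-in for a real model call, the planner returns a fixed plan, the memory layer is a plain dictionary, and the guardrail only rejects unregistered tools.

```python
from typing import Callable, Dict, List


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a model call: echoes the last prompt line."""
    return "Draft reply to: " + prompt.splitlines()[-1]


class Agent:
    def __init__(self, llm: Callable[[str], str], tools: Dict[str, Callable], memory: Dict[str, List[str]]):
        self.llm = llm                  # reasoning engine
        self.tools = tools              # tool interfaces, by name
        self.memory = memory            # memory layer: user_id -> notes from earlier sessions
        self.audit_log: List[str] = []  # observability: every executed step
        self.last_prompt = ""

    def plan(self, goal: str) -> List[str]:
        # Planner/controller: a fixed plan standing in for LLM-driven planning.
        return ["lookup_account", "respond"]

    def run(self, user_id: str, goal: str) -> str:
        past = self.memory.get(user_id, [])
        observations: List[str] = []
        answer = ""
        for step in self.plan(goal):
            if step == "respond":
                self.last_prompt = "\n".join(["Known about user: " + "; ".join(past), *observations, goal])
                answer = self.llm(self.last_prompt)
            elif step in self.tools:
                observations.append(str(self.tools[step](user_id)))
            else:
                # Guardrail: refuse steps that are not registered tools.
                raise PermissionError(f"Unregistered tool: {step}")
            self.audit_log.append(step)
        # Write back to memory so the next session has this context.
        self.memory.setdefault(user_id, []).append(f"Asked: {goal}")
        return answer


tools = {"lookup_account": lambda uid: {"user": uid, "plan": "pro"}}
agent = Agent(fake_llm, tools, memory={})
first = agent.run("u1", "Why was I charged twice?")
second = agent.run("u1", "Any update on my billing issue?")
print(agent.last_prompt)
```

On the second call, the prompt includes the note written during the first call, which is exactly the continuity the memory layer exists to provide.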


AI Agent Memory vs RAG: What’s the Difference?

A common source of confusion in 2026 is the difference between Retrieval-Augmented Generation (RAG) and AI agent memory. While both provide additional context to AI systems, they serve fundamentally different purposes.

| RAG | AI Agent Memory |
|---|---|
| Knowledge lookup | Experience retention |
| Stateless | Stateful |
| Read-only | Read & write |
| Grounds answers in external knowledge | Improves continuity and personalization over time |

In real-world deployments, the most effective AI agents in 2026 use both RAG and memory together.

- RAG ensures the agent has access to accurate, up-to-date information.

- Memory ensures the agent learns from past interactions and behaves consistently.

This hybrid approach allows AI agents to:

- Answer questions accurately

- Maintain long-term context

- Execute complex, multi-step workflows

- Deliver personalized, reliable experiences at scale
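The read-only versus read-write split can be sketched as two small classes. This assumes hypothetical names (`KnowledgeBase`, `UserMemory`, `answer_context`) and uses simple word overlap in place of real retrieval: the knowledge base is only queried, while user memory is both read and updated on every call.

```python
import re
from typing import Dict, List


def _words(text: str) -> set:
    return set(re.findall(r"[a-z]+", text.lower()))


class KnowledgeBase:
    """RAG side: a read-only document collection queried at answer time."""

    def __init__(self, docs: Dict[str, str]) -> None:
        self._docs = dict(docs)

    def retrieve(self, query: str) -> List[str]:
        q = _words(query)
        return [text for text in self._docs.values() if q & _words(text)]


class UserMemory:
    """Memory side: read-write notes that change after every interaction."""

    def __init__(self) -> None:
        self._notes: Dict[str, List[str]] = {}

    def read(self, user_id: str) -> List[str]:
        return list(self._notes.get(user_id, []))

    def write(self, user_id: str, note: str) -> None:
        self._notes.setdefault(user_id, []).append(note)


def answer_context(kb: KnowledgeBase, mem: UserMemory, user_id: str, question: str) -> str:
    knowledge = kb.retrieve(question)               # read-only lookup
    history = mem.read(user_id)                     # read past experience
    mem.write(user_id, f"Asked about: {question}")  # write new experience
    return "\n".join(["Knowledge:", *knowledge, "History:", *history, f"Question: {question}"])


kb = KnowledgeBase({
    "refunds": "Refunds are issued within 14 days of purchase.",
    "shipping": "Standard shipping takes 3 to 5 business days.",
})
mem = UserMemory()
first = answer_context(kb, mem, "u7", "How long do refunds take?")
second = answer_context(kb, mem, "u7", "Are refunds available for gifts?")
print(second)
```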

AI Agent Memory Storage and Retrieval Methods in 2026

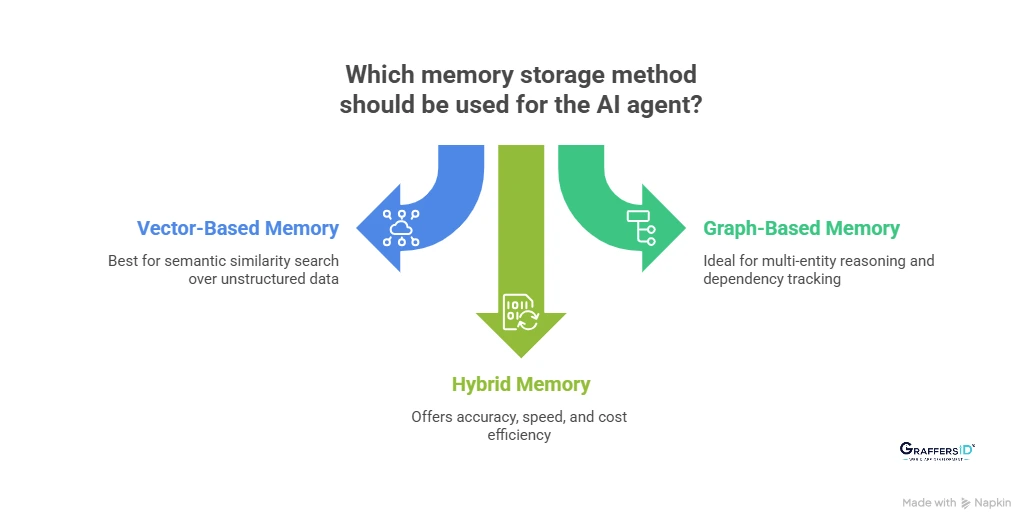

1. Vector-Based Memory Stores

Vector memory is one of the most widely used AI agent memory approaches in 2026. It stores past interactions as embeddings, enabling semantic similarity search over unstructured data. Tools such as the FAISS similarity-search library and vector databases like Pinecone and Weaviate help agents retrieve relevant memories based on meaning rather than exact keywords.
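The core idea of vector memory, embedding text and ranking by cosine similarity, can be sketched without any external service. This is a toy example: the hashed bag-of-words `embed` function is a stand-in for a real embedding model and only captures word overlap, not meaning, and the code does not use the FAISS, Pinecone, or Weaviate APIs.

```python
import math
import re
import zlib
from typing import List, Tuple

DIM = 256


def embed(text: str) -> List[float]:
    """Toy embedding: hashed bag of words, L2-normalised (stand-in for a real embedding model)."""
    vec = [0.0] * DIM
    for token in re.findall(r"[a-z]+", text.lower()):
        # crc32 is stable across runs, unlike Python's salted built-in hash() for str.
        vec[zlib.crc32(token.encode()) % DIM] += 1.0
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]


class VectorMemory:
    def __init__(self) -> None:
        self._items: List[Tuple[List[float], str]] = []

    def add(self, text: str) -> None:
        self._items.append((embed(text), text))

    def search(self, query: str, k: int = 2) -> List[str]:
        q = embed(query)
        # Dot product of unit vectors equals cosine similarity.
        scored = [(sum(a * b for a, b in zip(q, v)), text) for v, text in self._items]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return [text for _, text in scored[:k]]


memory = VectorMemory()
memory.add("User prefers dark mode in the dashboard")
memory.add("Invoice was paid late in March")
memory.add("User asked to export reports as CSV")
print(memory.search("dashboard dark mode preference", k=1))
```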

2. Graph-Based Memory Systems

Graph-based memory is used when relationships between entities matter more than raw similarity. It enables multi-entity reasoning, dependency tracking, and complex workflow understanding, making it ideal for enterprise systems and knowledge-intensive AI agents.
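A graph memory can be sketched as typed edges between entities plus a multi-hop traversal. The `GraphMemory` class, the relation names, and the service names below are hypothetical; the breadth-first search finds transitive dependencies that a pure similarity search would not surface.

```python
from collections import defaultdict, deque
from typing import DefaultDict, List, Optional, Tuple


class GraphMemory:
    """Hypothetical entity graph: nodes are entities, edges are typed relations."""

    def __init__(self) -> None:
        self._edges: DefaultDict[str, List[Tuple[str, str]]] = defaultdict(list)

    def relate(self, src: str, relation: str, dst: str) -> None:
        self._edges[src].append((relation, dst))

    def neighbors(self, node: str, relation: Optional[str] = None) -> List[str]:
        # .get avoids inserting empty entries into the defaultdict on lookups.
        return [dst for rel, dst in self._edges.get(node, []) if relation is None or rel == relation]

    def reachable(self, start: str, relation: str, max_hops: int = 3) -> List[str]:
        """Breadth-first, multi-hop traversal along one relation type."""
        seen = {start}
        order: List[str] = []
        queue = deque([(start, 0)])
        while queue:
            node, depth = queue.popleft()
            if depth == max_hops:
                continue
            for nxt in self.neighbors(node, relation):
                if nxt not in seen:
                    seen.add(nxt)
                    order.append(nxt)
                    queue.append((nxt, depth + 1))
        return order


g = GraphMemory()
g.relate("Checkout service", "depends_on", "Payments API")
g.relate("Payments API", "depends_on", "Fraud model")
g.relate("Fraud model", "depends_on", "Feature store")
g.relate("Payments API", "owned_by", "Team Atlas")

deps = g.reachable("Checkout service", "depends_on")
print(deps)  # ['Payments API', 'Fraud model', 'Feature store']
```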

3. Hybrid Memory Systems

Hybrid memory systems combine vector search, structured metadata, and rule-based logic into a single architecture. Metadata filters narrow the candidate set before similarity search runs, and rules handle cases that similarity alone gets wrong, which can improve accuracy, speed up retrieval, and reduce cost. This is why many teams choose a hybrid approach for production AI agents in 2026.
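The three ingredients of a hybrid system can be combined in one scoring function. In this sketch, which assumes hypothetical names and data, a metadata filter plays the role of a structured query, Jaccard word overlap stands in for vector similarity, and a single illustrative rule boosts records marked as pinned.

```python
import re
from dataclasses import dataclass, field
from typing import Dict, List


def words(text: str) -> set:
    return set(re.findall(r"[a-z]+", text.lower()))


def similarity(a: str, b: str) -> float:
    """Jaccard word overlap: a stand-in for vector similarity from an embedding model."""
    wa, wb = words(a), words(b)
    union = wa | wb
    return len(wa & wb) / len(union) if union else 0.0


@dataclass
class Record:
    text: str
    meta: Dict[str, object] = field(default_factory=dict)


def hybrid_search(records: List[Record], query: str, filters: Dict[str, object],
                  k: int = 3, pinned_boost: float = 0.2) -> List[Record]:
    # 1. Structured metadata filter (exact match), as a database WHERE clause would do.
    candidates = [r for r in records if all(r.meta.get(key) == val for key, val in filters.items())]

    # 2. Similarity score plus 3. a rule-based adjustment (hypothetical rule: boost pinned records).
    def score(r: Record) -> float:
        return similarity(query, r.text) + (pinned_boost if r.meta.get("pinned") else 0.0)

    return sorted(candidates, key=score, reverse=True)[:k]


records = [
    Record("Customer prefers phone calls over email", {"user": "c7", "type": "preference"}),
    Record("Customer prefers email over phone calls", {"user": "c9", "type": "preference"}),
    Record("Escalate any billing dispute to finance", {"user": "c7", "type": "rule", "pinned": True}),
    Record("Customer disputed a billing charge in May", {"user": "c7", "type": "episode"}),
]
results = hybrid_search(records, "billing dispute with customer", {"user": "c7"}, k=2)
for r in results:
    print(r.text)
```

Here the pinned escalation rule scores 2/8 + 0.2 = 0.45 and outranks the billing episode at 2/9 (about 0.22), while the other customer's record never enters the ranking.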

How to Build a Memory-Based AI Agent: Step-by-Step Process in 2026

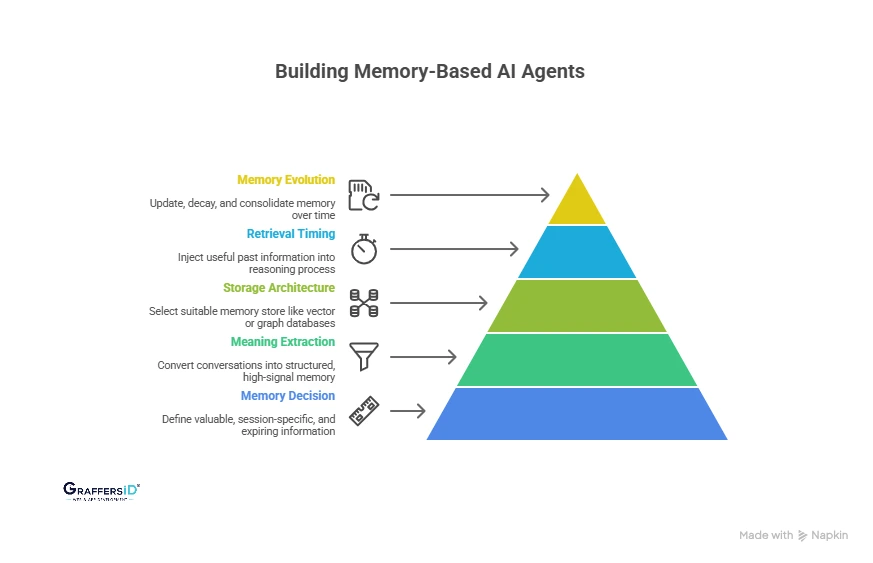

Step 1: Decide What the AI Agent Should Remember

Effective AI agents do not store everything. Define what information delivers long-term value, what is only useful within a session, and what should automatically expire to avoid memory overload.
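A retention policy like the one this step describes can be written down as data. The categories, durations, and the `should_keep` helper below are hypothetical examples for a support agent; the point is that every category declares up front whether it is long-term or session-only and whether it expires.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass(frozen=True)
class RetentionRule:
    scope: str                       # "long_term" or "session"
    ttl: Optional[timedelta] = None  # None means keep until explicitly deleted


# Hypothetical policy for a support agent; categories and durations are examples only.
POLICY = {
    "user_preference": RetentionRule("long_term"),
    "resolved_ticket": RetentionRule("long_term", ttl=timedelta(days=365)),
    "current_draft": RetentionRule("session"),
    "search_results": RetentionRule("session", ttl=timedelta(minutes=30)),
}


def should_keep(category: str, created_at: datetime, now: datetime, session_active: bool) -> bool:
    rule = POLICY.get(category)
    if rule is None:
        return False  # categories the policy does not name are never stored
    if rule.scope == "session" and not session_active:
        return False  # session-only memory ends with the session
    if rule.ttl is not None and now - created_at > rule.ttl:
        return False  # expired
    return True


now = datetime(2026, 3, 1, 12, 0)
print(should_keep("user_preference", datetime(2024, 1, 1), now, session_active=False))  # True
print(should_keep("search_results", now - timedelta(minutes=45), now, session_active=True))  # False
```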

Step 2: Extract Meaningful Memory From Interactions

AI agents should convert raw conversations into structured memory using summarization, entity recognition, and relevance scoring. Only high-signal information should be stored to keep the memory accurate and useful.
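The sketch below illustrates the extract, score, and filter pattern with regular expressions. This is an assumption made to keep the example self-contained: the patterns and scores are hypothetical, and a production system would more likely use an LLM or a named-entity recognition model for summarization and relevance scoring.

```python
import re
from dataclasses import dataclass
from typing import List


@dataclass
class MemoryCandidate:
    text: str
    kind: str
    score: float  # hypothetical relevance score in 0..1


# Hypothetical signal patterns, checked in order; the first match labels the turn.
PATTERNS = [
    (re.compile(r"\bI (?:prefer|like|want)\b", re.IGNORECASE), "preference", 0.9),
    (re.compile(r"\b(?:my|our) (?:name|company|role) is\b", re.IGNORECASE), "profile", 0.85),
    (re.compile(r"\b(?:we decided|let's go with|approved)\b", re.IGNORECASE), "decision", 0.8),
    (re.compile(r"\b(?:hi|hello|thanks|thank you)\b", re.IGNORECASE), "small_talk", 0.1),
]


def extract_memories(turns: List[str], threshold: float = 0.7) -> List[MemoryCandidate]:
    candidates = []
    for turn in turns:
        for pattern, kind, score in PATTERNS:
            if pattern.search(turn):
                candidates.append(MemoryCandidate(turn.strip(), kind, score))
                break  # one label per turn keeps the store compact
    # Keep only high-signal candidates.
    return [c for c in candidates if c.score >= threshold]


conversation = [
    "Hi there!",
    "I prefer invoices in PDF format, thanks.",
    "What's the weather like today?",
    "Let's go with the annual plan.",
]
kept = extract_memories(conversation)
for c in kept:
    print(c.kind, c.score, c.text)
```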

Step 3: Choose the Right Memory Storage Architecture

Select a memory store that fits your use case, such as vector databases for semantic recall, graph databases for relationships, or hybrid systems for production-scale agents. Enrich memory with metadata to support precise retrieval.
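Whatever backend you choose, it helps to give every memory the same metadata-enriched shape. The `MemoryRecord` fields below are a hypothetical example, not a standard schema; they serialize to JSON so the same record can be stored in a document store or attached as metadata to a vector entry.

```python
import json
import uuid
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import List


@dataclass
class MemoryRecord:
    user_id: str
    content: str
    kind: str    # e.g. "preference", "episode", "fact"
    source: str  # where the memory came from, e.g. a conversation or CRM record
    confidence: float = 1.0
    tags: List[str] = field(default_factory=list)
    created_at: str = field(default_factory=lambda: datetime.now(timezone.utc).isoformat())
    memory_id: str = field(default_factory=lambda: uuid.uuid4().hex)

    def to_json(self) -> str:
        return json.dumps(asdict(self))

    @classmethod
    def from_json(cls, raw: str) -> "MemoryRecord":
        return cls(**json.loads(raw))


record = MemoryRecord("u1", "Prefers PDF invoices", "preference", "chat", confidence=0.9, tags=["billing"])
print(record.to_json())
```

Fields such as `kind`, `tags`, and `created_at` are what make filtered queries like "preferences for user u1 from the last 90 days" possible.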

Step 4: Retrieve Memory at the Right Moment

Memory retrieval should be context-aware, relevance-ranked, and token-efficient. The goal is to inject only the most useful past information into the agent’s reasoning process, not overwhelm the prompt.
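Token-efficient retrieval can be sketched as ranking by relevance and then greedily packing memories into a fixed budget. The `approx_tokens` helper counts words, which is only a rough assumption; real systems should count tokens with the target model's tokenizer. The relevance scores and the `min_relevance` floor are illustrative.

```python
from typing import List, Tuple


def approx_tokens(text: str) -> int:
    # Rough assumption: one token per whitespace-separated word.
    return len(text.split())


def select_for_prompt(scored: List[Tuple[float, str]], budget: int, min_relevance: float = 0.3) -> List[str]:
    """Keep the most relevant memories that fit within a token budget."""
    chosen, used = [], 0
    for relevance, text in sorted(scored, key=lambda item: item[0], reverse=True):
        if relevance < min_relevance:
            break  # sorted descending, so everything after this is less relevant
        cost = approx_tokens(text)
        if used + cost <= budget:
            chosen.append(text)
            used += cost
    return chosen


memories = [
    (0.92, "User is migrating from MySQL to PostgreSQL"),
    (0.85, "User prefers step-by-step answers with code samples"),
    (0.40, "User mentioned a vacation in Lisbon last spring and asked about weather there"),
    (0.15, "User once asked about emoji support"),
]
print(select_for_prompt(memories, budget=16))
```

With a 16-word budget, the two most relevant memories (7 words each) fit and the 13-word vacation note is skipped; the emoji note falls below the relevance floor regardless of budget.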

Step 5: Continuously Update, Decay, and Forget Memory

AI agent memory must evolve over time. Use decay, confidence weighting, and consolidation to keep memory fresh, accurate, and aligned with current behavior; forgetting is essential for long-term reliability.
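Decay and forgetting can be modeled with an exponential half-life applied to each memory's confidence. The half-life, threshold, and `Memory` fields below are hypothetical tuning choices; retrieving or confirming a memory resets its clock, so frequently used memories stay strong.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Memory:
    text: str
    confidence: float     # 0..1: how sure the agent is that this memory is correct
    last_used_day: float  # day number when the memory was last retrieved or confirmed


def strength(m: Memory, today: float, half_life_days: float = 30.0) -> float:
    """Confidence-weighted exponential decay: strength halves every half_life_days."""
    age = max(0.0, today - m.last_used_day)
    return m.confidence * 0.5 ** (age / half_life_days)


def reinforce(m: Memory, today: float) -> None:
    m.last_used_day = today  # using or confirming a memory resets its decay clock


def forget_weak(memories: List[Memory], today: float, threshold: float = 0.2) -> List[Memory]:
    return [m for m in memories if strength(m, today) >= threshold]


store = [
    Memory("Prefers dark mode", confidence=0.9, last_used_day=95),
    Memory("Was evaluating vendor X", confidence=0.8, last_used_day=10),
    Memory("Might work in finance", confidence=0.3, last_used_day=70),
]
kept = forget_weak(store, today=100)
print([m.text for m in kept])

reinforce(store[1], today=100)  # the user brings vendor X up again
kept_after = forget_weak(store, today=100)
```

With a 30-day half-life, a 0.8-confidence memory untouched for 90 days decays to 0.8 × 0.5³ = 0.1 and is dropped at a 0.2 threshold, unless it is reinforced first.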

Tools and Frameworks for AI Agent Memory in 2026

Popular choices include:

- Agent frameworks with memory abstractions

- Vector databases

- Custom memory orchestration layers

The trend in 2026 is memory-as-a-service, abstracted from the agent logic.
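Abstracting memory from agent logic can be sketched with a `typing.Protocol`. The `MemoryService` interface and its three methods are hypothetical, not the API of any particular product; the in-memory implementation is for local testing, and a hosted service could satisfy the same interface.

```python
import re
from typing import Dict, List, Protocol


def _words(text: str) -> set:
    return set(re.findall(r"[a-z]+", text.lower()))


class MemoryService(Protocol):
    """Hypothetical interface the agent depends on; any backend can implement it."""

    def add(self, user_id: str, text: str) -> None: ...

    def search(self, user_id: str, query: str, limit: int = 5) -> List[str]: ...

    def delete_user(self, user_id: str) -> int: ...


class InMemoryService:
    """Local implementation for development and tests (word-overlap search)."""

    def __init__(self) -> None:
        self._data: Dict[str, List[str]] = {}

    def add(self, user_id: str, text: str) -> None:
        self._data.setdefault(user_id, []).append(text)

    def search(self, user_id: str, query: str, limit: int = 5) -> List[str]:
        q = _words(query)
        return [t for t in self._data.get(user_id, []) if q & _words(t)][:limit]

    def delete_user(self, user_id: str) -> int:
        return len(self._data.pop(user_id, []))


def build_context(memory: MemoryService, user_id: str, question: str) -> str:
    # Agent logic only sees the interface, not the storage behind it.
    notes = memory.search(user_id, question, limit=3)
    return "\n".join([f"Context: {n}" for n in notes] + [f"Question: {question}"])


service = InMemoryService()
service.add("u1", "Team runs Kubernetes on AWS")
service.add("u1", "Prefers concise answers")
ctx = build_context(service, "u1", "How do I scale pods on Kubernetes?")
print(ctx)
removed = service.delete_user("u1")
```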

Real-World Use Cases of Memory-Based AI Agents

- Customer Support AI Agents: Memory-enabled support agents retain customer history, past issues, and preferences across sessions. This eliminates repeated explanations, speeds up resolution times, and consistently improves CSAT and customer trust.

- Personal AI Assistants: Personal AI assistants use memory to learn user habits, priorities, and workflows over time. This allows them to deliver increasingly personalized recommendations and measurable productivity improvements.

- Developer and Engineering AI Agents: Engineering-focused AI agents remember project context, codebase structure, and past decisions. This helps maintain coding standards, reduce context loss, and ensure architectural consistency across long-term projects.

- Autonomous Business Workflow AI Agents: Memory-driven workflow agents manage multi-day or multi-week tasks without losing progress. They intelligently resume work, adapt to changes, and significantly reduce the need for manual oversight.

Conclusion: Why Is AI Agent Memory the Core of Intelligent Automation?

In 2026, AI agents without memory are no longer competitive; they are incomplete systems. Without the ability to remember, learn, and adapt, even the most advanced language models fail to deliver consistent business value.

AI agent memory is what enables intelligent automation at scale.

It provides the foundation for:

- Continuity, by maintaining context across sessions and workflows

- Learning, by improving decisions based on past interactions

- Trust, through consistent, predictable behavior

- Scalability, by supporting long-running, autonomous operations

Whether you are building customer support agents, internal AI copilots, or autonomous business workflows, memory is the layer that transforms AI from reactive responses into reliable, outcome-driven systems.

Build Memory-Driven AI Agents with GraffersID

At GraffersID, we help businesses design and deploy production-ready AI agents that go beyond demos and scale in real-world environments. Our expertise includes:

- Persistent and secure AI agent memory architectures

- Custom AI automation solutions tailored to business workflows

- Scalable web and mobile app development

If you’re planning to build stateful, intelligent AI agents in 2026, our team can help you move from concept to deployment faster, smarter, and with long-term scalability in mind.

Contact GraffersID for building custom AI agents.