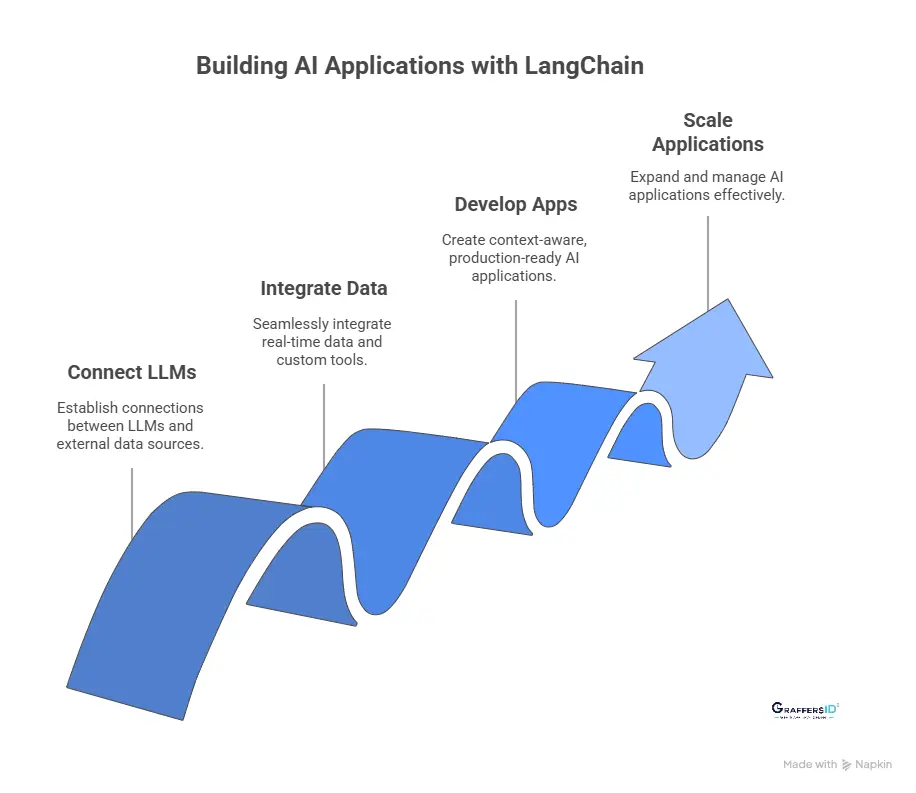

AI development in 2026 is no longer about just experimenting with large language models; it’s about building real, scalable applications that solve business problems fast. At the center of this transformation is LangChain, the open-source framework that’s become a game-changer for developers and enterprises worldwide.

With 51,000+ GitHub stars and 1M+ monthly downloads, LangChain is one of the fastest-growing AI frameworks today. Its power lies in making LLMs more useful by connecting them with databases, APIs, tools, and enterprise workflows, turning raw AI capability into production-ready solutions. The result? Teams are building AI apps 10x faster, from intelligent chatbots to enterprise copilots and retrieval-augmented generation (RAG) systems.

In this guide, we’ll dive into LangChain’s 2026 architecture, core building blocks, agents, memory systems, RAG workflows, LangGraph orchestration, and real-world use cases, giving you a complete playbook on why it continues to dominate AI development.

What is LangChain in AI Development?

LangChain is an open-source framework that helps developers build, scale, and manage applications powered by large language models (LLMs). It provides an easy way to connect LLMs with databases, APIs, and real-time workflows, making AI applications smarter and more useful in real-world scenarios.

- Available in: Python and JavaScript

- Core role: Acts as a bridge between LLMs and external tools/data sources.

- Founded: October 2022 by Harrison Chase

- Growth milestone: By mid-2023, it became GitHub’s fastest-growing project.

Traditional LLMs have limitations; they don’t have real-time data access and often struggle with domain-specific knowledge. LangChain solves these challenges by integrating LLMs with live data sources, custom tools, and external processing systems, enabling developers to create context-aware, production-ready AI apps that go beyond static responses.

What is LangChain Architecture and How Does It Work?

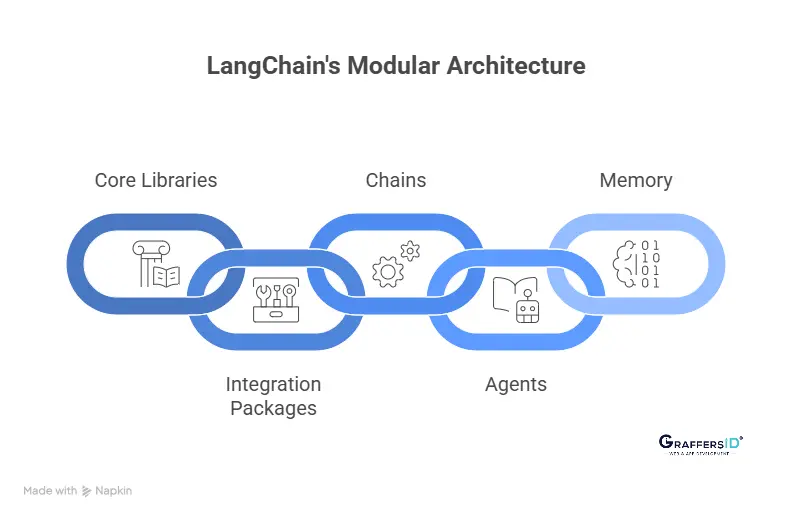

LangChain’s architecture is modular and chain-based, which means developers can easily plug in only the components they need. This flexibility makes it one of the most popular frameworks for building LLM-powered applications in 2026.

Core Libraries in LangChain

- langchain-core: Provides the base abstractions for chat and language models.

- Integration Packages: Lightweight add-ons for external APIs, tools, and services.

- langchain: Contains chains, agents, and retrieval strategies.

- langchain-community: Community-driven integrations that extend functionality.

- langgraph: An orchestration framework for production-ready AI applications.

Chains

Chains are sequences of steps where the output of one component feeds into the next. They break down complex workflows into smaller, manageable tasks, helping teams build reliable AI pipelines.
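
As a rough illustration of the chaining idea, the sketch below composes three plain Python functions so that each step receives the previous step's output. It deliberately avoids LangChain itself: `make_chain`, `build_prompt`, `fake_model`, and `parse_output` are hypothetical names, and `fake_model` is a stub standing in for a real LLM call.

```python
from functools import reduce
from typing import Callable

Step = Callable[[str], str]

def make_chain(*steps: Step) -> Step:
    """Compose steps left to right: each step receives the previous output."""
    def run(text: str) -> str:
        return reduce(lambda value, step: step(value), steps, text)
    return run

# Hypothetical steps standing in for a prompt, a model call, and a parser.
def build_prompt(topic: str) -> str:
    return f"Summarize: {topic}"

def fake_model(prompt: str) -> str:
    # A real chain would call an LLM here; this stub only wraps the prompt.
    return f"[model output for '{prompt}']"

def parse_output(raw: str) -> str:
    # Remove the surrounding brackets added by the stub model.
    return raw.strip("[]")

chain = make_chain(build_prompt, fake_model, parse_output)
print(chain("LangChain"))
```

Because every step has the same shape (text in, text out), steps can be reordered, replaced, or tested in isolation, which is the property that makes chained pipelines easier to maintain.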

Agents

Agents act as autonomous decision-makers within LangChain. They can:

- Perform multi-step reasoning

- Retrieve data when needed

- Call external tools dynamically

- Maintain conversation context for more natural interactions

Memory

LangChain’s memory modules allow AI systems to retain context across interactions, making conversations more human-like and useful for long-running applications.

LangChain vs. Traditional LLM Integration: Key Comparison 2026

| Feature | Traditional LLM Integration | LangChain Integration |
|---|---|---|
| Model Switching | Requires complex, manual code updates | Switch models easily (OpenAI, Anthropic, Hugging Face, Azure) |
| Development Speed | Slower, more boilerplate code | Faster with reusable components and templates |
| Workflow Flexibility | Limited customization | Highly flexible with Chains, Agents, and Tools |
| Memory | Basic or non-existent | Advanced options like Buffer, Summary, and Hybrid Memory |
| Monitoring & Analytics | Strong with MLflow, Airflow | Basic today but improving rapidly |

LangChain doesn’t replace ML or analytics platforms; it complements them. Many enterprises in 2026 use LangChain for dynamic AI tasks while relying on Airflow or MLflow for monitoring and analytics.

Key Building Blocks of LangChain (2026)

To understand how LangChain powers modern AI applications, you need to know its core components. These building blocks make it easier for developers to connect large language models (LLMs) with real-world data, workflows, and applications.

1. LLM Interface and Model Abstraction

LangChain provides a simple interface so developers don’t have to write model-specific code. Instead of worrying about APIs or response parsing, you can plug in any LLM and start building.

Example:

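    from langchain_openai import ChatOpenAI

    # Requires the OPENAI_API_KEY environment variable to be set.
    model = ChatOpenAI(model="gpt-3.5-turbo")

    # invoke() replaces the deprecated predict(); the reply text is in response.content.
    response = model.invoke("Explain LangChain in 2 lines.")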

- Handles API calls, input formatting, and parsing outputs

- Enables model switching with one line of code

- Supports tuning parameters like temperature for creativity
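
To make the "switch models with one line" point concrete, here is a minimal sketch of the underlying idea: application code depends on a shared interface rather than on a specific provider. The classes `FakeOpenAIModel` and `FakeAnthropicModel` are hypothetical stubs written for this example, not LangChain classes, and they return canned strings instead of calling any API.

```python
from typing import Protocol

class ChatModel(Protocol):
    """The only thing application code needs to know about a model."""
    def invoke(self, prompt: str) -> str: ...

class FakeOpenAIModel:
    def __init__(self, temperature: float = 0.0) -> None:
        self.temperature = temperature

    def invoke(self, prompt: str) -> str:
        return f"openai-stub(t={self.temperature}): {prompt}"

class FakeAnthropicModel:
    def __init__(self, temperature: float = 0.0) -> None:
        self.temperature = temperature

    def invoke(self, prompt: str) -> str:
        return f"anthropic-stub(t={self.temperature}): {prompt}"

def explain(model: ChatModel, topic: str) -> str:
    # Application logic is written once, against the shared interface.
    return model.invoke(f"Explain {topic} in 2 lines.")

model: ChatModel = FakeOpenAIModel(temperature=0.7)
print(explain(model, "LangChain"))

model = FakeAnthropicModel()  # the one-line switch
print(explain(model, "LangChain"))
```

The `explain` function never changes when the provider does, which is the property a model abstraction layer is meant to give you.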

2. Prompt Templates

Prompt engineering becomes easier with LangChain’s Prompt Templates, which help you create structured, reusable prompts.

    from langchain_core.prompts import PromptTemplate

    template = "Give me 3 AI trends for {year}."
    prompt_template = PromptTemplate.from_template(template)

    # Prints: Give me 3 AI trends for 2026.
    print(prompt_template.format(year="2026"))

- Keeps prompts modular & reusable

- Supports variables, Jinja2, and f-string formats

- Improves consistency across AI workflows
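
For intuition about what a template object adds over a bare f-string, the following sketch tracks its own input variables and fails early when one is missing. `SimpleTemplate` is a hypothetical class built on the standard library for illustration; it is not LangChain's `PromptTemplate` and covers only the f-string style.

```python
from string import Formatter

class SimpleTemplate:
    def __init__(self, template: str) -> None:
        self.template = template
        # Collect the {placeholders} so missing values can be reported early.
        self.input_variables = sorted(
            {name for _, name, _, _ in Formatter().parse(template) if name}
        )

    def format(self, **values: str) -> str:
        missing = [name for name in self.input_variables if name not in values]
        if missing:
            raise KeyError(f"Missing variables: {missing}")
        return self.template.format(**values)

trends = SimpleTemplate("Give me {count} AI trends for {year}.")
print(trends.input_variables)
print(trends.format(count="3", year="2026"))
```

Knowing the variable names up front is what lets a framework validate inputs before any tokens are spent on a model call.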

3. Chains

Chains allow developers to build multi-step AI workflows by linking prompts and models together. Instead of a single query-response interaction, you can create complex pipelines.

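    # LLMChain and run() are legacy APIs; current releases compose steps with the | operator.
    chain_example = prompt_template | model

    # Pass the template variables as a dict; the prompt defined earlier expects "year".
    result = chain_example.invoke({"year": "2026"})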

- Automates multi-step reasoning

- Supports running multiple steps in sequence, through the legacy SimpleSequentialChain or the | pipe operator in current releases

- Makes AI workflows scalable for real-world applications

LangChain Agents: How They Make AI Tools Smarter in 2026

LangChain Agents give large language models (LLMs) the ability to decide which tools to use, when to act, and how to complete a task. Instead of being limited to static prompts, agents let LLMs dynamically reason, choose an action, and deliver better results.

Agent Strategies in LangChain

| Strategy | Strength | Weakness | Best Use |
|---|---|---|---|
| ReAct | Adaptive, flexible | Token-heavy, slower | Conversational AI, document Q&A |
| ReWOO | Faster, token-efficient | Less adaptive | Batch tasks, compliance checks |

- ReAct works in a loop of reasoning, acting, and observing results. This makes it well suited to interactive use cases, but slower because of higher token usage.

- ReWOO plans the entire workflow upfront, making it faster and cheaper but less flexible when conditions change.
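
The difference between the two strategies can be sketched in plain Python. This is a toy model of the control flow only, under stated assumptions: `ScriptedModel` is a hypothetical stand-in that returns canned replies instead of calling an LLM, the two tools are invented for the example, and the `#n` placeholder syntax for referring to earlier results is an illustrative convention.

```python
from typing import Any, Callable

# Hypothetical tools used only for this illustration.
PRICES = {"widget": "4", "gadget": "10"}
TOOLS: dict[str, Callable[[str], str]] = {
    "lookup_price": lambda item: PRICES[item],
    "add": lambda expr: str(sum(int(part) for part in expr.split("+"))),
}

class ScriptedModel:
    """Stands in for an LLM: returns canned replies and counts its calls."""

    def __init__(self, replies: list[Any]) -> None:
        self.replies = list(replies)
        self.calls = 0

    def __call__(self, context: str) -> Any:
        self.calls += 1
        return self.replies.pop(0)

def react(model: ScriptedModel, question: str, max_steps: int = 5) -> str:
    """ReAct: reason, act, observe, repeating with one model call per step."""
    context = question
    for _ in range(max_steps):
        kind, *payload = model(context)
        if kind == "final":
            return payload[0]
        tool_name, arg = payload
        observation = TOOLS[tool_name](arg)
        # The growing context is what makes ReAct token-heavy.
        context += f"\n{tool_name}({arg}) -> {observation}"
    raise RuntimeError("step limit reached without a final answer")

def rewoo(model: ScriptedModel, question: str) -> str:
    """ReWOO: plan every tool call up front, run them, then answer once."""
    plan = model(question)  # list of (tool, arg); "#n" refers to the n-th result
    evidence: list[str] = []
    for tool_name, arg in plan:
        # Substitute the highest index first so "#10" is not mistaken for "#1".
        for i in range(len(evidence), 0, -1):
            arg = arg.replace(f"#{i}", evidence[i - 1])
        evidence.append(TOOLS[tool_name](arg))
    return model(f"{question}\nEvidence: {evidence}")

question = "What do a widget and a gadget cost together?"
react_model = ScriptedModel([
    ("act", "lookup_price", "widget"),
    ("act", "lookup_price", "gadget"),
    ("act", "add", "4+10"),
    ("final", "14"),
])
rewoo_model = ScriptedModel([
    [("lookup_price", "widget"), ("lookup_price", "gadget"), ("add", "#1+#2")],
    "14",
])
print(react(react_model, question), react_model.calls)
print(rewoo(rewoo_model, question), rewoo_model.calls)
```

In this toy run both strategies reach the same answer, but the ReAct loop calls the model four times while ReWOO calls it twice (once to plan, once to answer). The trade-off is that the ReWOO plan cannot react to an unexpected tool result.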

Tool Calling (with bind_tools())

One of the most powerful features of LangChain agents is tool calling, where LLMs can directly use external functions or APIs.

Example:

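    from langchain.tools import tool
    from langchain_openai import ChatOpenAI

    @tool
    def multiply(a: int, b: int) -> int:
        """Multiply two integers."""  # @tool uses the docstring as the tool description
        return a * b

    llm = ChatOpenAI(model="gpt-3.5-turbo")  # any chat model that supports tool calling
    tools = [multiply]
    llm_with_tools = llm.bind_tools(tools)

    result = llm_with_tools.invoke("What is 2 * 3?")
    print(result.tool_calls)  # the tool call(s) the model requested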

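In this example, the model does not run multiply itself. It responds with a request to call the tool, including the arguments, which is available in result.tool_calls; an agent or your own code then executes the tool and returns the output to the model.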
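
Executing a requested tool call can be sketched as a small dispatcher that looks up each tool by name and passes in the arguments. The code below is framework-free: `TOOL_REGISTRY` and `run_tool_calls` are hypothetical names, and the request shape (a dict with `name`, `args`, and `id`) is an assumption modeled on how tool calls are commonly represented.

```python
from typing import Any, Callable

def multiply(a: int, b: int) -> int:
    """Multiply two integers."""
    return a * b

# Maps the tool name the model uses to the Python function that implements it.
TOOL_REGISTRY: dict[str, Callable[..., Any]] = {"multiply": multiply}

def run_tool_calls(tool_calls: list[dict[str, Any]]) -> list[dict[str, Any]]:
    """Execute each requested call and pair the output with the call's id."""
    results = []
    for call in tool_calls:
        func = TOOL_REGISTRY.get(call["name"])
        if func is None:
            output = f"Unknown tool: {call['name']}"
        else:
            output = func(**call["args"])
        results.append({"tool_call_id": call["id"], "content": str(output)})
    return results

# Assumed shape of a model's tool-call request.
requested = [{"name": "multiply", "args": {"a": 2, "b": 3}, "id": "call_1"}]
print(run_tool_calls(requested))
```

The results would normally be sent back to the model as tool messages so it can phrase the final answer; keeping the call id alongside each output lets the model match results to requests.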

Memory Management in LangChain

LangChain offers multiple memory types, each with specific strengths, limitations, and ideal use cases:

| Memory Type | Strength | Weakness | Best Use |
|---|---|---|---|
| ConversationBufferMemory | Retains full conversation history for maximum context | Consumes tokens quickly, not scalable | Best for short chats and quick prototypes |
| ConversationSummaryMemory | Summarizes conversations for token efficiency | May lose fine-grained details | Works well for long conversations and chatbots |
| Summary Buffer Memory | Offers a balance between full context and token usage | Requires more setup and tuning | Ideal for production AI apps that need context without high costs |
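
The trade-offs in the table can be seen in a small framework-free sketch. The classes below are hypothetical simplifications, not LangChain's memory classes: word counts stand in for token counts, and `first_words_summarizer` is a crude placeholder for an LLM-generated summary.

```python
from typing import Callable

def word_count(messages: list[str]) -> int:
    # Word count is a rough stand-in for token count.
    return sum(len(message.split()) for message in messages)

class BufferMemory:
    """Keeps every message verbatim: full context, unbounded growth."""

    def __init__(self) -> None:
        self.messages: list[str] = []

    def add(self, message: str) -> None:
        self.messages.append(message)

    def context(self) -> str:
        return "\n".join(self.messages)

class SummaryBufferMemory:
    """Keeps recent messages verbatim and folds older ones into a summary."""

    def __init__(self, summarize: Callable[[str, str], str], max_words: int) -> None:
        self.summarize = summarize
        self.max_words = max_words
        self.summary = ""
        self.recent: list[str] = []

    def add(self, message: str) -> None:
        self.recent.append(message)
        # Fold the oldest messages into the summary until the buffer fits.
        while word_count(self.recent) > self.max_words and len(self.recent) > 1:
            oldest = self.recent.pop(0)
            self.summary = self.summarize(self.summary, oldest)

    def context(self) -> str:
        header = [f"Summary: {self.summary}"] if self.summary else []
        return "\n".join(header + self.recent)

def first_words_summarizer(summary: str, message: str) -> str:
    # Hypothetical stand-in for an LLM summarizer: keep the first 3 words.
    clipped = " ".join(message.split()[:3])
    return f"{summary}; {clipped}" if summary else clipped

turns = [
    "User: my order 1234 arrived damaged yesterday",
    "AI: sorry to hear that, I can arrange a replacement",
    "User: yes please send a replacement",
]
buffer = BufferMemory()
hybrid = SummaryBufferMemory(first_words_summarizer, max_words=12)
for turn in turns:
    buffer.add(turn)
    hybrid.add(turn)

print(word_count(buffer.messages))
print(hybrid.context())
```

The buffer keeps all 23 words, while the hybrid memory keeps only the latest turn verbatim plus a short summary. Note that the order number from the first turn does not survive summarization, which is the "may lose fine-grained details" weakness in practice.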

Persistence Options in LangChain Memory (2026)

- FileSystemCheckpointer: Lightweight option for local projects.

- SQLite / JSON storage: Structured and portable storage for scaling apps.

- LangGraph persistence: Advanced memory persistence designed for complex, production-grade AI workflows.
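
To show what SQLite-backed persistence involves at its simplest, the sketch below stores chat messages as JSON rows keyed by a conversation id, using only the standard library. `SqliteChatStore` and its table layout are assumptions made for this example and do not reflect how LangChain or LangGraph store state internally.

```python
import json
import sqlite3

class SqliteChatStore:
    """Persists chat messages per conversation (thread) in SQLite."""

    def __init__(self, path: str = ":memory:") -> None:
        # Pass a file path instead of ":memory:" to survive restarts.
        self.conn = sqlite3.connect(path)
        self.conn.execute(
            "CREATE TABLE IF NOT EXISTS messages ("
            "id INTEGER PRIMARY KEY AUTOINCREMENT, "
            "thread_id TEXT NOT NULL, "
            "payload TEXT NOT NULL)"
        )

    def append(self, thread_id: str, role: str, content: str) -> None:
        payload = json.dumps({"role": role, "content": content})
        with self.conn:  # commits on success, rolls back on error
            self.conn.execute(
                "INSERT INTO messages (thread_id, payload) VALUES (?, ?)",
                (thread_id, payload),
            )

    def history(self, thread_id: str) -> list[dict]:
        rows = self.conn.execute(
            "SELECT payload FROM messages WHERE thread_id = ? ORDER BY id",
            (thread_id,),
        )
        return [json.loads(row[0]) for row in rows]

store = SqliteChatStore()
store.append("thread-1", "user", "Hi, I need help with my invoice.")
store.append("thread-1", "assistant", "Sure, what is the invoice number?")
store.append("thread-2", "user", "Unrelated conversation.")
print(store.history("thread-1"))
```

Keying rows by thread id keeps conversations isolated, so one store can serve many users at once.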

How to Use LangChain Integrations and Tools in 2026

Some popular LangChain integrations include:

- Wolfram Alpha: For solving mathematical and scientific queries.

- Google Search: For retrieving the latest real-time information.

- OpenWeatherMap: For accessing live weather data.

- Custom Tools: Define your own functions with @tool and attach them to a model with bind_tools().

Example: Google Search Integration in LangChain (2026)

    from langchain_google_community import GoogleSearchAPIWrapper

    # Requires the GOOGLE_API_KEY and GOOGLE_CSE_ID environment variables.
    search = GoogleSearchAPIWrapper()
    print(search.run("Latest AI trends 2026"))

With just a few lines of code, LangChain lets you query Google Search; exposing that call to an LLM as a tool gives your AI app access to up-to-date information.

How Does LangChain Use RAG (Retrieval-Augmented Generation) to Overcome LLM Limitations?

Large Language Models (LLMs) are powerful, but they struggle with outdated or missing knowledge. Retrieval-Augmented Generation (RAG) solves this by combining LLMs with vector databases and retrievers to deliver more accurate, context-aware answers.

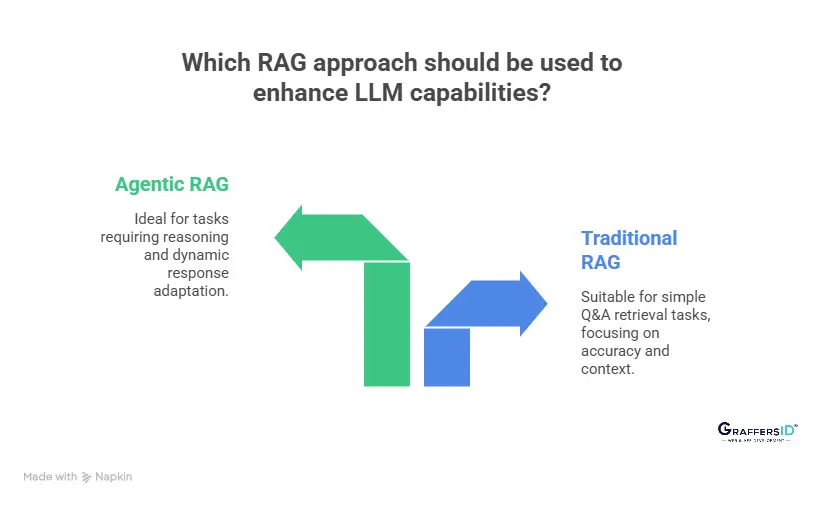

- Traditional RAG: Focuses on simple Q&A retrieval.

- Agentic RAG: Goes a step further, using agents that can reason, refine queries, and adapt responses dynamically.

LangChain supports:

- Vector Stores: Pinecone, Weaviate, FAISS, Milvus

- TextSplitters: For document chunking

- Hybrid Search: Combining keyword search with vector similarity search for higher accuracy
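
The chunking and hybrid-search ideas can be sketched with the standard library alone. Everything here is a simplification under stated assumptions: `split_text` chunks by words rather than tokens, bag-of-words cosine similarity stands in for embedding similarity from a real vector store, and the `alpha` weighting between the two scores is an illustrative choice.

```python
import math
import re
from collections import Counter

def split_text(text: str, chunk_size: int, overlap: int) -> list[str]:
    """Split into word chunks that share `overlap` words with the previous chunk."""
    step = chunk_size - overlap
    if step <= 0:
        raise ValueError("overlap must be smaller than chunk_size")
    words = text.split()
    stop = max(len(words) - overlap, 1)
    return [" ".join(words[i:i + chunk_size]) for i in range(0, stop, step)]

def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z0-9]+", text.lower())

def cosine(a: Counter, b: Counter) -> float:
    # Stand-in for vector similarity; real systems compare embeddings.
    dot = sum(a[term] * b[term] for term in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0

def keyword_score(query: str, doc: str) -> float:
    # Fraction of distinct query terms that appear in the document.
    query_terms, doc_terms = set(tokenize(query)), set(tokenize(doc))
    return len(query_terms & doc_terms) / len(query_terms) if query_terms else 0.0

def hybrid_search(query: str, docs: list[str], alpha: float = 0.5, k: int = 2) -> list[str]:
    """Rank docs by a weighted mix of similarity and keyword overlap."""
    query_vector = Counter(tokenize(query))
    scored = [
        (
            alpha * cosine(query_vector, Counter(tokenize(doc)))
            + (1 - alpha) * keyword_score(query, doc),
            doc,
        )
        for doc in docs
    ]
    ranked = sorted(scored, key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in ranked[:k]]

docs = [
    "LangChain connects LLMs to vector stores",
    "The weather is sunny today",
    "Vector stores hold embeddings for retrieval",
]
print(split_text("a b c d e f g h i j", chunk_size=4, overlap=1))
print(hybrid_search("vector stores embeddings", docs, k=1))
```

In a RAG pipeline, the top-ranked chunks would then be placed into the prompt as context before the model is asked to answer. The overlap between chunks reduces the chance that a sentence split across a boundary loses its meaning.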

What is LangGraph and How Does It Power Multi-Agent AI Orchestration?

LangGraph adds graph-based orchestration for multi-agent workflows.

- Nodes = Agents, Edges = Communication paths

- Supports hierarchical, supervisor, or fully connected agent designs

- Can reach external tools through the Model Context Protocol (MCP), often described as “USB-C for AI tools.”

This makes multi-agent AI ecosystems interoperable with external toolchains.
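
A supervisor-style graph can be sketched in a few lines of plain Python. This is a toy executor, not LangGraph: the `Graph` class, the `END` marker, and the three agent functions are hypothetical, and the method names only loosely echo the nodes-and-edges vocabulary used above.

```python
from typing import Callable

State = dict
Node = Callable[[State], State]
END = "__end__"

class Graph:
    """Nodes transform shared state; routers decide which node runs next."""

    def __init__(self) -> None:
        self.nodes: dict[str, Node] = {}
        self.routers: dict[str, Callable[[State], str]] = {}

    def add_node(self, name: str, node: Node) -> None:
        self.nodes[name] = node

    def add_edge(self, source: str, target: str) -> None:
        self.routers[source] = lambda state: target

    def add_conditional_edge(self, source: str, router: Callable[[State], str]) -> None:
        self.routers[source] = router

    def run(self, start: str, state: State, max_steps: int = 20) -> State:
        current, steps = start, 0
        while current != END:
            if steps >= max_steps:
                raise RuntimeError("step limit reached")
            state = self.nodes[current](state)
            current = self.routers[current](state)
            steps += 1
        return state

# Hypothetical agents; each returns an updated copy of the shared state.
def supervisor(state: State) -> State:
    return {**state, "log": state["log"] + ["supervisor"]}

def researcher(state: State) -> State:
    notes = f"facts about {state['topic']}"
    return {**state, "notes": notes, "log": state["log"] + ["researcher"]}

def writer(state: State) -> State:
    draft = f"Report based on {state['notes']}"
    return {**state, "draft": draft, "log": state["log"] + ["writer"]}

def route(state: State) -> str:
    # The supervisor delegates until both notes and a draft exist.
    if "notes" not in state:
        return "researcher"
    if "draft" not in state:
        return "writer"
    return END

graph = Graph()
graph.add_node("supervisor", supervisor)
graph.add_node("researcher", researcher)
graph.add_node("writer", writer)
graph.add_conditional_edge("supervisor", route)
graph.add_edge("researcher", "supervisor")
graph.add_edge("writer", "supervisor")

final_state = graph.run("supervisor", {"topic": "LangChain", "log": []})
print(final_state["draft"])
print(final_state["log"])
```

Control returns to the supervisor after every worker step, so routing decisions always see the latest shared state. A hierarchical or fully connected design would differ only in which edges are added.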

Practical AI Use Cases of LangChain in 2026

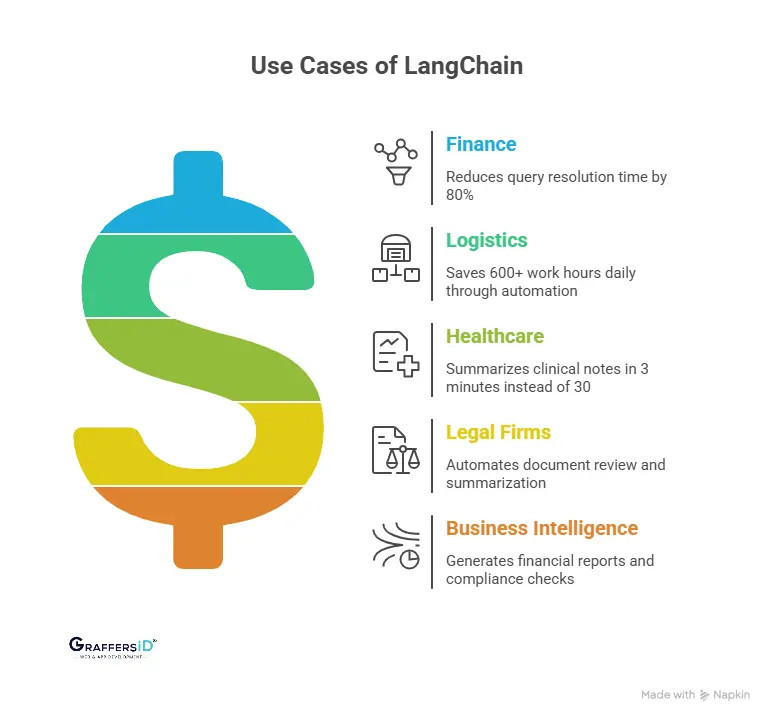

- Finance (Klarna): Reduced customer query resolution time by 80%, improving service speed and satisfaction.

- Logistics: Automated workflows that save 600+ work hours daily, boosting supply chain efficiency.

- Healthcare: Summarizes clinical notes in just 3 minutes instead of 30, helping doctors save time.

- Legal Firms: Automates document review and summarization while keeping legal terminology accurate.

- Business Intelligence: Generates financial reports, compliance checks, and research summaries with AI assistants.

Conclusion

As businesses continue to navigate the fast-evolving digital landscape in 2026, choosing the right approach to development and AI adoption has become a defining factor in long-term growth. Businesses must prioritize strategies that balance cost-efficiency, innovation, and scalability.

The companies that stay ahead are the ones that integrate modern technologies with agile development models while maintaining transparency, security, and adaptability.

At GraffersID, we help startups and enterprises hire remote AI developers in India and build cutting-edge AI-driven solutions. If you want to accelerate your digital transformation journey, contact us today.