Large Language Models have reshaped how businesses adopt AI, but by 2026, enterprise leaders have reached a critical realization: AI is only as good as the knowledge it can reliably access.

Despite their capabilities, standalone LLMs continue to face fundamental challenges. They rely on static training data, struggle to stay current, and can generate confident yet incorrect answers. For organizations operating in regulated, data-driven, or customer-facing environments, this leads to real risks: misinformation, a lack of traceability, and limited enterprise context.

This is exactly where Retrieval-Augmented Generation (RAG) becomes a foundational AI architecture.

RAG enables AI systems to retrieve verified, up-to-date information at the moment a query is made and use that data to generate responses that are accurate, explainable, and aligned with business reality.

In this guide, we break down what Retrieval-Augmented Generation is, how it works, and why it has become essential for building scalable, reliable, and future-ready AI systems.

What is Retrieval-Augmented Generation (RAG) in AI?

Retrieval-Augmented Generation (RAG) is an AI approach that combines information retrieval with large language models to generate responses based on real, external, and up-to-date data rather than relying only on what the AI model learned during training.

Instead of generating answers from static knowledge, RAG enables AI systems to look up relevant information at the moment a question is asked and use that information to produce accurate, context-aware, and verifiable responses.

In simple terms, a RAG-based AI system works in two clear steps:

- First, the AI retrieves relevant information from trusted data sources such as documents, databases, or internal knowledge bases.
- Then, the AI uses the retrieved data to generate a meaningful and fact-based answer.

This process ensures that responses are grounded in real knowledge, not assumptions or outdated training data.


Why Is Retrieval-Augmented Generation Used in Modern AI Systems?

Traditional large language models generate responses based solely on patterns learned during training. While powerful, this approach has clear limitations in real-world enterprise use.

Retrieval-Augmented Generation exists to overcome these challenges by:

- Connecting AI models to live data sources such as company documents, APIs, internal databases, and knowledge repositories.
- Ensuring responses are current and accurate, even when information changes frequently.
- Providing domain-specific answers tailored to an organization’s internal data.
- Reducing hallucinations and misinformation by grounding outputs in verified sources.
- Improving transparency and trust, which is critical for compliance-driven industries.

As AI adoption matures in 2026, RAG has become the preferred architecture for enterprises that need reliable, explainable, and business-ready AI systems rather than generic, standalone chatbots.

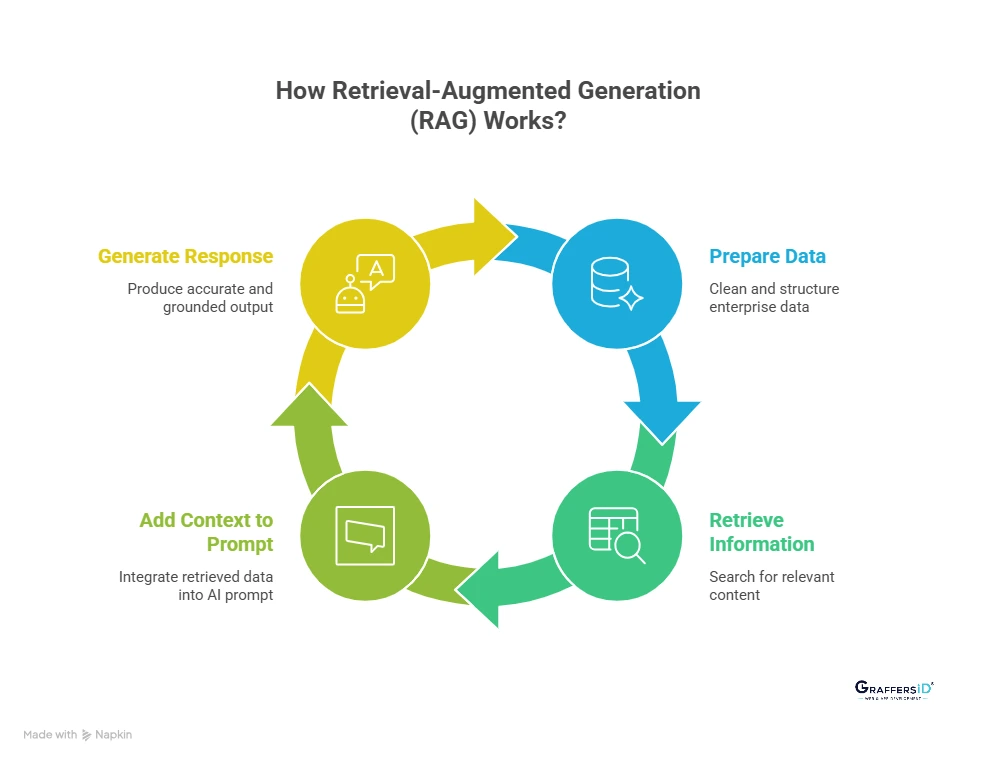

How Does Retrieval-Augmented Generation (RAG) Work?

1. Preparing and indexing enterprise data for AI

Before an AI system can retrieve knowledge, enterprise data must be prepared in a format it can understand.

This includes data such as:

- PDFs and policy documents
- Websites and help centers
- Product manuals and technical documentation
- CRM records and internal knowledge bases

The data is:

- Cleaned and structured to remove noise
- Split into smaller, meaningful chunks for better retrieval
- Converted into vector embeddings that represent semantic meaning

This process allows AI systems to search for information based on meaning and context, not just exact keywords.
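The preparation steps above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a production pipeline: `clean_text`, `chunk_text`, and `embed` are hypothetical helper names, and the hashed bag-of-words vector is only a stand-in for a real embedding model, which would capture semantic meaning far better.

```python
import hashlib
import math
import re


def clean_text(raw: str) -> str:
    """Collapse whitespace. Real pipelines also strip markup, headers, and so on."""
    return re.sub(r"\s+", " ", raw).strip()


def chunk_text(text: str, max_words: int = 40, overlap: int = 10) -> list[str]:
    """Split text into overlapping word windows."""
    if overlap >= max_words:
        raise ValueError("overlap must be smaller than max_words")
    words = text.split()
    step = max_words - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_words]))
        if start + max_words >= len(words):
            break  # the last window already reached the end of the text
    return chunks


def embed(text: str, dims: int = 64) -> list[float]:
    """Toy stand-in for an embedding model: hashed bag-of-words, L2-normalized."""
    vec = [0.0] * dims
    for token in re.findall(r"[a-z0-9]+", text.lower()):
        bucket = int(hashlib.sha256(token.encode()).hexdigest(), 16) % dims
        vec[bucket] += 1.0
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]


raw_document = "Refunds   are issued within 14 days.\n\nContact support to start a return."
cleaned = clean_text(raw_document)
# Each index entry pairs a chunk with its vector, ready to load into a vector store.
index = [(chunk, embed(chunk)) for chunk in chunk_text(cleaned, max_words=8, overlap=2)]
```

The overlap between neighboring chunks is a common design choice: it reduces the chance that a sentence split across a chunk boundary becomes unretrievable. Suitable chunk sizes depend on the data and the embedding model.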

2. Retrieving relevant information when a query is made

When a user asks a question, the RAG system does not generate an answer immediately.

Instead, it:

- Searches a vector database using semantic similarity
- Identifies the most relevant content related to the query
- Ranks and filters results to ensure high-quality context

This step ensures the AI has access to accurate and relevant information before generating a response.
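As a rough sketch of this retrieval step, the snippet below scores every chunk against the query with cosine similarity, filters out weak matches, and keeps the best results. The names `retrieve`, `top_k`, and `min_score` are assumptions made for illustration, and the word-count `embed` function is a toy substitute for a real embedding model and vector database.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: word counts. A real system would call an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, chunks: list[str], top_k: int = 2, min_score: float = 0.1) -> list[tuple[float, str]]:
    """Score every chunk, drop weak matches, and return the best top_k."""
    q = embed(query)
    scored = [(cosine(q, embed(c)), c) for c in chunks]
    kept = [pair for pair in scored if pair[0] >= min_score]
    return sorted(kept, key=lambda pair: pair[0], reverse=True)[:top_k]


chunks = [
    "Refunds are issued within 14 days of purchase.",
    "Our office is closed on public holidays.",
    "To request a refund, contact support with your order number.",
]
results = retrieve("refund within how many days", chunks)
for score, chunk in results:
    print(f"{score:.2f}  {chunk}")
```

Because the toy embedding only matches exact words, "refund" does not match "Refunds" here. Closing that gap is what semantic embeddings are for.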

3. Adding retrieved context to the AI prompt

The retrieved information is then added directly to the AI model’s prompt.

This gives the model:

- Precise and up-to-date context
- Verified source material from enterprise data
- Domain-specific knowledge tailored to the use case

By grounding the prompt in real data, the AI is guided to produce reliable and context-aware responses.
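One way to picture this step is a small function that turns retrieved passages into a grounded prompt. The sketch below is illustrative only: `build_prompt`, the `source` and `text` keys, and the instruction wording are all assumptions, and real prompt templates vary by model and use case.

```python
def build_prompt(question: str, passages: list[dict]) -> str:
    """Assemble a grounded prompt. Each passage has hypothetical 'source' and 'text' keys."""
    context_lines = [
        f"[{i}] ({p['source']}) {p['text']}" for i, p in enumerate(passages, start=1)
    ]
    return (
        "Answer the question using only the context below. "
        "Cite passages by their bracketed number. "
        "If the context does not contain the answer, say so.\n\n"
        "Context:\n" + "\n".join(context_lines) + "\n\n"
        f"Question: {question}\nAnswer:"
    )


passages = [
    {"source": "refund-policy.pdf", "text": "Refunds are issued within 14 days of purchase."},
]
prompt = build_prompt("How long do refunds take?", passages)
print(prompt)
```

Numbering the passages and naming their sources gives the model something concrete to cite, which supports the traceability discussed later in this guide.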

4. Generating accurate responses using grounded knowledge

Finally, the Large Language Model generates a response using the retrieved content as its knowledge source.

As a result:

- AI hallucinations are significantly reduced
- Responses are more accurate and explainable
- Outputs align with real business data and policies

This is what makes Retrieval-Augmented Generation suitable for enterprise AI applications, customer-facing systems, and decision-critical workflows in 2026.

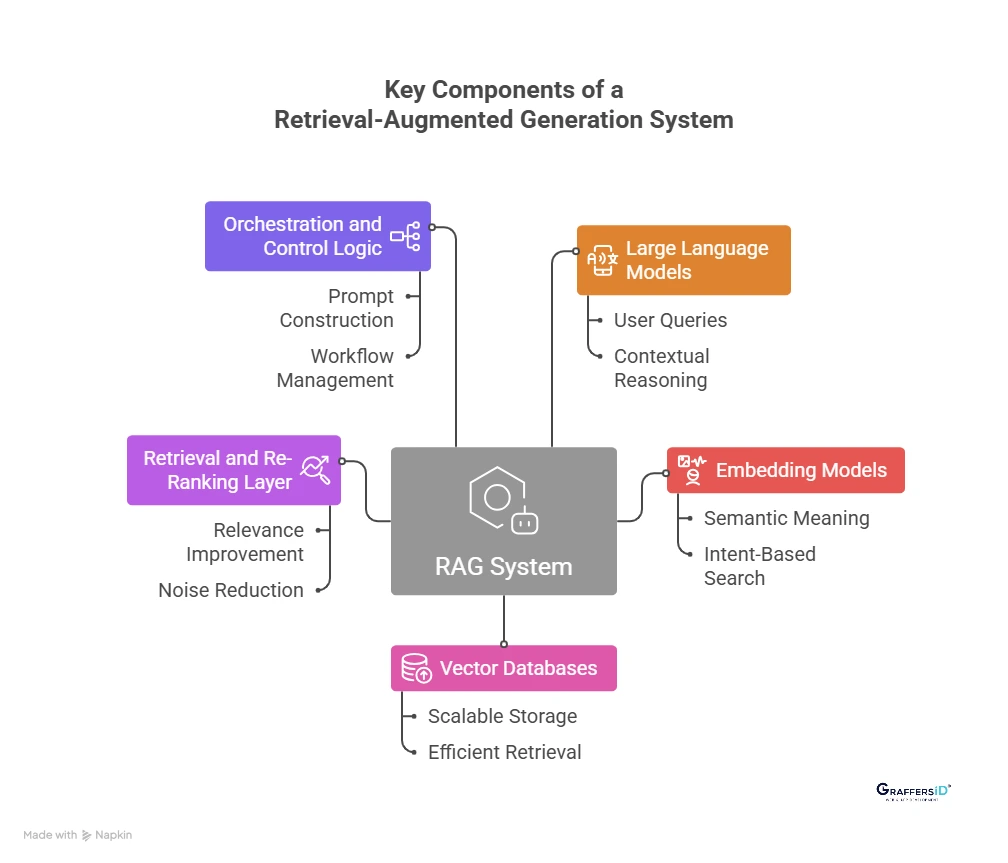

Key Components of a Retrieval-Augmented Generation (RAG) System

A Retrieval-Augmented Generation system is made up of multiple interconnected components that work together to deliver accurate, context-aware AI responses. Understanding these components helps enterprises design reliable and scalable AI architectures.

1. Large Language Models (LLMs)

Large Language Models are responsible for understanding user queries, reasoning over provided context, and generating natural language responses. In a RAG system, the LLM does not rely solely on its training data. Instead, it uses externally retrieved information to produce answers that are more accurate, current, and relevant to the business domain.

2. Embedding Models

Embedding models convert text, documents, and queries into numerical vectors that capture semantic meaning. These vectors make it possible to search data based on intent and context rather than exact keywords. High-quality embeddings are essential for accurate retrieval and play a critical role in the overall performance of RAG systems.

3. Vector Databases

Vector databases store embeddings and enable fast, scalable similarity searches across large datasets. They allow RAG systems to quickly identify the most relevant pieces of information from enterprise data such as documents, knowledge bases, and product manuals. Efficient vector storage and retrieval are key to maintaining low latency and high accuracy.

4. Retrieval and Re-Ranking Layer

The retrieval layer searches the vector database to find relevant content, while the re-ranking layer refines these results to ensure only the most useful and high-quality information is passed to the language model. This step improves answer relevance, reduces noise, and helps minimize hallucinations in AI responses.
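A minimal sketch of the re-ranking idea follows. It assumes a first-stage retriever has already produced a candidate list, and it re-orders those candidates with a simple term-coverage score. The `rerank` function and its scorer are hypothetical. Production systems typically use a dedicated re-ranking model, such as a cross-encoder, in place of this toy scorer.

```python
import re


def tokens(text: str) -> set[str]:
    """Lowercased word set for a piece of text."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def rerank(query: str, candidates: list[str], top_n: int = 2) -> list[str]:
    """Re-order first-stage candidates by the share of query terms each one covers."""
    q = tokens(query)

    def coverage(passage: str) -> float:
        return len(q & tokens(passage)) / len(q) if q else 0.0

    # sorted() is stable, so ties keep their first-stage order.
    return sorted(candidates, key=coverage, reverse=True)[:top_n]


# Candidates in the order a first-stage vector search might have returned them.
candidates = [
    "Shipping takes 3 to 5 business days.",
    "Password reset links expire after 30 minutes.",
    "To reset your password, open Settings and choose Security.",
]
best = rerank("reset password settings", candidates)
```

Only the passages in `best` would be passed on to the language model. The unrelated shipping passage is dropped.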

5. Orchestration and Control Logic

Orchestration logic manages how all RAG components work together. It controls prompt construction, retrieval workflows, response formatting, and system behavior. This layer also enables monitoring, optimization, and integration with enterprise applications, ensuring the RAG system operates reliably in production environments.
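To make the orchestration role concrete, here is a hedged sketch that wires retrieval, prompt construction, and generation into one call. Everything in it is an assumption for illustration: `Passage`, `Answer`, and `answer_question` are hypothetical names, and `fake_retrieve` and `fake_generate` are stubs standing in for a real retriever and a real LLM client.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Passage:
    source: str
    text: str


@dataclass
class Answer:
    text: str
    sources: list[str]


def answer_question(
    question: str,
    retrieve: Callable[[str], list[Passage]],
    generate: Callable[[str], str],
    max_passages: int = 3,
) -> Answer:
    """Wire retrieval, prompt construction, and generation into one call."""
    passages = retrieve(question)[:max_passages]
    if not passages:
        # Control logic: abstain rather than let the model guess.
        return Answer("No supporting documents were found.", [])
    context = "\n".join(f"[{p.source}] {p.text}" for p in passages)
    prompt = f"Use only this context:\n{context}\n\nQuestion: {question}\nAnswer:"
    return Answer(generate(prompt), [p.source for p in passages])


# Stub components so the sketch runs without external services.
def fake_retrieve(question: str) -> list[Passage]:
    if "refund" in question.lower():
        return [Passage("refund-policy.pdf", "Refunds are issued within 14 days.")]
    return []


def fake_generate(prompt: str) -> str:
    return "Refunds are issued within 14 days. [refund-policy.pdf]"


result = answer_question("What is the refund window?", fake_retrieve, fake_generate)
empty = answer_question("Where is the office?", fake_retrieve, fake_generate)
```

Passing the retriever and generator in as functions keeps the control logic independent of any particular vector database or model provider, which makes each component easier to swap, test, and monitor.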


Why Do Enterprises Use Retrieval-Augmented Generation (RAG) in 2026?

1. More Accurate AI Responses with Fewer Hallucinations: RAG grounds AI outputs in real, verified data, significantly reducing hallucinations and improving response reliability.

2. Faster Knowledge Updates Without Model Retraining: Enterprises can update AI knowledge by refreshing and re-indexing data sources, without retraining or redeploying large language models.

3. Better Compliance, Traceability, and Explainability: RAG enables AI responses to be traced back to original sources, supporting audits, regulatory compliance, and enterprise governance.

4. Scalable and Cost-Efficient AI Systems: By avoiding frequent fine-tuning, RAG lowers compute costs while scaling AI across teams, products, and use cases.

RAG vs. Traditional LLMs vs. Fine-Tuned Models: What Works Best for Enterprise AI in 2026?

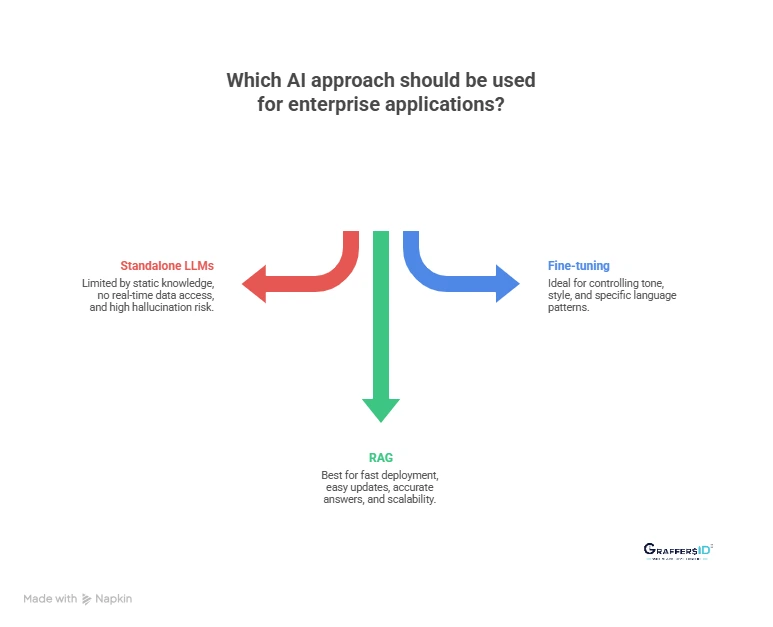

1. Why are Standalone LLMs Limited for Enterprise Use?

Standalone Large Language Models generate responses based only on what they learned during training. While powerful, this approach creates several challenges for real-world business applications:

- Static knowledge: LLMs do not automatically update when business data, policies, or market information changes.
- No real-time or proprietary data access: They cannot natively access internal documents, databases, or live systems without external integration.
- High risk of hallucinations: When information is missing or unclear, LLMs may produce confident but incorrect answers, posing risks for customer support, compliance, and decision-making.

For enterprises in 2026, these limitations make standalone LLMs unsuitable for mission-critical systems.

2. RAG vs Fine-tuning: When to Use Each Approach?

Retrieval-Augmented Generation and fine-tuning solve different problems. Understanding when to use each is key to building effective AI systems.

Retrieval-Augmented Generation (RAG) is best when you need:

- Faster AI deployment without long training cycles
- Easy updates as data changes frequently
- Accurate answers grounded in real business documents
- Scalable AI systems that grow with enterprise knowledge

RAG allows organizations to change what their AI knows by updating and re-indexing data sources, without retraining the model.

Fine-tuning is better suited for:

- Controlling tone, style, or brand voice
- Teaching very specific language patterns
- Narrow, stable use cases with limited data changes

Fine-tuning adjusts how a model responds, while RAG controls what information the model uses.

3. The Enterprise AI Strategy in 2026: RAG First, Fine-tuning Second

By 2026, most enterprise AI architectures follow a layered approach:

- RAG is used as the primary knowledge layer to ensure accuracy, freshness, and traceability.
- Fine-tuning is applied selectively to improve response quality, consistency, and domain behavior.

This combination delivers AI systems that are reliable, adaptable, and ready for real-world business use, making it the preferred strategy for modern enterprise AI deployments.

Real-World Applications of Retrieval-Augmented Generation (RAG) in 2026

1. Enterprise AI chatbots and virtual assistants: RAG enables internal AI assistants to answer employee questions using verified company policies, HR documents, finance data, and IT knowledge bases.

2. AI-powered customer support automation: Businesses use RAG to deliver accurate product support, onboarding guidance, and troubleshooting by retrieving information from real documentation in real time.

3. Legal, healthcare, and compliance AI systems: RAG helps professionals access case law, medical references, and regulatory guidelines with traceable, source-backed AI responses.

4. E-commerce search and personalization engines: Retail platforms use RAG to improve product discovery and recommendations by grounding AI responses in live catalogs and customer data.

5. Developer productivity and code intelligence tools: RAG-powered tools assist developers with code search, API references, and documentation-aware AI suggestions inside development workflows.


Key Technical Factors to Consider When Building RAG Systems

1. Data quality and chunking strategy: High-quality, well-structured data with the right chunk size and metadata is essential for accurate retrieval and reliable AI responses.

2. Security and access control: Enterprise RAG systems must enforce strict authentication, authorization, and data access rules to protect sensitive and proprietary information.

3. Latency and performance optimization: Production-grade RAG systems require a balance between fast retrieval times and response accuracy to deliver real-time user experiences.

4. Monitoring and evaluation: Continuous monitoring of retrieval relevance and output quality is necessary to maintain accuracy and improve system performance over time.
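Two of the factors above, access control and retrieval evaluation, lend themselves to a short sketch. The code below is an assumption-laden illustration, not a reference design: `Chunk`, `allowed_groups`, `visible_chunks`, and `hit_rate_at_k` are hypothetical names, and hit rate is only one of several metrics a team might track.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Chunk:
    chunk_id: str
    text: str
    allowed_groups: frozenset[str]


def visible_chunks(chunks: list[Chunk], user_groups: set[str]) -> list[Chunk]:
    """Apply access rules before retrieval so restricted text never reaches the prompt."""
    return [c for c in chunks if c.allowed_groups & user_groups]


def hit_rate_at_k(retrieved: dict[str, list[str]], relevant: dict[str, set[str]], k: int) -> float:
    """Fraction of test queries with at least one relevant chunk in the top k results."""
    if not relevant:
        return 0.0
    hits = sum(
        1 for query, gold in relevant.items()
        if gold & set(retrieved.get(query, [])[:k])
    )
    return hits / len(relevant)


# Access control: an engineer should not see HR-only content.
chunks = [
    Chunk("hr-1", "Salary bands for 2026.", frozenset({"hr"})),
    Chunk("kb-1", "VPN setup guide.", frozenset({"hr", "engineering"})),
]
visible = visible_chunks(chunks, {"engineering"})

# Evaluation: compare retrieved chunk IDs against a small hand-labeled test set.
retrieved = {"vpn setup": ["kb-1", "kb-7"], "salary bands": ["kb-3", "kb-9"]}
relevant = {"vpn setup": {"kb-1"}, "salary bands": {"hr-1"}}
score = hit_rate_at_k(retrieved, relevant, k=2)
```

Filtering before retrieval, rather than after generation, is the safer ordering: content the user cannot access never enters the prompt, so the model cannot leak it.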

Advanced RAG Trends to Watch in 2026

1. Agentic RAG for autonomous enterprise AI: Agentic RAG systems combine retrieval with AI agents that can perform multi-step reasoning, use multiple tools, and take autonomous actions across enterprise workflows.

2. Multi-modal RAG for richer AI understanding: Multi-modal RAG enables AI systems to retrieve and reason across text, images, audio, and video, delivering more context-aware and human-like responses.

3. Real-time RAG with live enterprise data: Real-time and streaming RAG connects AI models to live APIs, dashboards, and event streams, ensuring responses are always based on the latest available data.

4. Hybrid search RAG for higher retrieval accuracy: Hybrid RAG approaches combine keyword-based search with semantic vector search to improve relevance, precision, and answer quality across large datasets.
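The hybrid search idea in the last item can be illustrated with reciprocal rank fusion (RRF), one common way to merge a keyword ranking with a vector ranking. This is a sketch under assumptions: the article does not prescribe a fusion method, the document IDs are made up, and `k = 60` is simply a frequently used default.

```python
def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[str]:
    """Merge ranked ID lists: each list contributes 1 / (k + rank) per document."""
    scores: dict[str, float] = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    return sorted(scores, key=lambda doc_id: scores[doc_id], reverse=True)


keyword_ranking = ["doc-a", "doc-b", "doc-c"]  # e.g. from a keyword search
vector_ranking = ["doc-c", "doc-a", "doc-d"]   # e.g. from a vector similarity search
fused = reciprocal_rank_fusion([keyword_ranking, vector_ranking])
print(fused)
```

RRF works on ranks rather than raw scores, so it avoids having to normalize keyword scores and vector similarities onto a shared scale. Documents that appear high in both lists rise to the top.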


Popular Tools and Frameworks Used to Build RAG Systems in 2026

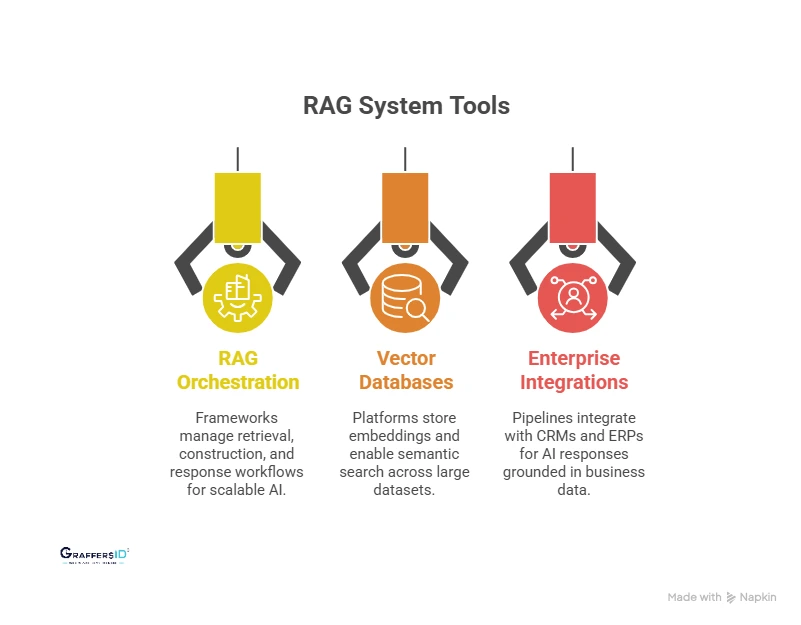

1. RAG orchestration frameworks

RAG orchestration frameworks manage document retrieval, prompt construction, and response workflows, enabling scalable and production-ready AI applications.

2. Vector database platforms

Vector databases store embeddings and enable fast, high-accuracy semantic search across large enterprise datasets used by RAG systems.

3. Enterprise system integrations

RAG pipelines integrate with CRMs, ERPs, and internal tools to deliver AI responses grounded in real-time and proprietary business data.

How to Implement RAG Successfully in Enterprises in 2026

1. Define a clear business problem: Focus on a specific, measurable use case that RAG can solve, rather than using it just to showcase AI capabilities.

2. Prepare high-quality data: Well-structured, clean, and relevant data is essential for accurate retrieval and reliable AI responses.

3. Continuously monitor and improve: Regularly evaluate retrieval accuracy, response quality, and user feedback to optimize system performance over time.

Conclusion: Why RAG Is a Key Enterprise AI Strategy in 2026

By 2026, enterprises are no longer debating whether to adopt AI; they are focused on making AI reliable, scalable, and aligned with business needs. Retrieval-Augmented Generation (RAG) addresses this by combining the reasoning power of Large Language Models with real-world, up-to-date knowledge, enabling businesses to build AI systems that are accurate, trustworthy, and future-ready.

Whether your goal is enhancing AI chatbots, improving enterprise search, automating workflows, or creating intelligent platforms, RAG ensures your AI solutions deliver higher accuracy, better trust, faster deployment, and long-term scalability.

At GraffersID, we help organizations transform their operations with cutting-edge AI solutions and services, from intelligent automation to custom AI applications.

Partner with GraffersID to build scalable, intelligent, and future-ready AI systems that give your business a competitive edge in 2026 and beyond.