As enterprises accelerate digital transformation in 2026, AI assistants and agentic AI systems are no longer optional; they’re business-critical. From HR automation to client onboarding, companies are embedding AI into everyday workflows.

The challenge for CTOs and tech leaders isn’t adopting AI; it’s building systems that are private, secure, and tailored to their organization’s data and processes. This is where AnythingLLM has emerged as a game-changing framework.

For technology executives evaluating how to create scalable AI assistants or autonomous agents without vendor lock-in, this guide breaks down what AnythingLLM is, how it works, and why it’s one of the most flexible open-source AI frameworks in 2026.

What is AnythingLLM in 2026?

AnythingLLM is an open-source, developer-centric platform that enables companies to build powerful AI agents and assistants by integrating advanced Large Language Models (LLMs) like Claude, LLaMA, or GPT-4 with their own organizational data.

Unlike SaaS platforms such as ChatGPT Enterprise or Microsoft Copilot, AnythingLLM is designed for control, flexibility, and enterprise-grade security:

✅ Choose your own LLM provider (cloud or local hosting)

✅ Control which data sources feed the AI

✅ Deploy in your own environment (cloud, on-prem, or hybrid)

It acts as a bridge between your proprietary knowledge base and modern AI models. When paired with a self-hosted or local model, your data never has to leave your own infrastructure, which sharply reduces the risk of data leakage.

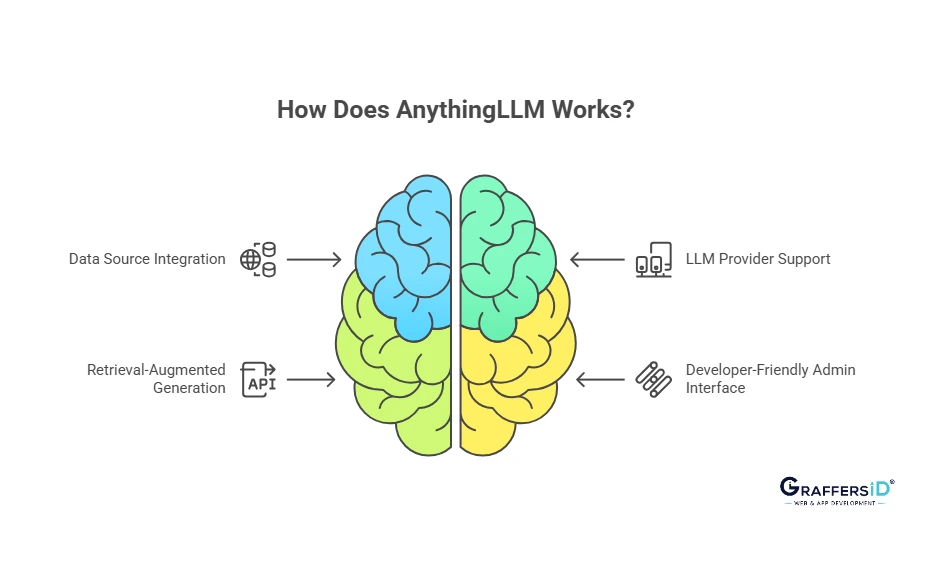

How Does AnythingLLM Work?

Unlike complex ML stacks, AnythingLLM is modular and developer-friendly, making it ideal for mid-sized teams, startups, and enterprises working with external AI developers or development partners like GraffersID.

1. Data Source Integration

AnythingLLM includes built-in connectors for a wide range of enterprise data sources, including:

- Notion pages

- Google Drive and Docs

- GitHub repositories

- Slack channels

- PDF documents

- Markdown files

- JSON/CSV/HTML

Teams can load both structured and unstructured data through the ingestion layer. Each document is split into chunks and converted into vector embeddings, so that everything from product wikis to legal PDFs becomes a searchable, context-aware part of your assistant’s knowledge.
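To make the chunking step concrete, here is a minimal sketch of fixed-size chunking with overlap. It is an illustration only: the function name `chunk_text` and the default sizes are assumptions, and AnythingLLM’s own splitter may work differently (for example, by splitting on tokens or sentences rather than characters).

```python
def chunk_text(text: str, chunk_size: int = 200, overlap: int = 40) -> list[str]:
    """Split text into character windows that overlap by `overlap` characters.

    Hypothetical helper for illustration; not AnythingLLM's actual splitter.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be in [0, chunk_size)")

    step = chunk_size - overlap  # how far the window advances each time
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        # Stop once the current window already reaches the end of the text.
        if start + chunk_size >= len(text):
            break
    return chunks


if __name__ == "__main__":
    for piece in chunk_text("abcdefghij", chunk_size=4, overlap=1):
        print(piece)
```

The overlap means a sentence cut at a chunk boundary still appears whole in at least one neighboring chunk, which tends to help retrieval quality.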

2. Multi-Model LLM Provider Support

Unlike closed platforms, AnythingLLM is LLM-agnostic. It supports:

- OpenAI (GPT-4, GPT-3.5)

- Anthropic Claude 2/3

- Cohere, Mistral, Google Gemini

- Local models via Ollama: LLaMA 3, Mixtral, TinyLlama, and more

CTOs can choose the right provider based on cost, latency, model capability, or data privacy policies.
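One way to reason about that trade-off is to write the policy down as code. The sketch below is a hypothetical selection helper, not part of AnythingLLM: the `ProviderOption` fields, the provider labels, and the cost and latency numbers are all made-up placeholders.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ProviderOption:
    name: str                  # free-form label, not an AnythingLLM identifier
    runs_locally: bool         # True if prompts never leave your infrastructure
    cost_per_1k_tokens: float  # placeholder figure, not real pricing
    typical_latency_ms: int    # placeholder figure


def pick_provider(
    options: list[ProviderOption],
    require_local: bool,
    max_latency_ms: int,
) -> ProviderOption:
    """Return the cheapest option that satisfies the privacy and latency policy."""
    eligible = [
        o for o in options
        if (o.runs_locally or not require_local)
        and o.typical_latency_ms <= max_latency_ms
    ]
    if not eligible:
        raise LookupError("no provider satisfies the policy")
    return min(eligible, key=lambda o: o.cost_per_1k_tokens)
```

With a strict privacy policy (`require_local=True`), only locally hosted models remain eligible, regardless of cost.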

3. Retrieval-Augmented Generation (RAG)

At the heart of AnythingLLM is its RAG pipeline, a technique where AI responses are enhanced by retrieving relevant data from your own documents before the model generates a reply.

This helps deliver:

- Higher accuracy

- Deep personalization

- Data-backed answers

AnythingLLM stores embedded document chunks in a vector database such as Chroma, Weaviate, or Qdrant, and retrieves the most relevant ones to ground each response.
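The retrieve-then-generate idea can be shown with a toy example. This sketch swaps in a bag-of-words count for the embedding model and a plain list for the vector database, so it is a stand-in for the technique rather than AnythingLLM’s implementation. All function names are hypothetical.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy 'embedding': word counts. Real systems use a learned embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(question: str, chunks: list[str], top_k: int = 2) -> list[str]:
    """Return the top_k chunks most similar to the question."""
    q = embed(question)
    ranked = sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)
    return ranked[:top_k]


def build_prompt(question: str, chunks: list[str], top_k: int = 2) -> str:
    """Assemble the prompt that would be sent to the LLM."""
    context = "\n".join(f"- {c}" for c in retrieve(question, chunks, top_k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"
```

The model only sees the retrieved chunks, which is why answers stay tied to your own documents instead of the model’s general training data.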

4. Developer-Friendly Admin Interface

AnythingLLM comes with a polished, intuitive admin interface built in React. Through it, developers can:

- Create and manage assistants

- Assign specific data sources to each assistant

- Configure LLM API keys and providers

- Monitor usage and team access

- Export conversation history

This makes it easy to scale AI usage across departments without needing ML engineers on staff.

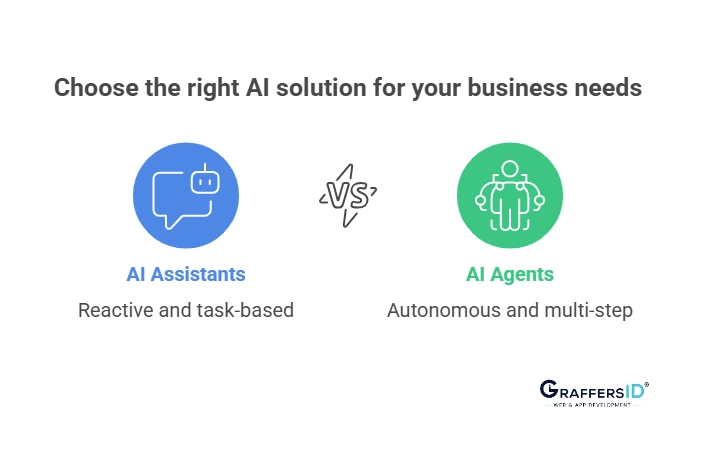

AI Assistants vs. AI Agents: What CTOs Need to Know in 2026

It’s important to understand the distinction between AI assistants and AI agents, as AnythingLLM can serve both roles:

AI Assistants

- Reactive in nature

- Respond to user input with factual or task-based outputs

- Example: Helpdesk chatbot that answers company policy questions

AI Agents

- Autonomous reasoning and decision-making capabilities

- Perform multi-step actions (e.g., plan, analyze, summarize, generate)

- Often integrated with APIs, databases, or tools to perform tasks

With the right architecture and prompts, AnythingLLM allows you to build both, from simple assistants answering HR queries to complex agents coordinating tasks across project management systems.
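The difference is easiest to see side by side. In this sketch, an assistant is a single call, while an agent loops: it asks a planner for the next action, runs the matching tool, and feeds the result back until the planner says it is done. The planner and tools are passed in as plain functions; the names `assistant_reply`, `run_agent`, and the `"finish"` action are illustrative assumptions, not AnythingLLM APIs.

```python
from typing import Callable


def assistant_reply(question: str, answer_fn: Callable[[str], str]) -> str:
    """Reactive assistant: one input, one output."""
    return answer_fn(question)


def run_agent(
    goal: str,
    plan_fn: Callable[[str, list], tuple[str, str]],
    tools: dict[str, Callable[[str], str]],
    max_steps: int = 5,
) -> tuple[str, list]:
    """Agent loop: plan, act, observe, repeat.

    plan_fn returns (action, argument). The action "finish" ends the loop
    and its argument is treated as the final answer.
    """
    history: list[tuple[str, str, str]] = []
    for _ in range(max_steps):
        action, arg = plan_fn(goal, history)
        if action == "finish":
            return arg, history
        result = tools[action](arg)  # raises KeyError for an unknown tool
        history.append((action, arg, result))
    raise RuntimeError("agent exceeded max_steps without finishing")
```

The `max_steps` cap is a deliberate design choice: an agent that can call tools should always have a hard limit so a confused planner cannot loop forever.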

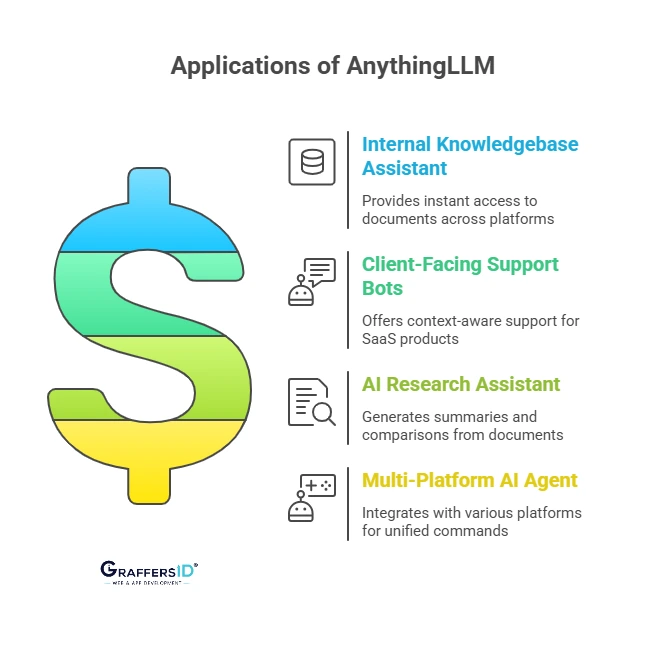

Enterprise Use Cases of AnythingLLM in 2026

1. Internal Knowledge Base AI

Imagine a new hire in engineering asking, “Where is the latest version of the API architecture?”

Instead of digging through Notion or Slack, they ask an AI assistant built with AnythingLLM, which references tagged documents across GitHub, Notion, and Google Drive to deliver the right file instantly.

This is possible through vector search and retrieval built into AnythingLLM.

2. Client Support Bots

For SaaS companies, AnythingLLM can power a custom support bot grounded in product documentation, FAQs, and changelogs.

It can handle product onboarding, bug queries, or feature suggestions, all with context awareness that generic chatbots can’t match.

3. Research Assistants

Legal, finance, and consulting firms can use AnythingLLM to parse hundreds of internal documents and generate:

- Executive summaries

- Regulation comparisons

- Contract clause highlights

This saves days of manual work and improves research efficiency.

4. Cross-Platform Agents

Using built-in APIs or webhooks, your AI assistant can integrate with Slack, Trello, JIRA, or your custom app, giving users a unified command interface across multiple platforms, powered by internal company knowledge.
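A unified command interface usually means normalizing each platform’s message format into one shape before it reaches the assistant. The sketch below shows that idea under heavy assumptions: the payload fields are simplified stand-ins (not the real Slack or Jira schemas), and `ask_assistant` represents whatever call your integration makes to the assistant.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Command:
    platform: str
    user: str
    text: str


def normalize(platform: str, payload: dict) -> Command:
    """Map a platform-specific payload onto one common Command shape.

    The field names are simplified assumptions for illustration only.
    """
    if platform == "slack":
        return Command("slack", payload["user"], payload["text"])
    if platform == "jira":
        return Command("jira", payload["author"], payload["comment"])
    raise ValueError(f"unsupported platform: {platform}")


def handle(platform: str, payload: dict, ask_assistant: Callable[[str], str]) -> str:
    """Normalize the incoming event, query the assistant, and format a reply."""
    cmd = normalize(platform, payload)
    return f"[{cmd.platform}] @{cmd.user}: {ask_assistant(cmd.text)}"
```

Adding a new platform then only requires a new branch in `normalize`; the assistant call itself stays unchanged.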

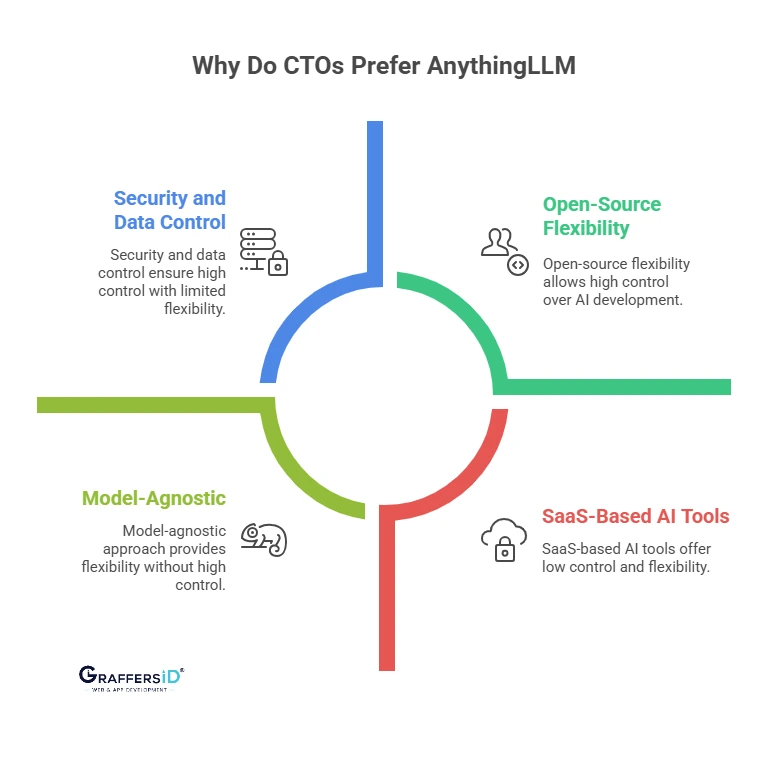

Why CTOs Prefer AnythingLLM for Private AI Development

1. Security & Compliance

Unlike SaaS-based AI tools, AnythingLLM gives complete control over hosting, data ingestion, and model interaction. Whether you want to host it on AWS, Azure, or a private server, it’s your call.

2. Open-Source Flexibility

Your development team can extend the platform:

- Add new connectors

- Modify agent behavior

- Build custom UI integrations

This fits well into existing product roadmaps and reduces vendor lock-in.

3. Remote Team Friendly

AnythingLLM can be adopted easily by external developers or staff augmentation partners like GraffersID. With Docker support, API docs, and prebuilt interfaces, ramp-up time is minimal.

4. Model Agnostic

You’re not tied to a single provider. Want to use GPT-4 now and switch to Claude next quarter? You can, without rearchitecting your entire system.

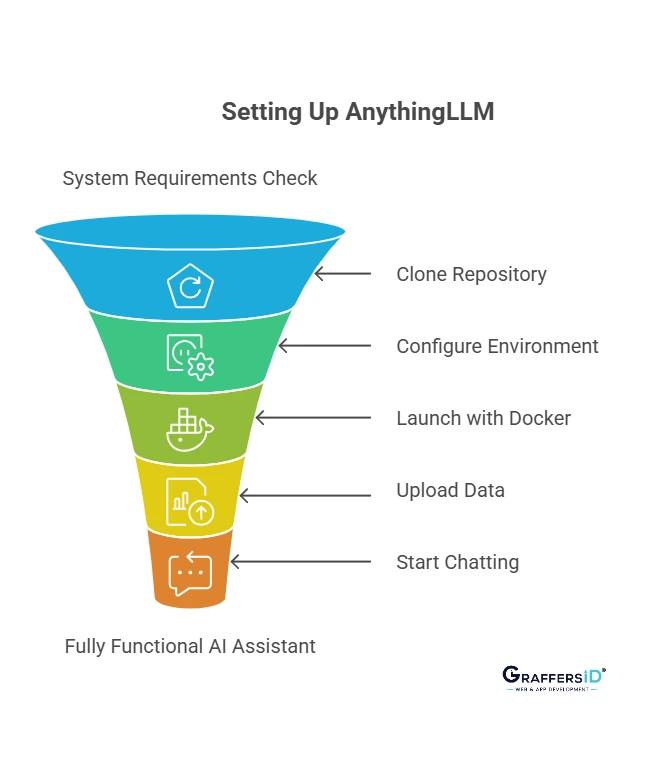

How to Set Up AnythingLLM (2026 Guide)

Setting up AnythingLLM is straightforward, even if you’re not running large-scale AI infrastructure. It’s built to be developer-friendly, with minimal dependencies and flexible deployment options. Here’s how to do it.

Step 1: System Requirements

Before you begin, make sure your environment has:

- Docker & Docker Compose (preferred for quick setup)

- Minimum 8GB RAM, though 16GB+ is recommended for smoother performance

- Access to an LLM API (like OpenAI GPT-4 or Anthropic Claude)

- Optional: GPU (for local LLMs like LLaMA via Ollama)

Step 2: Clone the AnythingLLM Repository

Start by cloning the official GitHub repository:

git clone https://github.com/Mintplex-Labs/anything-llm.git

cd anything-llm

This will give you access to the full codebase, environment configs, and Docker setup files.

Step 3: Configure Environment Variables

Copy the .env.template file to .env (check the repository for the exact template name and location in your release):

cp .env.template .env

Open it and set:

- Your OpenAI API key (or another model provider like Anthropic, Cohere, etc.)

- Optional: vector database settings (the built-in default is LanceDB; Chroma, Weaviate, Qdrant, and others can be configured instead)

- Port settings, storage paths, or external integrations (Slack, Notion, etc.)
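Before launching, it can help to check that the required settings are actually filled in. The following is a minimal sketch of such a check. The variable names (`LLM_PROVIDER`, `LLM_API_KEY`, `VECTOR_DB`) are illustrative placeholders; use the names that appear in the template shipped with your version.

```python
def parse_env(text: str) -> dict[str, str]:
    """Parse simple KEY=VALUE lines, skipping blanks and # comments."""
    values: dict[str, str] = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        # Drop surrounding whitespace and optional quotes around the value.
        values[key.strip()] = value.strip().strip('"').strip("'")
    return values


# Placeholder names for illustration; not taken from the real template.
REQUIRED = ("LLM_PROVIDER", "LLM_API_KEY")


def missing_keys(env: dict[str, str], required: tuple[str, ...] = REQUIRED) -> list[str]:
    """Return required keys that are absent or empty."""
    return [k for k in required if not env.get(k)]


if __name__ == "__main__":
    with open(".env", encoding="utf-8") as fh:
        problems = missing_keys(parse_env(fh.read()))
    print("Missing settings:", problems or "none")
```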

Step 4: Launch with Docker Compose

To start the app using Docker Compose:

docker-compose up --build -d

This will:

- Launch the server

- Start the front-end dashboard

- Set up vector DB and background workers

Once running, visit “http://localhost:3001” in your browser to access the UI.
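Containers can take a little while to become reachable after the command returns. A small readiness check like the one below can confirm the UI is responding before you hand the URL to your team. This is a generic sketch using Python’s standard library; the URL matches the one above, and the retry counts are arbitrary assumptions.

```python
import time
import urllib.error
import urllib.request
from typing import Callable


def is_up(url: str, timeout: float = 2.0) -> bool:
    """Return True if the URL answers with a non-error HTTP status."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return 200 <= resp.status < 400
    except (urllib.error.URLError, OSError):
        # Connection refused, timeout, or HTTP error: treat as "not ready yet".
        return False


def wait_until_up(
    url: str,
    attempts: int = 30,
    delay: float = 2.0,
    probe: Callable[[str], bool] = is_up,
    sleep: Callable[[float], None] = time.sleep,
) -> bool:
    """Poll the URL until it responds or the attempts run out."""
    for _ in range(attempts):
        if probe(url):
            return True
        sleep(delay)
    return False


if __name__ == "__main__":
    ready = wait_until_up("http://localhost:3001")
    print("UI is reachable" if ready else "UI did not respond in time")
```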

Step 5: Upload or Connect Your Data Sources

From the dashboard:

- Create your first AI Assistant

- Upload PDFs, connect Notion, import Slack messages, or add GitHub repos

- Choose the LLM your assistant should use (e.g., GPT-4)

The system will automatically chunk, embed, and store your content for retrieval.

Step 6: Deploy & Scale

Now, you can chat with your assistant directly from the dashboard. It will respond using retrieval-augmented generation (RAG), based on your documents.

You can also:

- Export conversations

- Add users/roles

- Embed assistants into your apps via APIs
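For the API route, your application sends the user’s message over HTTP with an API key and displays the reply. The sketch below only builds such a request, so nothing is sent. The endpoint path, the `mode` field, and the bearer-token header are assumptions about the developer API; confirm them against the API documentation exposed by your own instance before relying on them.

```python
import json
import urllib.request


def build_chat_request(
    base_url: str, workspace: str, api_key: str, message: str
) -> urllib.request.Request:
    """Build (but do not send) a chat request for one assistant/workspace.

    The path and payload shape are assumptions to verify against your
    instance's API docs.
    """
    url = f"{base_url.rstrip('/')}/api/v1/workspace/{workspace}/chat"
    body = json.dumps({"message": message, "mode": "chat"}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
    )


if __name__ == "__main__":
    req = build_chat_request(
        "http://localhost:3001", "hr-assistant", "YOUR_API_KEY", "What is our leave policy?"
    )
    print(req.get_method(), req.full_url)
    # To actually send it: urllib.request.urlopen(req)
```

Keeping request construction separate from sending makes the integration easy to unit test without a running server.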

Next Steps:

Once the assistant is stable:

- Add more data sources

- Customize its behavior via prompts

- Integrate into Slack, web apps, or CRMs

Conclusion

In 2026, AnythingLLM is one of the most powerful frameworks for building private, enterprise-ready AI agents. Unlike SaaS-based AI assistants, it puts CTOs in control of data, models, and infrastructure.

With the right AI development partner like GraffersID, enterprises can go beyond off-the-shelf chatbots and build custom AI assistants, semi-autonomous agents, and enterprise-grade workflows, fully aligned with security, compliance, and long-term digital strategy.

Want to develop AI assistants or agents tailored to your business?

GraffersID provides experienced AI developers who can safely, efficiently, and dependably integrate frameworks like AnythingLLM into your platform.

Hire AI Developers from India to turn your AI vision into reality in 2026.