In 2026, enterprises are outgrowing traditional chatbots, rule-based automation, and single-modality AI systems. The new competitive edge lies in intelligent systems that can see, hear, read, reason, and act simultaneously, bridging the gap between digital workflows and human-like understanding.

This shift has given rise to multimodal AI agents: autonomous systems that process text, images, audio, video, documents, and sensor data together to deliver deeper insights, faster decisions, and fully automated business operations.

For CTOs, CEOs, product leaders, and innovation teams, multimodal agents represent far more than another AI upgrade. They enable true end-to-end automation, context-aware decisions, and AI-driven workflows that operate across channels, departments, and customer touchpoints.

As enterprise environments become more data-rich and operationally complex, these agents are quickly becoming the backbone of smart operations, predictive intelligence, and hyper-personalized customer experiences.

In this 2026 guide, you’ll learn:

- What multimodal AI agents are
- How they work behind the scenes
- Their core capabilities
- Enterprise-grade benefits
- High-value use cases across industries
- The future of multimodal systems for modern businesses

Let’s explore how these next-gen AI agents are reshaping the enterprise landscape.

What Are Multimodal AI Agents?

Multimodal AI agents are AI systems that can process and respond using multiple types of data simultaneously, such as text, images, audio, video, and sensor readings.

They go beyond chatbots or unimodal LLMs by using combined signals to interpret context and act intelligently. For example:

- Reading an instruction
- Analyzing an uploaded image
- Listening to a voice command
- Observing a video feed
- Accessing APIs and tools

And then completing the task autonomously.

In 2026, enterprises are increasingly deploying multimodal agents for operations, automation, customer support, compliance, and intelligent decision-making.

How Do Multimodal AI Agents Work?

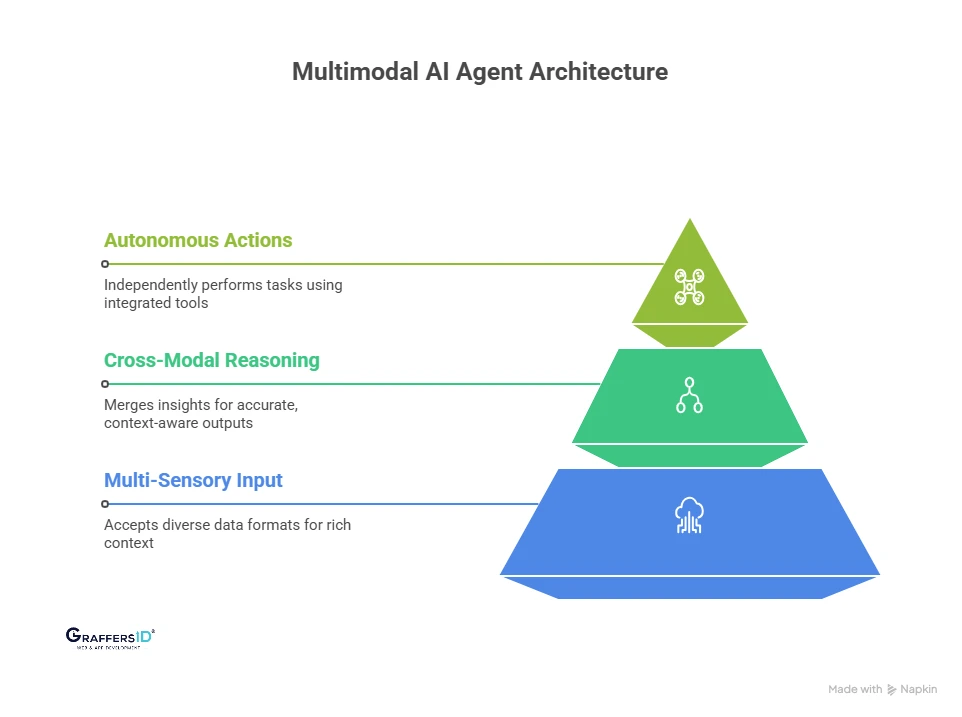

Multimodal AI agents operate using three core components that allow them to perceive, reason, and take action.

1. Multi-Sensory Input Processing

These agents accept inputs from multiple formats, enabling them to gather richer context than text-only AI systems.

- Text & documents: instructions, emails, PDFs, reports
- Audio/voice: commands, customer calls
- Images/screenshots: visual issues, UI errors, product photos
- Videos: surveillance, workflows, quality checks
- IoT/sensor data: machine performance, environmental readings
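To make the input side concrete, here is a minimal Python sketch of how an agent might collect mixed inputs for one task before reasoning over them. The `Modality`, `ModalInput`, and `Observation` names, the field layout, and the sample values are all assumptions made for illustration; they do not come from any specific framework.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Any


class Modality(Enum):
    TEXT = "text"
    AUDIO = "audio"
    IMAGE = "image"
    VIDEO = "video"
    SENSOR = "sensor"


@dataclass
class ModalInput:
    """One piece of input tagged with its modality (hypothetical schema)."""
    modality: Modality
    payload: Any              # raw text, bytes, or a dict of sensor readings
    source: str = "unknown"   # where the input came from, e.g. "email"


@dataclass
class Observation:
    """Everything the agent has received for a single task."""
    inputs: list[ModalInput] = field(default_factory=list)

    def add(self, modality: Modality, payload: Any, source: str = "unknown") -> None:
        self.inputs.append(ModalInput(modality, payload, source))

    def by_modality(self, modality: Modality) -> list[ModalInput]:
        return [item for item in self.inputs if item.modality is modality]

    def modalities(self) -> set[Modality]:
        return {item.modality for item in self.inputs}


# Illustrative values only.
obs = Observation()
obs.add(Modality.TEXT, "Conveyor 3 is making a grinding noise", source="email")
obs.add(Modality.SENSOR, {"vibration_mm_s": 7.2}, source="iot-gateway")
obs.add(Modality.IMAGE, b"\x89PNG...", source="upload")
print(sorted(m.value for m in obs.modalities()))
```

Keeping every input in one tagged container means downstream steps can ask "what do we have for this task?" without caring which channel each item arrived on.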

2. Cross-Modal Reasoning

They merge insights from all input types to understand what is happening and why.

Example: A maintenance agent compares vibration sensor data with live video footage to predict machine failure and recommend action.

This combined reasoning makes the outputs more accurate and context-aware.
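The maintenance example can be sketched as a simple weighted fusion of two per-modality scores. This is only one possible approach, and a deliberately simple one: the weights, the `alarm_level` threshold, and the idea that a vision model has already produced a `video_anomaly` score between 0 and 1 are all assumptions. Production systems often learn the fusion rather than hand-tuning it.

```python
from dataclasses import dataclass


@dataclass
class Assessment:
    risk: float           # fused risk in [0, 1]
    recommendation: str


def vibration_score(rms_mm_s: float, alarm_level: float = 7.1) -> float:
    """Map a vibration reading to [0, 1]; alarm_level is an assumed threshold."""
    return max(0.0, min(1.0, rms_mm_s / alarm_level))


def fuse(vibration: float, video_anomaly: float,
         w_vibration: float = 0.6, w_video: float = 0.4) -> Assessment:
    """Weighted fusion of two per-modality scores, each already in [0, 1]."""
    risk = w_vibration * vibration + w_video * video_anomaly
    # Escalate only when both modalities agree; a single noisy
    # signal on its own gets the softer response.
    if risk >= 0.7 and min(vibration, video_anomaly) >= 0.5:
        action = "schedule maintenance"
    elif risk >= 0.4:
        action = "inspect at next shift"
    else:
        action = "no action"
    return Assessment(risk=round(risk, 3), recommendation=action)


# Hypothetical reading: 6.4 mm/s vibration, video model reports 0.8 anomaly.
assessment = fuse(vibration_score(6.4), video_anomaly=0.8)
print(assessment.recommendation)
```

Requiring agreement between the two signals before escalating is the point of cross-modal reasoning here: a high vibration reading with a normal-looking video feed leads to an inspection, not an immediate shutdown.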

3. Autonomous Actions & Tool Execution

Once the agent understands the task, it can independently perform actions using integrated tools or APIs.

- Generating reports or summaries
- Updating CRMs or internal systems
- Sending alerts or notifications
- Processing customer requests
- Executing multi-step workflows

This full cycle of perception, reasoning, and action is what lets multimodal agents complete enterprise tasks that text-only or rule-based systems cannot finish on their own.
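The three components can be wired together in a small loop. The sketch below is a toy version under stated assumptions: the tool names (`send_alert`, `create_report`), the keyword rule inside `reason`, and the string-based inputs are placeholders. In a real agent, `perceive` would call OCR, transcription, or vision models, and `reason` would typically delegate to a multimodal model.

```python
from typing import Callable

sent_alerts: list[str] = []


def send_alert(message: str) -> str:
    # Stand-in for a real notification API call.
    sent_alerts.append(message)
    return f"alert sent: {message}"


def create_report(message: str) -> str:
    # Stand-in for a real reporting or CRM integration.
    return f"report created: {message}"


# Hypothetical tool registry: tool name -> callable.
TOOLS: dict[str, Callable[[str], str]] = {
    "send_alert": send_alert,
    "create_report": create_report,
}


def perceive(raw_inputs: dict[str, str]) -> dict[str, str]:
    """Normalize each input; keys are modality labels, values are extracted text."""
    return {modality: value.strip().lower() for modality, value in raw_inputs.items()}


def reason(observation: dict[str, str]) -> tuple[str, str]:
    """Choose a tool and its argument from the combined observation."""
    combined = " ".join(observation.values())
    if "overheating" in combined or "smoke" in combined:
        return "send_alert", combined
    return "create_report", combined


def act(tool_name: str, argument: str) -> str:
    if tool_name not in TOOLS:
        raise ValueError(f"unknown tool: {tool_name}")
    return TOOLS[tool_name](argument)


def run_agent(raw_inputs: dict[str, str]) -> str:
    observation = perceive(raw_inputs)
    tool_name, argument = reason(observation)
    return act(tool_name, argument)


result = run_agent({"text": " Pump 2 status ", "video_caption": "Smoke near motor"})
print(result)
```

Restricting `act` to a fixed registry is a deliberate choice: the agent can only trigger actions that have been explicitly exposed to it.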

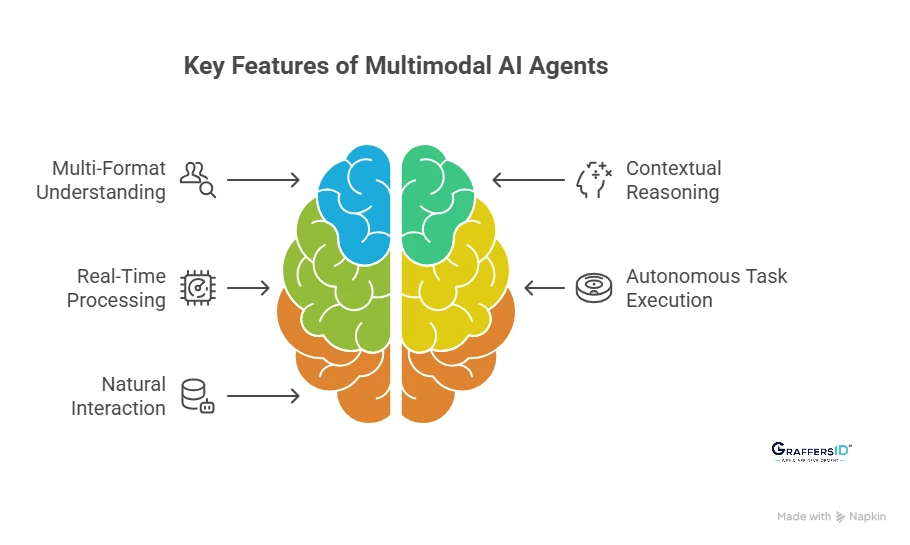

Key Features of Multimodal AI Agents in 2026

1. Multi-Format Understanding

Multimodal agents can process text, voice, images, videos, and documents together, just like humans combine multiple senses. This helps them understand complex queries or situations more accurately than single-modality AI.

2. Contextual Reasoning Across Different Data Types

These agents don’t just read or see data; they connect the dots. For example, they can match a customer’s audio complaint with a product photo or detect issues by comparing written instructions with video footage.

3. Real-Time Processing and Live Insights

They can analyze inputs from live cameras, audio streams, dashboards, or IoT sensors in real time. This makes them ideal for security monitoring, manufacturing, logistics, and any environment where instant decisions matter.

4. Autonomous Task Execution Using Tools and APIs

Multimodal agents can take actions independently: booking appointments, updating CRMs, generating reports, triggering workflows, or interacting with enterprise tools via APIs. This pushes automation beyond simple chatbot responses.

5. Natural and Flexible Human-Like Interaction

Teams can interact with these agents through voice notes, screenshots, PDFs, handwritten notes, or mixed inputs. This removes friction and makes AI adoption easier for non-technical users across the enterprise.

Benefits of Multimodal AI Agents for Modern Enterprises (2026)

1. Higher Accuracy With Better Context Understanding: Multimodal agents process text, images, audio, and video together, giving them a deeper understanding of situations. This improves prediction quality, reduces errors, and delivers more reliable decisions across workflows.

2. End-to-End Automation Without Multiple Tools: Instead of relying on separate systems for OCR, transcription, image analysis, and text processing, a multimodal agent performs all tasks in one flow. This enables smooth automation across processes that previously required manual intervention or multiple integrations.

3. Faster and More Personalized Customer Experience: Customers can explain issues using screenshots, chat messages, voice notes, or videos, whichever feels natural. Multimodal agents interpret all formats and respond accurately, reducing resolution time and improving satisfaction.

4. Lower Operational Costs Across the Tech Stack: By replacing multiple siloed AI tools with one unified multimodal system, companies save on licensing, integration, training, and maintenance. This consolidation reduces overhead while improving system performance.

5. Easy Scalability Across Departments and Teams: A single multimodal agent can be deployed across operations, marketing, support, engineering, HR, and compliance. This creates consistency, reduces onboarding time, and ensures every team benefits from the same intelligent automation.

Enterprise Use Cases of Multimodal AI Agents in 2026

1. Smarter Customer Support Automation

Multimodal AI agents analyze screenshots, app errors, voice queries, and PDFs together to resolve customer issues instantly. They identify problems, suggest fixes, and auto-create support tickets without human intervention.

2. AI-Assisted Healthcare Diagnostics

Agents combine medical images, doctor notes, teleconsultation audio, and patient records to support faster and more accurate diagnostics. They help clinicians prepare reports, detect anomalies, and streamline care workflows.

3. Manufacturing, Plant Monitoring, and Predictive Operations

Multimodal models interpret live camera feeds, thermal scans, sensor data, and machine sounds to detect defects and predict equipment failures. They enhance safety, reduce downtime, and improve overall plant productivity.

4. Retail and E-Commerce Personalization & Automation

These agents enable image-based product search, real-time shelf monitoring, fraud detection, and personalized recommendations. Shoppers can “snap and search,” while retailers optimize inventory and reduce operational gaps.

5. Education, Training, and Student Engagement

Multimodal agents assess facial expressions, voice tone, assignments, and written responses to personalize learning. They help educators identify learning gaps and deliver adaptive content based on student performance.

Challenges and Limitations of Multimodal AI Agents in 2026

1. High Computing and Deployment Costs: Processing text, images, video, and audio together demands powerful GPUs and optimized infrastructure, increasing implementation costs.

2. Data Privacy and Security Risks: Handling sensitive video, audio, and document data requires strict compliance with privacy regulations and enterprise governance policies.

3. Complex System Integration: Integrating multimodal AI agents into existing workflows and legacy systems can be challenging and may require process redesign.

4. Maintaining Accuracy Across Modalities: Ensuring consistent performance across speech, vision, and text inputs is difficult, especially in dynamic, real-world enterprise environments.

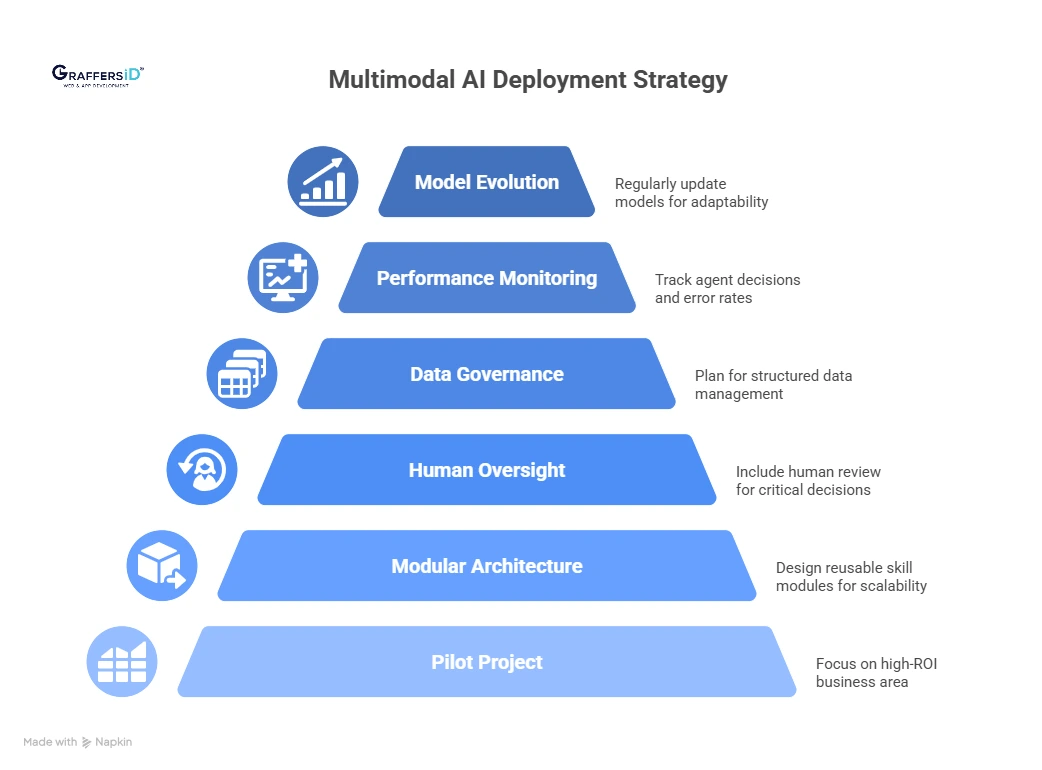

How to Successfully Deploy Multimodal AI Agents in Your Enterprise in 2026

1. Start With a High-Impact Pilot Project

Focus on one business area where multimodal AI delivers the highest ROI, such as customer support, predictive maintenance, or operational workflows. Starting small ensures faster wins and measurable results.

2. Build a Modular and Scalable Architecture

Design agents with independent, reusable skill modules. This allows enterprises to scale capabilities, add new modalities, and integrate smoothly with existing systems without overhauling the entire AI infrastructure.
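One way to picture "independent, reusable skill modules" is a small plug-in interface in which each module handles one modality. The `Skill` protocol, the two example skills, and their placeholder logic are hypothetical; a real module would wrap an actual model or service.

```python
from typing import Protocol


class Skill(Protocol):
    """Interface every skill module implements (hypothetical)."""
    modality: str

    def handle(self, payload: str) -> str: ...


class TextSummarySkill:
    modality = "text"

    def handle(self, payload: str) -> str:
        # Placeholder logic: keep only the first sentence.
        return payload.split(".")[0].strip()


class TranscriptSkill:
    modality = "audio"

    def handle(self, payload: str) -> str:
        # Placeholder: a real module would call a speech-to-text service.
        return f"transcript of {payload}"


class Agent:
    def __init__(self) -> None:
        self._skills: dict[str, Skill] = {}

    def register(self, skill: Skill) -> None:
        self._skills[skill.modality] = skill

    def supports(self, modality: str) -> bool:
        return modality in self._skills

    def process(self, modality: str, payload: str) -> str:
        if modality not in self._skills:
            raise KeyError(f"no skill registered for modality '{modality}'")
        return self._skills[modality].handle(payload)


agent = Agent()
agent.register(TextSummarySkill())
# Adding a modality later is one more register() call;
# existing skills are left untouched.
agent.register(TranscriptSkill())
print(agent.process("text", "Line 4 is down. Please advise."))
```

Because the agent depends only on the `Skill` interface, a team can swap the transcription vendor or add a video skill without changing the agent class itself.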

3. Include Human-in-the-Loop for Critical Decisions

Ensure key workflows include human oversight. Multimodal agents can automate routine tasks, but critical decisions, especially those affecting customers or compliance, should pass through human review for accuracy and accountability.
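A human-in-the-loop gate can be as simple as a routing function that decides whether a proposed action runs automatically or waits for approval. The sketch below assumes the agent reports a confidence value and that each action is flagged for customer or compliance impact; the field names and the 0.9 threshold are illustrative choices, not recommendations.

```python
from dataclasses import dataclass


@dataclass
class ProposedAction:
    name: str
    confidence: float              # agent's own confidence in [0, 1]
    affects_customer: bool = False
    affects_compliance: bool = False


def route(action: ProposedAction, min_confidence: float = 0.9) -> str:
    """Return 'auto' to execute immediately or 'human_review' to queue for approval."""
    # Critical categories always go to a person, however confident the agent is.
    if action.affects_customer or action.affects_compliance:
        return "human_review"
    # Routine actions still need review when the agent is unsure.
    if action.confidence < min_confidence:
        return "human_review"
    return "auto"


routine = ProposedAction("tag_ticket", confidence=0.97)
refund = ProposedAction("issue_refund", confidence=0.99, affects_customer=True)
print(route(routine), route(refund))
```

Note that the critical-category check comes first, so a high confidence score can never bypass review for customer-facing or compliance-related actions.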

4. Implement a Robust Multimodal Data Governance Framework

Plan for structured data management from day one:

- High-quality data labeling for all modalities
- Privacy and consent controls to protect sensitive information
- Security measures to prevent breaches
- Compliance with industry regulations such as GDPR or HIPAA

5. Monitor Agent Performance Continuously

Use dashboards and analytics tools to track agent decisions, error rates, and tool interactions. Continuous monitoring ensures consistent accuracy, identifies anomalies early, and supports iterative improvements.
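As a rough illustration of what such tracking might look like behind a dashboard, the sketch below keeps a rolling window of outcomes and counts tool usage. The `AgentMonitor` class, the window size, and the 10% alert threshold are assumptions chosen for the example.

```python
from collections import Counter, deque


class AgentMonitor:
    """Rolling error rate and per-tool call counts (hypothetical helper)."""

    def __init__(self, window: int = 100, alert_threshold: float = 0.1) -> None:
        # Only the most recent `window` outcomes are kept.
        self._outcomes: deque = deque(maxlen=window)
        self.tool_calls: Counter = Counter()
        self.alert_threshold = alert_threshold

    def record(self, tool: str, success: bool) -> None:
        self._outcomes.append(success)
        self.tool_calls[tool] += 1

    def error_rate(self) -> float:
        if not self._outcomes:
            return 0.0
        return self._outcomes.count(False) / len(self._outcomes)

    def needs_attention(self) -> bool:
        return self.error_rate() > self.alert_threshold


monitor = AgentMonitor(window=5)
for ok in (True, True, False, True, True):
    monitor.record("update_crm", ok)
print(monitor.error_rate(), monitor.needs_attention())
```

A rolling window keeps the metric focused on recent behavior, so a problem introduced by a new data source or model update shows up quickly instead of being averaged away by months of history.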

6. Continuously Update and Evolve Models

Enterprise environments change rapidly. Regularly retrain and fine-tune multimodal agents to adapt to new data sources, operational processes, and customer behaviors, keeping your AI relevant and effective.

Conclusion: Why Enterprises Must Adopt Multimodal AI Agents in 2026

Multimodal AI agents are no longer a futuristic concept; they are redefining enterprise operations, decision-making, and customer experiences. By smoothly integrating text, audio, images, video, and real-time sensor data, these agents deliver unmatched accuracy, autonomous workflows, and actionable insights across departments.

For CTOs, CEOs, and product leaders, the takeaway is clear: 2026 is the year to implement multimodal AI agents to build intelligent, future-ready workflows that scale efficiently, reduce operational complexity, and enhance competitive advantage.

At GraffersID, we help global companies build AI-driven automation systems, custom AI solutions, and web and mobile applications, and we provide dedicated remote AI developers.

Ready to bring artificial intelligence to your business? Contact GraffersID today and transform your workflows with next-gen AI solutions.