In 2026, the real challenge for tech leaders isn’t whether to use AI; it’s choosing the right type of model. Should you invest in Large Language Models (LLMs) like GPT-4 or Gemini 1.5, known for their deep reasoning but heavy infrastructure costs? Or are Small Language Models (SLMs) such as Mistral 7B and Microsoft Phi-3 the smarter choice, offering lightweight performance and domain-specific precision on edge devices?

This blog breaks down SLMs vs. LLMs: their key differences, advantages, limitations, and business use cases, so you can make the right decision for your business in 2026.

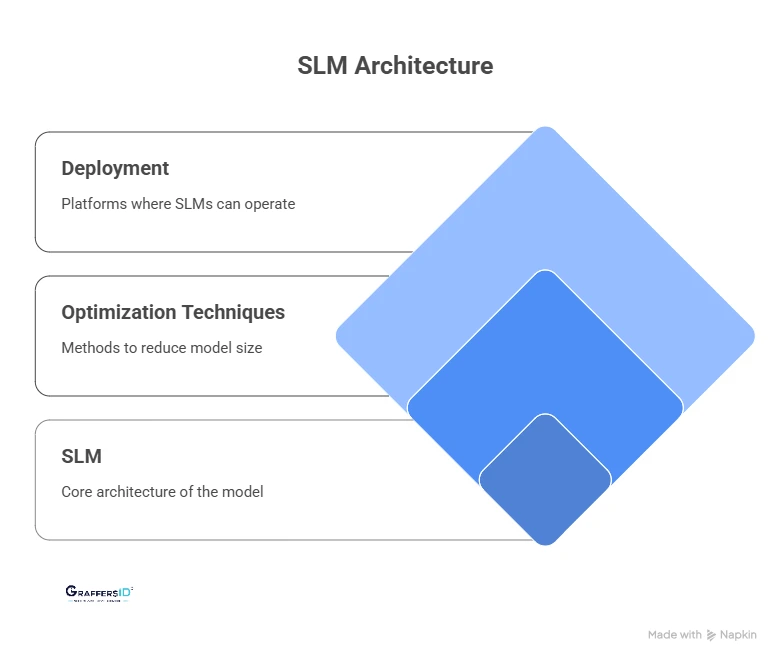

What is a Small Language Model (SLM)?

A Small Language Model (SLM) is a compact AI model designed to understand and generate natural language with far fewer parameters than an LLM, typically from a few million to a few billion. Unlike LLMs, SLMs are optimized for efficiency, speed, and domain-specific accuracy.

Key Features of SLMs in 2026

- Built on the transformer architecture, similar to LLMs but scaled down.

- Use knowledge distillation, pruning, and quantization to stay lightweight.

- Optimized for low memory usage, fast inference, and on-device deployment.

- Run on edge devices, local servers, or mobile hardware without requiring massive GPUs.
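
To make the quantization point concrete, here is a minimal sketch of symmetric 8-bit quantization in plain Python. The function names `quantize_int8` and `dequantize` and the sample weights are illustrative assumptions, not part of any library; production toolchains usually quantize per channel or per block and rely on optimized kernels.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization of float weights to int8 values."""
    max_abs = max(abs(w) for w in weights)
    # The scale maps the largest magnitude to 127; guard against all-zero input.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Recover approximate float weights from the int8 values."""
    return [v * scale for v in q]


# Hypothetical weights, chosen only for illustration.
weights = [0.12, -0.5, 0.33, 0.9, -0.07]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q, max_error)
```

Each weight now needs one byte instead of two or four, at the price of a rounding error of at most half the scale per weight.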

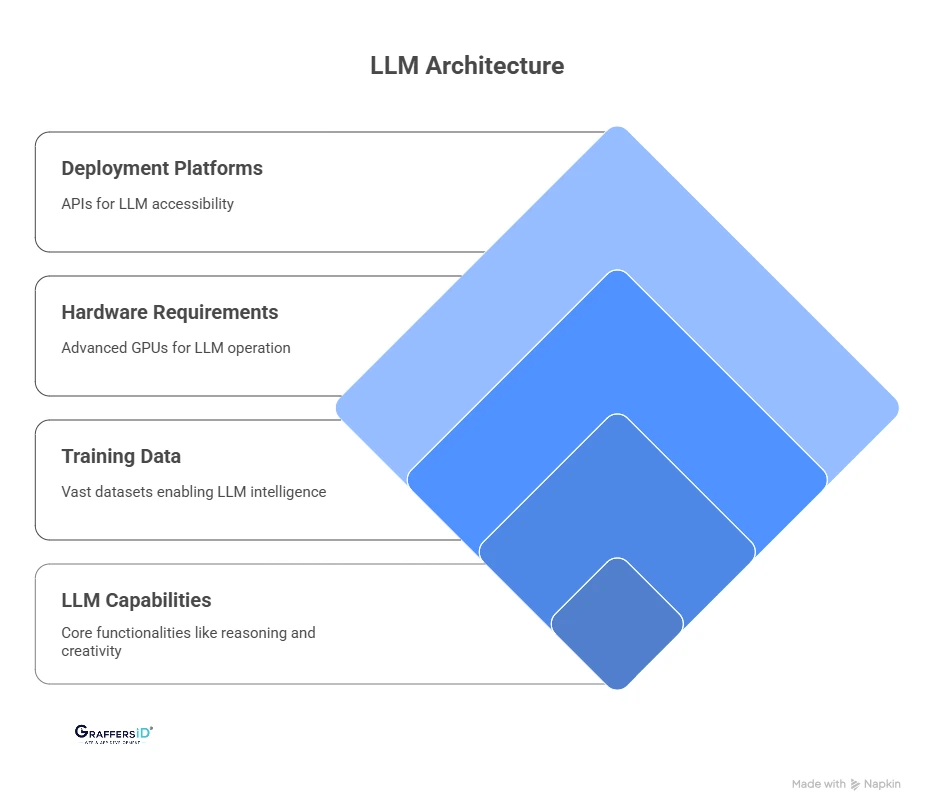

What is a Large Language Model (LLM)?

A Large Language Model (LLM) is a massive AI system with billions to trillions of parameters, trained on trillions of tokens. These models excel at general-purpose reasoning, problem-solving, and content generation across diverse domains.

Key Features of LLMs in 2026

- Trained on huge datasets, enabling cross-domain intelligence.

- Require advanced GPUs (e.g., NVIDIA A100/H100 clusters) and large-scale cloud infrastructure.

- Often deployed via APIs from providers like OpenAI, Anthropic, and Google DeepMind.

- Known for handling complex reasoning, creativity, and multilingual tasks.

Key Differences: SLM vs. LLM in 2026

Here’s a quick comparison:

| Feature | Small Language Models (SLMs) | Large Language Models (LLMs) |
| --- | --- | --- |
| Model Size / Parameters | Typically 2B–7B (e.g., Mistral 7B, Phi-3) | Reportedly up to ~1.7T; exact sizes are undisclosed (e.g., GPT-4, Gemini 1.5) |
| Inference Speed | Ultra-fast, low-latency responses | Slower, often cloud-dependent |
| Deployment Options | Edge devices, mobile apps, on-prem servers | Cloud APIs, enterprise-scale servers |
| Memory Requirements | 1–10 GB (lightweight hardware-friendly) | 100+ GB (requires advanced infrastructure) |
| Cost Efficiency | 50–75% cheaper to run than LLMs | Can cost millions in training + operations |
| Energy Usage | 50–100 kWh/month | 500–1000 kWh/month |
| Customization | Easy fine-tuning with proprietary datasets | Complex fine-tuning, requires expert teams |
| Best Use Cases | Task-specific AI, personalized apps, embedded solutions | Broad reasoning, generative AI, enterprise automation |
| Data Privacy | On-premise deployments with high data control | Higher API exposure and third-party risks |
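
As a rough sanity check on the memory row, weight memory can be estimated as parameters × bits per parameter ÷ 8. The sketch below is a back-of-the-envelope calculation under stated assumptions: the helper name `weight_memory_gb` is hypothetical, the parameter counts are nominal (3.8B and 7B), and the estimate covers weights only, ignoring the KV cache, activations, and runtime overhead.

```python
def weight_memory_gb(num_params, bits_per_param):
    """Approximate memory for model weights only, in GB (1 GB = 1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


# Nominal model sizes, used purely for illustration.
models = [("3.8B-parameter model", 3.8e9), ("7B-parameter model", 7e9)]

for name, params in models:
    for bits in (16, 8, 4):
        gb = weight_memory_gb(params, bits)
        print(f"{name} at {bits}-bit: ~{gb:.1f} GB")
```

Under these assumptions a 7B model needs about 14 GB at 16-bit precision and about 3.5 GB at 4-bit, which suggests the 1–10 GB range above generally presumes a quantized model.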

Quick Takeaway

- SLMs are lightweight, affordable, privacy-first, and task-specific.

- LLMs are powerful, resource-heavy, and ideal for enterprise-scale reasoning and generative AI.

Why Choosing the Right AI Model Size Matters in 2026

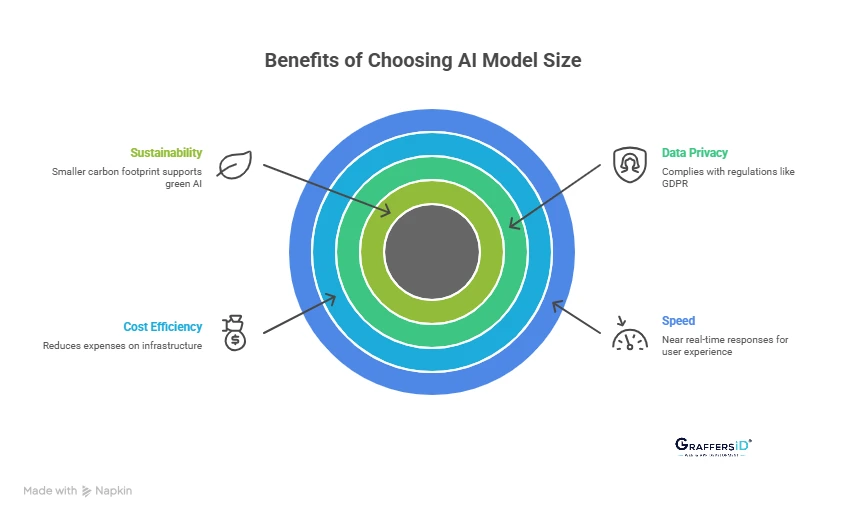

The choice between Small Language Models (SLMs) and Large Language Models (LLMs) directly impacts performance, cost, and compliance. Here’s why model size is a critical factor for businesses in 2026:

- Speed and User Experience: SLMs deliver near real-time responses, making them well suited to mobile apps, AI assistants, and edge-based applications where latency budgets are measured in milliseconds.

- Cost and Infrastructure Efficiency: Running SLMs can cut GPU, cloud, and energy expenses by up to 75%, helping enterprises scale AI adoption without overspending.

- Data Privacy and Compliance: On-premise or private SLM deployments align more easily with regulations like GDPR, HIPAA, and the EU AI Act, which helps keep AI operations secure and compliant.

- Sustainability and Environmental Impact: SLMs leave a smaller carbon footprint compared to resource-intensive LLMs, supporting green AI strategies and eco-conscious innovation.

How Are SLMs and LLMs Trained in 2026?

Large Language Models (LLMs)

- Trained on trillions of tokens and require massive compute power.

- Example: GPT-4 was reportedly trained on roughly 25,000 NVIDIA A100 GPUs (OpenAI has not published the figure), a scale that puts LLM training within reach of only tech giants and large research labs.

Small Language Models (SLMs)

- Can be fine-tuned with proprietary datasets ranging from thousands to millions of tokens, making them highly adaptable for specific industries and domains.

- This flexibility allows startups and enterprises to create custom AI solutions without billion-dollar budgets.

SLM vs. LLM: Key Features Comparison (2026)

1. Real-World Performance: Inference & Latency

- Small Language Models (SLMs): Run locally with faster Time to First Token (TTFT), ideal for offline and low-latency applications.

- Large Language Models (LLMs): Cloud-dependent, with higher latency caused by network and compute overhead.
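
Time to First Token can be measured with a few lines of timing code around any streaming response. The sketch below is an illustration only: `fake_token_stream` is a hypothetical stand-in for a real model stream, and its delays are made-up values rather than measurements of any SLM or LLM.

```python
import time


def fake_token_stream(tokens, first_delay_s=0.05, per_token_delay_s=0.01):
    """Hypothetical stand-in for a streaming model response."""
    time.sleep(first_delay_s)  # simulated network + prompt-processing delay
    for i, tok in enumerate(tokens):
        if i > 0:
            time.sleep(per_token_delay_s)  # simulated per-token decode time
        yield tok


def measure_stream(stream):
    """Return (ttft_seconds, total_seconds, tokens) for any token iterator."""
    start = time.perf_counter()
    ttft = None
    tokens = []
    for tok in stream:
        if ttft is None:
            # Elapsed time until the first token arrives.
            ttft = time.perf_counter() - start
        tokens.append(tok)
    total = time.perf_counter() - start
    return ttft, total, tokens


ttft, total, tokens = measure_stream(fake_token_stream(["Hello", ",", " world"]))
print(f"TTFT: {ttft * 1000:.0f} ms, total: {total * 1000:.0f} ms")
```

Swapping the fake stream for a local model and then for a cloud API, on the same prompts, gives a like-for-like latency comparison.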

Use Cases: SLM vs. LLM

- SLMs: Smart IoT devices, on-device healthcare diagnostics, and financial compliance tools.

- LLMs: Market research reports, AI-powered coding copilots, and global knowledge management systems.

2. Cost & Resource Considerations

- LLMs: Training costs can exceed $5M, and daily operations require specialized GPUs and large-scale cloud infrastructure.

- SLMs: Fine-tuning is possible on just 1–4 GPUs, with up to 75% lower costs and easier scaling.

Trend in 2026: Startups and SMEs prefer SLMs for affordability, while enterprises adopt a hybrid approach (LLM + SLM) to balance scale with cost-efficiency.

3. Security & Compliance

- SLMs: Can run fully on-premises, which supports data sovereignty, privacy, and regulatory compliance.

- LLMs: Usually accessed through third-party APIs, which adds data-exposure and vendor-dependency risks on top of the prompt-injection risks that can affect any language model.

Industry Preference: Highly regulated sectors like healthcare, fintech, and legal industries lean toward SLMs for security-first deployments, while LLMs remain popular for innovation-heavy tasks.

Best Use Cases of SLMs vs. LLMs in 2026

When to Use Small Language Models (SLMs)?

- Industry-specific chatbots (healthcare, legal, finance).

- Internal enterprise productivity tools.

- AI assistants for regulated sectors where data privacy is critical.

- Real-time mobile and IoT applications.

When to Use Large Language Models (LLMs)?

- Content generation at scale (blogs, marketing, storytelling).

- Enterprise-grade analytics and decision-making.

- AI coding assistants and software development support.

- Complex research tasks in science, law, or finance.

- Multilingual translation and cross-border communication.

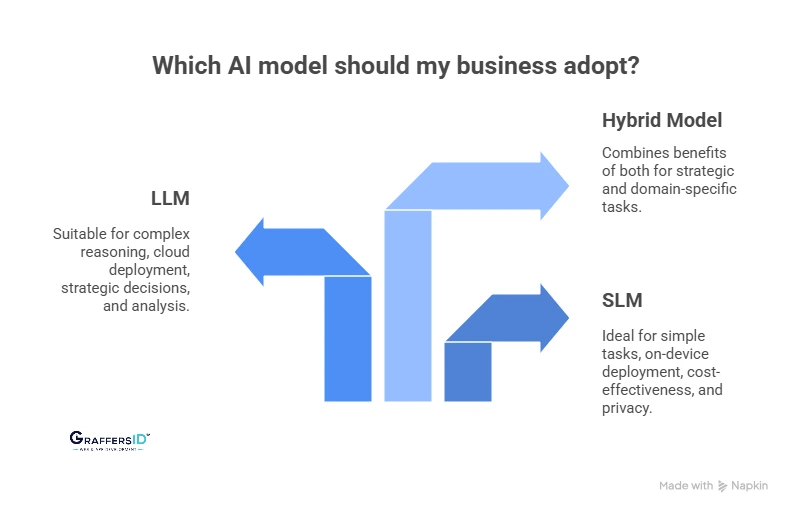

How to Choose the Right AI Model for Your Business in 2026? SLM vs. LLM

When deciding whether to adopt a Small Language Model (SLM) or a Large Language Model (LLM), focus on your business goals and technical requirements. Here are the key factors to weigh:

- Task Complexity: Use an SLM for simple, well-scoped tasks and an LLM for complex reasoning.

- Deployment: An SLM can run on-device or on-premises; an LLM typically requires cloud or large-scale server infrastructure.

- Budget: An SLM is cost-effective; an LLM needs a high compute investment.

- Compliance: An SLM can keep data in-house; an LLM may send data to an external provider.

- Latency: An SLM delivers near-instant responses; an LLM is slower, especially for large tasks.
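
The five factors above can be sketched as a simple rule-based helper. This is an illustrative simplification, not a definitive selection method: the `Requirements` fields and the `recommend` function are hypothetical names, and a real decision would weigh these factors rather than treat them as yes/no flags.

```python
from dataclasses import dataclass


@dataclass
class Requirements:
    complex_reasoning: bool = False
    on_device_or_on_prem: bool = False
    tight_budget: bool = False
    strict_data_privacy: bool = False
    low_latency: bool = False


def recommend(req):
    """Return (choice, reasons) based on the five factors listed above."""
    slm_reasons = []
    if req.on_device_or_on_prem:
        slm_reasons.append("deployment")
    if req.tight_budget:
        slm_reasons.append("budget")
    if req.strict_data_privacy:
        slm_reasons.append("compliance")
    if req.low_latency:
        slm_reasons.append("latency")

    # Complex reasoning pulls toward an LLM; any SLM factor makes it a hybrid.
    if req.complex_reasoning and slm_reasons:
        return "hybrid", ["task complexity"] + slm_reasons
    if req.complex_reasoning:
        return "LLM", ["task complexity"]
    return "SLM", slm_reasons or ["task complexity"]


choice, reasons = recommend(Requirements(strict_data_privacy=True, low_latency=True))
print(choice, reasons)
```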

In 2026, many enterprises adopt a hybrid AI model:

- LLMs for strategic decisions, analysis, and deep reasoning.

- SLMs for speed, privacy, and frequent domain-specific tasks.
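
A hybrid setup of this kind usually comes down to a small routing layer in front of both models. The sketch below assumes each request carries two hypothetical flags, `sensitive` and `deep_reasoning`, and that privacy takes priority over reasoning depth; `stub_slm` and `stub_llm` are placeholders for real model clients, not actual APIs.

```python
def route(request, slm, llm):
    """Send a request to the SLM or LLM backend and return (backend, answer)."""
    if request.get("sensitive", False):
        backend = "slm"  # assumption: sensitive data never leaves local infrastructure
    elif request.get("deep_reasoning", False):
        backend = "llm"  # complex analysis goes to the larger model
    else:
        backend = "slm"  # default: fast, inexpensive path for routine tasks
    model = slm if backend == "slm" else llm
    return backend, model(request["prompt"])


def stub_slm(prompt):
    """Placeholder for a locally hosted small model."""
    return f"[slm] {prompt}"


def stub_llm(prompt):
    """Placeholder for a cloud-hosted large model."""
    return f"[llm] {prompt}"


requests = [
    {"prompt": "Summarize this patient note", "sensitive": True, "deep_reasoning": True},
    {"prompt": "Draft a five-year market analysis", "deep_reasoning": True},
    {"prompt": "Classify this support ticket"},
]
results = [route(r, stub_slm, stub_llm) for r in requests]
for backend, answer in results:
    print(backend, "->", answer)
```

Putting the privacy check first is a design choice: it trades some answer quality on sensitive requests for the guarantee that they stay on the local model.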

Conclusion: SLM vs. LLM

In 2026, the choice between Small Language Models (SLMs) and Large Language Models (LLMs) isn’t about which one is better, but rather about choosing the right fit for your business goals.

SLMs excel in cost-efficiency, speed, privacy-focused deployments, and edge computing, while LLMs dominate when enterprises need advanced reasoning, creativity, and large-scale automation. Choose both if your business spans complex enterprise needs and real-time AI interactions.

The key takeaway? The future belongs to companies that match the right model to the right problem, ensuring both agility and scalability in their AI adoption roadmap.

At GraffersID, we help businesses adopt AI strategically, from building custom SLM solutions to deploying LLM-powered enterprise apps.

Need help choosing the right AI model? Hire expert AI developers at GraffersID and stay ahead of the AI curve.