The year 2025 marks a pivotal shift in the adoption of AI assistants. In 2026, AI assistants are embedded into enterprise workflows, SaaS platforms, compliance systems, and internal automation stacks.

For CTOs and product heads, the conversation has evolved. It’s no longer, “Should we implement AI?”

It’s “Which large language model (LLM) is secure, scalable, compliant, and reliable enough for enterprise deployment?”

As organizations move beyond experimentation, they are prioritizing deep reasoning, long-context document processing, governance-ready AI systems, privacy-first architecture, and stable performance at scale.

This is where Claude AI by Anthropic has positioned itself as a serious enterprise contender in 2026. In this comprehensive guide, you’ll learn:

-

What Claude AI is and how it works

-

Differences between Claude 3.5 Sonnet and Claude Opus

-

Claude AI pricing and deployment options

-

Real-world enterprise use cases

-

Claude vs. GPT-4.5 vs. Gemini comparison

-

When is Claude the right model for your organization?

What is Claude AI?

Claude AI is a family of advanced large language models (LLMs) developed by Anthropic (a U.S.-based AI research company founded by former OpenAI researchers), designed for safe, reliable, and enterprise-grade AI applications.

It is built to handle complex reasoning tasks, long documents, and compliance-sensitive workflows, making it especially suitable for legal, healthcare, financial, and SaaS environments.

Claude is named after Claude Shannon, the father of information theory, reflecting its foundation in advanced computational reasoning. In 2026, Claude is widely used for:

-

Contract and policy analysis

-

Research automation

-

Internal enterprise AI assistants

-

Knowledge base querying

-

Compliance-focused content generation

Available Claude Models in 2026

Anthropic offers multiple Claude models optimized for speed, cost, reasoning depth, and enterprise deployment. Here’s a clear breakdown to help you choose the right model.

1. Claude 3 Haiku: Fast, Lightweight & Cost-Optimized

Claude 3 Haiku is designed for high-speed, high-volume AI tasks where latency and cost matter most.

Best For:

- Customer support chatbots

- FAQ automation

- Text classification

- High-volume SaaS AI features

Why CTOs Choose Haiku: It delivers stable performance at minimal cost, making it ideal for production environments where speed and affordability matter more than deep reasoning.

2. Claude 3.5 Sonnet: Balanced Performance for Enterprise AI (Most Adopted in 2026)

Claude 3.5 Sonnet is the most widely adopted Claude model in 2026 across startups and mid-to-large enterprises. It offers a balance between cost, reasoning, and reliability.

Best For:

- AI copilots

- Internal knowledge assistants

- SaaS product integrations

- Compliance-aware content generation

Key Improvements in 2026:

- Stronger reasoning benchmarks

- Enhanced coding and debugging support

- Better instruction adherence

- Optimized inference performance

Why CTOs prefer Sonnet: It delivers near-flagship intelligence at significantly lower cost than Opus, making it ideal for production-scale AI deployment.

3. Claude 3 Opus: Advanced Reasoning & Document Intelligence

Claude 3 Opus is the flagship reasoning model designed for complex enterprise workflows that require deep analysis and long-context understanding.

Read More: What Are LLMs? Benefits, Use Cases, & Top Models in 2026

Best For:

- Contract analysis

- Multi-step reasoning tasks

- Financial modeling support

- 100+ page document processing

- Research synthesis

Key Capabilities:

- Context window up to 200K tokens

- Strong logical deduction

- Multi-document comparison

- High-accuracy long-form reasoning

Why Enterprises Use Opus: It is built for high-stakes environments where document intelligence, precision, and structured reasoning are critical.

4. Claude Opus 4.6: Enterprise-Grade Advanced Intelligence (Latest 2026 Upgrade)

Claude Opus 4.6 represents the latest evolution of Anthropic’s flagship model in 2026, designed for large-scale enterprise AI systems and advanced AI agent architectures.

It builds on Opus with improved reasoning depth, better tool orchestration, and enhanced stability in long workflows.

Best For:

- Enterprise AI agents

- Multi-step automation workflows

- Cross-document legal analysis

- Advanced financial forecasting

- Strategic research and planning systems

Key Improvements in 2026:

- Improved multi-step reasoning consistency

- Stronger performance in complex planning tasks

- Enhanced tool-use reliability

- Better long-context coherence

Why Large Enterprises Prefer Opus 4.6: It provides the highest level of reasoning reliability and workflow stability, making it suitable for mission-critical AI deployments and large-scale automation systems.

Quick Model Selection Guide

-

For speed and cost-efficiency: Choose Haiku

-

For balanced enterprise deployment: Choose Sonnet

-

For deep document reasoning: Choose Opus

-

For advanced AI agents and mission-critical systems: Choose Opus 4.6

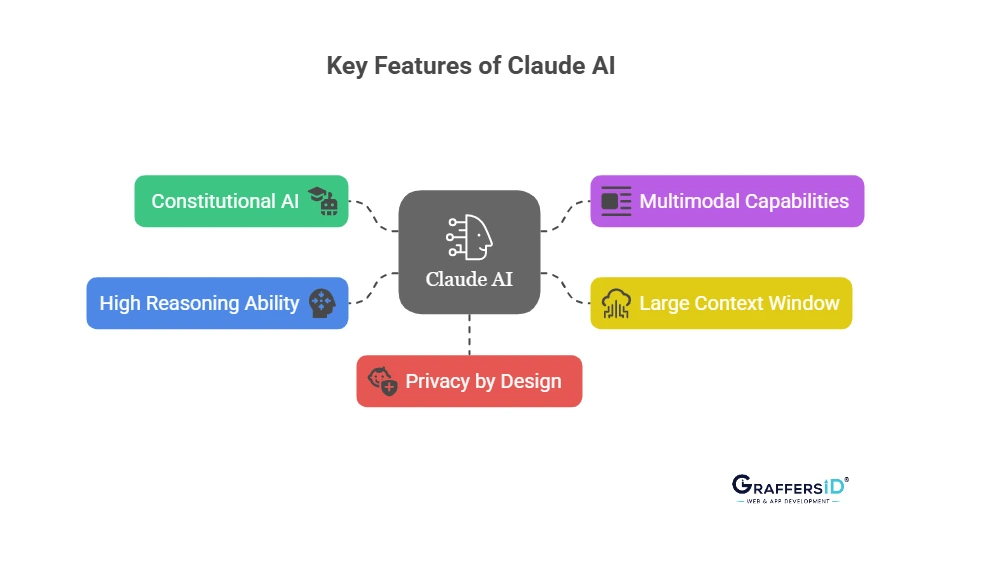

Key Features of Claude AI in 2026

Below are the core features of Claude AI that differentiate it from other large language models:

1. Constitutional AI (Anthropic’s Core Advantage)

Claude is trained using Anthropic’s Constitutional AI framework, an ethical principles-based alignment method that goes beyond traditional reinforcement from human feedback (RLHF). Why it matters for businesses:

- Safer, more aligned outputs: Claude responds in accordance with ethical principles, minimizing harmful or biased outcomes.

- Consistent tone and language across applications: More reliable and consistent outputs are generated, which is particularly significant in legal, medical, and educational contexts.

- Reduced hallucinations and harmful content: By acting as a filter, the constitutional training framework reduces the chances that Claude would produce inaccurate or deceptive information.

2. Large Context Window (Up to 200K Tokens)

Claude Opus supports up to 200K tokens, significantly more than most other models, and thus can process extremely long inputs in a single prompt, including contracts, reports, and multi-file knowledge bases. This enables it to:

- Summarize entire books or reports: Unlike traditional models, Claude can process massive documents in a single pass.

- Hold long, uninterrupted conversations: This is essential for interactive apps and customer service.

- Perform document-to-document comparisons: For tasks such as combining data insights, comparing contract versions, or conducting legal analysis.

3. Multimodal Capabilities (Text + Image Understanding)

Claude Opus models support image input along with text, enabling document and visual data interpretation. This allows users to:

- Analyze visual content in documents: Claude understands and provides insights about graphs, diagrams, and tables embedded in documents.

- Perform screenshot-based Q&A: Product teams can use it to interpret dashboards or UI mockups.

- Generate visual explanations: Can interpret an image and describe its information in normal language, hence increasing accessibility.

Read More: What is Multimodal AI in 2026? Definition, Examples, Benefits & Real-World Applications

4. Advanced Reasoning & Multi-Step Planning

Claude performs strongly in structured reasoning tasks that require logic and sequential thinking. It is particularly effective for:

- Logical deductions: Able to comprehend causal reasoning, if-then logic, and abstract relationships.

- Chain-of-thought prompting: Responds promptly to tasks that require logical thinking, such as deconstructing arguments or resolving math issues.

- Multi-step reasoning tasks: Effective in planning, simulation-based problems, and budgeting projections that involve relating multiple ideas.

5. Enterprise-Grade Privacy & Governance Controls

Claude is designed for secure, API-based deployment with enterprise data protections. It does not keep user conversations by default and is thus appropriate for companies in:

- Healthcare (HIPAA-sensitive): Secure to use with personal health information under compliance laws.

- Legal (confidential case data): Can process case files and contracts while making sure that sensitive data is not stored.

- Finance (PII-sensitive data): Helps analyze financial papers while safeguarding Personally Identifiable Information (PII).

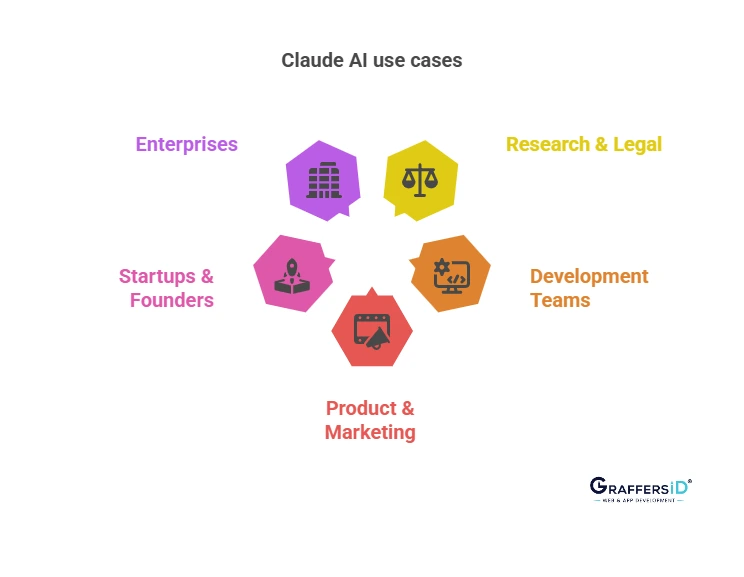

Real-World Use Cases of Claude AI for Tech Teams

Claude AI is widely adopted across enterprises that require document intelligence, structured reasoning, and secure AI automation. Below are the most common real-world use cases in 2026.

1. Legal & Compliance Teams

Claude is highly effective for contract-heavy and regulation-driven industries where accuracy and long-context understanding are critical. It helps teams:

- Summarize 100+ page contracts in minutes

- Extract clauses, obligations, and key dates

- Identify compliance risks and policy gaps

- Compare multiple document versions side-by-side

2. Software Development Teams

Claude supports engineering workflows by improving documentation, code clarity, and review processes. It is used for:

- Explaining legacy or complex codebases

- Generating structured technical documentation

- Reviewing pull requests (PRs)

- Suggesting refactoring and logic improvements

3. Product, Research, & Strategy Teams

Product leaders use Claude to synthesize large volumes of qualitative and market data into actionable insights. Common applications include:

- Market research summarization

- Competitive intelligence analysis

- Customer feedback clustering and trend detection

- Internal knowledge base and wiki automation

4. Enterprise AI Agents & Automation

In 2026, Claude powers enterprise AI agents integrated into internal systems and workflows. It is commonly deployed as:

- Slack-based internal assistants

- Knowledge retrieval bots connected to company databases

- Meeting transcript summarization systems

- Automated reporting and workflow orchestration tools

Claude AI Pricing in 2026: Cost Per Model, Tokens & Enterprise Plans

Understanding Claude AI pricing in 2026 is essential for CTOs and product leaders planning large-scale AI deployment. Pricing varies based on model capability, token usage, and enterprise contracts.

1. Pricing by Model Type (Haiku vs. Sonnet vs Opus)

Claude’s pricing structure is tiered based on model intelligence and compute requirements. Higher-tier models cost more due to increased compute power and reasoning depth.

-

Claude 3 Haiku: Lowest cost, optimized for speed and high-volume usage.

-

Claude 3.5 Sonnet: Mid-tier pricing with balanced reasoning and scalability.

-

Claude 3 Opus/Opus 4.6: Premium tier designed for advanced reasoning and enterprise-grade workloads.

Read More: Claude vs. Gemini: Which LLM is Better for IT Workflows Like Coding and Automation in 2026?

2. Token-Based Pricing (Input + Output Tokens)

Claude API pricing is calculated based on:

-

Input tokens (text you send to the model)

-

Output tokens (text generated by the model)

Longer prompts, large document processing, and high-context workflows increase total token usage. For enterprises using 100K–200K token contexts, cost planning is critical.

3. Usage Volume & API Scaling

Pricing also depends on total API consumption.

- Low-volume startups pay standard per-token pricing.

- High-volume SaaS platforms negotiate better rates.

- AI-native products often require optimized cost-per-request modeling.

Infrastructure architecture plays a major role in cost control.

4. Enterprise Pricing & Custom Contracts

Large enterprises typically enter custom agreements with Anthropic based on:

-

Annual token commitments

-

Dedicated usage tiers

-

Security and compliance requirements

-

Support and SLA agreements

Enterprise contracts often reduce per-token cost while providing predictable billing and governance controls.

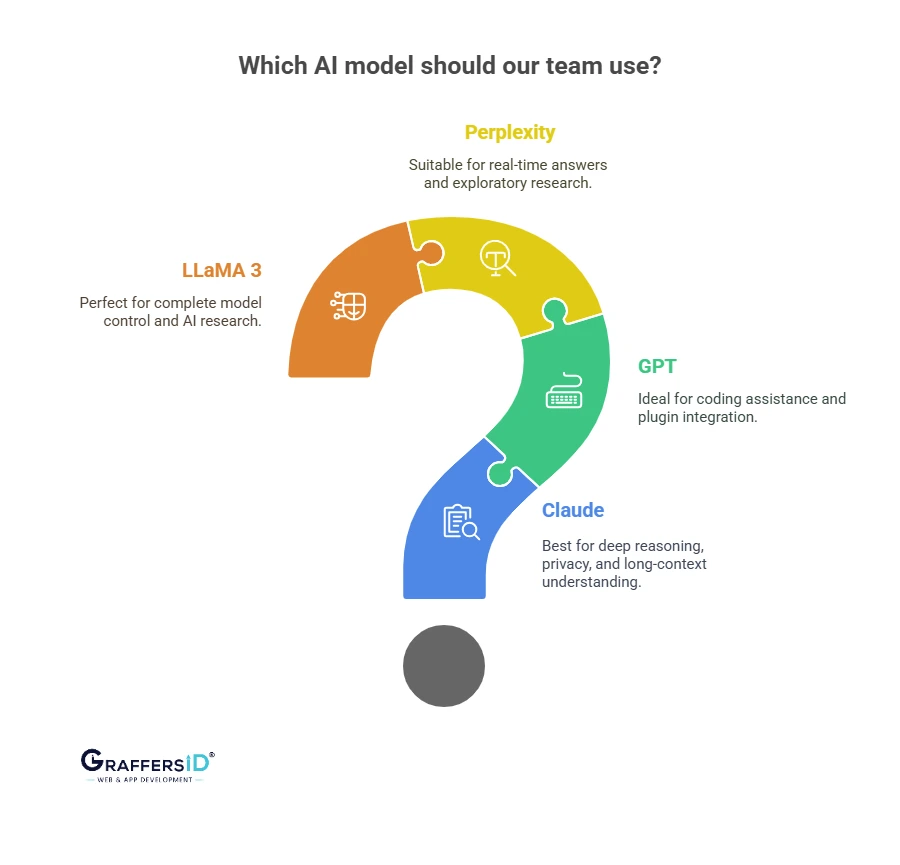

Claude AI 4.5 vs. GPT-5.2 vs. Perplexity vs. LLaMA 4: Comparison

Below is the comparison table of four of the most popular AI models in 2026:

| Feature | Claude AI 4.5 | GPT-5.2 | Perplexity | LLaMA 4 |

|---|---|---|---|---|

| Developer | Anthropic | OpenAI | Perplexity AI | Meta |

| Model Type | Closed LLM | Closed LLM | AI Search + LLM | Open-weight LLM |

| Context Window | Up to 200K | 128K+ | Dynamic (web-based) | 32K–128K+ (varies) |

| Multimodal | Text + Image | Text + Image + Tools | Text + Web | Text (limited multimodal variants) |

| Real-Time Web Access | No (API-based) | Tool-enabled | Native Web Search | No |

| Best For | Enterprise reasoning & compliance | Coding, automation, tool use | Live research & sourced answers | Self-hosted AI & customization |

| Privacy Control | Enterprise API controls | Enterprise tiers available | Query-based | Full self-hosting |

| Open Source | No | No | No | Yes (open weights) |

| Ideal Users | Legal, FinTech, SaaS enterprises | Developers & product teams | Researchers & analysts | AI teams & infra builders |

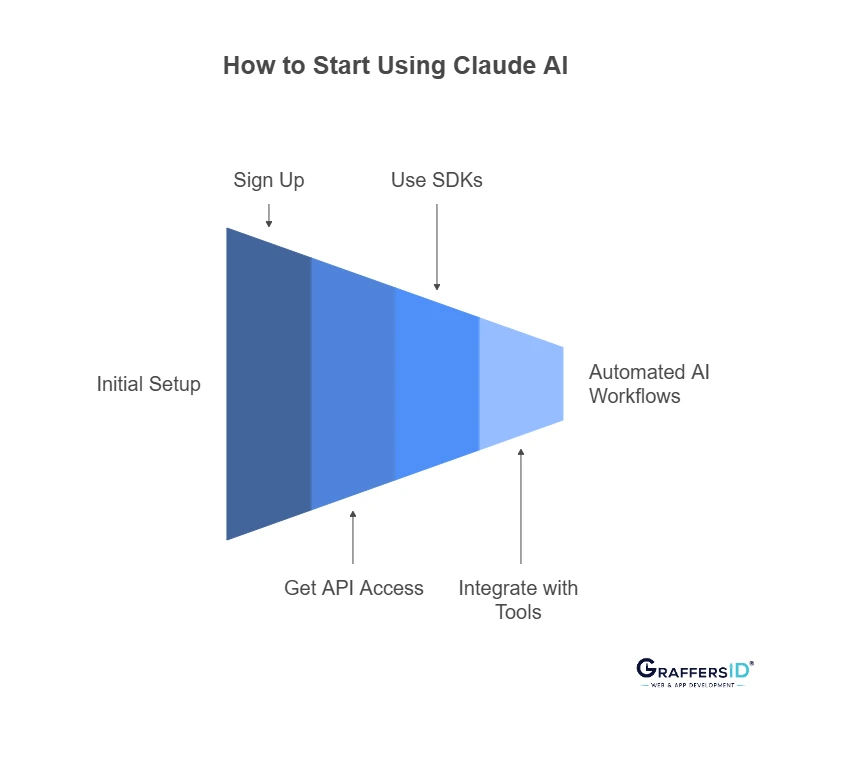

How to Use Claude AI in 2026? Step-by-Step Guide

If you’re evaluating Claude AI for your product, SaaS platform, or enterprise workflow, here is a clear step-by-step process to get started in 2026.

Step 1: Create a Claude Account (Web Access for Testing)

Start by signing up at Claude.ai to access the web-based interface. This allows you to:

- Test prompts and use cases

- Evaluate reasoning quality

- Analyze document handling capabilities

- Compare model versions (Haiku, Sonnet, Opus)

Step 2: Get API Access from Anthropic Console

For product integration, you’ll need API access. Through the Anthropic Developer Console, you can:

- Generate secure API keys

- Choose your preferred Claude model

- Monitor token usage and billing

- Configure rate limits

This is required for embedding Claude into SaaS applications, AI agents, or enterprise tools.

Step 3: Integrate Claude Using Official SDKs

Claude provides SDK support for modern development environments. Supported languages:

- Python

- JavaScript/Node.js

Claude AI Works Well With AI Frameworks:

- LangChain (AI agent orchestration)

- LlamaIndex (document indexing & RAG systems)

- Haystack (enterprise search pipelines)

These frameworks allow you to build retrieval-augmented generation (RAG), AI copilots, and multi-step AI workflows.

Step 4: Build AI Agents & Automate Workflows

Once integrated, Claude can power internal automation and AI agents. Common integrations include:

- Slack: Internal AI assistant

- Notion: Document summarization & tagging

- Zapier: Automated responses & reporting

- N8N: Workflow automation pipelines

This step transforms Claude from a chatbot into an enterprise automation engine.

Step 5: (Optional) Deploy Enterprise-Scale AI Architecture

For production deployment in 2026, organizations typically:

- Implement RAG (Retrieval-Augmented Generation)

- Connect Claude to internal knowledge bases

- Set up monitoring and governance layers

- Optimize token usage for cost efficiency

This ensures scalable, compliant, and high-performance AI operations.

When Should You Use Claude AI? (Best Use Cases in 2026)

Choosing the right large language model depends on your business goals, risk tolerance, and technical requirements. Claude AI is particularly well-suited for the following scenarios:

1. If You Need to Process Long Documents

Claude is ideal if your workflows involve contracts, research papers, policy documents, or large knowledge bases. With a context window of up to 200K tokens, it can analyze, compare, and summarize long-form content in a single prompt, reducing fragmentation and manual review effort.

2. If Compliance and Safety Are Critical

If your organization operates in regulated industries like healthcare, fintech, or legal services, Claude’s Constitutional AI framework offers more controlled and aligned outputs. This makes it suitable for compliance-sensitive environments where consistency and risk mitigation matter.

3. If You Require Structured Reasoning

Claude performs well in multi-step reasoning tasks such as financial modeling support, document comparison, risk analysis, and policy interpretation. It is designed for logic-heavy applications where clarity and structured thinking are more important than creative output.

4. If You Are Building Enterprise AI Agents

Claude is a strong choice for powering internal AI assistants, knowledge retrieval systems, and workflow automation agents. Its long-context understanding and stability make it effective for enterprise-scale AI deployments that require reliability over time.

Conclusion: Is Claude AI the Right Enterprise LLM in 2026?

Claude AI has evolved into one of the most enterprise-ready large language models available in 2026. In a competitive LLM landscape shaped by GPT, Gemini, and open-weight models like LLaMA, Claude differentiates itself through governance-focused design and stability in complex workflows.

For CTOs, CEOs, and product leaders evaluating AI infrastructure, the decision is no longer about raw model performance alone. It is about long-term scalability, regulatory alignment, operational safety, and predictable deployment at scale. Claude offers a strong balance of intelligence, safety, and enterprise integration flexibility.

At GraffersID, we help startups and enterprises design, develop, and scale AI-powered products using Claude, GPT, Gemini, and open-source LLMs.

Our experienced AI development team ensures your AI solutions are scalable, compliant, and future-ready. Contact GraffersID today and transform your product with enterprise-grade AI.