In 2026, Artificial Intelligence (AI) has shifted from being a buzzword to becoming the core engine of digital transformation. From multimodal generative AI driving smarter search experiences to real-time predictive analytics revolutionizing healthcare, finance, and retail, AI frameworks are the hidden infrastructure powering this growth.

At the heart of much of this transformation is TensorFlow, Google’s open-source machine learning framework. While PyTorch, JAX, and other AI frameworks are gaining traction, TensorFlow remains a popular choice for enterprises, particularly for production deployment.

This blog will walk you through:

- What TensorFlow means in 2026 and how it has evolved.

- Latest TensorFlow features and updates every CTO and tech leader should know.

- Real-world enterprise use cases where TensorFlow is making a measurable impact.

- Adoption challenges and how businesses can overcome them.

What Is TensorFlow in 2026?

In 2026, TensorFlow remains one of the most widely used open-source machine learning frameworks for building and scaling artificial intelligence (AI) applications. Originally developed by Google Brain, TensorFlow has evolved into a production-ready ecosystem that supports the entire AI lifecycle, from data preprocessing and model training to deployment, monitoring, and optimization.


Why is TensorFlow Popular in 2026?

TensorFlow is built to handle enterprise-scale AI projects while staying flexible enough for startups and research teams. Here’s what sets it apart today:

- Versatility Across Platforms: TensorFlow runs on cloud and on-premises infrastructure, in mobile and web apps, and on embedded edge devices, making it adaptable across industries.

- Enterprise-Grade Production Readiness: With dedicated tooling for model serving, scaling, and performance monitoring, TensorFlow is designed for real-world deployment, not just experimentation.

- Rich AI Ecosystem: Its ecosystem includes TensorFlow Hub (pre-trained models), TensorFlow Probability (probabilistic modeling), TensorBoard (visualization), and TensorFlow Extended (TFX) for managing end-to-end ML pipelines.

- Cross-Language Compatibility: While Python remains the most popular language, TensorFlow also offers APIs for C++, JavaScript (TensorFlow.js), and Java, easing adoption across diverse technology stacks.

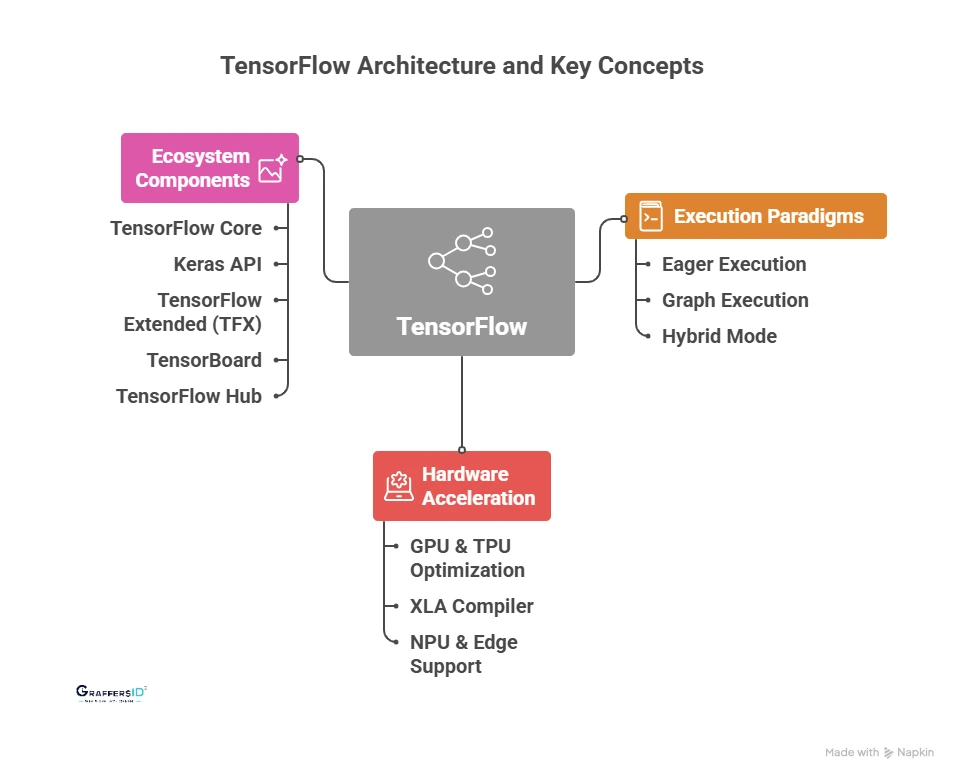

Architecture of TensorFlow & Key Concepts in 2026

Understanding the architecture of TensorFlow is essential for enterprises and developers who want to maximize its power for real-world AI solutions. Let’s break down its core components:

1. Execution Paradigms

TensorFlow offers multiple execution modes, giving developers flexibility depending on project needs:

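- Eager Execution: The default in TensorFlow 2, eager mode runs operations immediately, making development intuitive and debugging faster; it is ideal for research and experimentation.

- Graph Execution (via tf.function and AutoGraph): tf.function traces Python code into optimized computational graphs, and AutoGraph converts Python control flow such as if and while statements into graph operations, which suits scalable, production-ready AI applications.

- Hybrid Mode: Eager and graph execution can be combined in one program. Because tf.function is applied to individual functions, teams can prototype eagerly and compile only performance-critical code into graphs, getting both flexibility and speed.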
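A minimal sketch of the first two modes, assuming TensorFlow 2.x is installed: the same arithmetic runs eagerly, then inside a `tf.function`, where AutoGraph turns the tensor-dependent `if` into a graph conditional. The function name `scaled_sum_of_squares` is a hypothetical example.

```python
import tensorflow as tf

# Eager execution (the TensorFlow 2 default): operations run immediately
# and return concrete values you can inspect.
x = tf.constant([1.0, 2.0, 3.0])
print(tf.executing_eagerly())  # True
eager_result = tf.reduce_sum(x * x)

# Graph execution: tf.function traces this (hypothetical) Python function into a
# graph on its first call; AutoGraph rewrites the tensor-dependent `if` as tf.cond.
@tf.function
def scaled_sum_of_squares(values, scale):
    total = tf.reduce_sum(values * values)
    if total > 10.0:
        total = total * scale
    return total

graph_result = scaled_sum_of_squares(x, tf.constant(0.5))
print(eager_result.numpy(), graph_result.numpy())  # 14.0 7.0
```

Later calls with the same input signature reuse the traced graph, which is where the production speedup comes from.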

2. Hardware Acceleration

TensorFlow is designed for performance at scale, leveraging modern hardware efficiently:

- GPU & TPU Optimization: Distribution strategies in tf.distribute (such as MirroredStrategy for multiple GPUs and TPUStrategy for Cloud TPUs) spread deep learning training across devices to increase throughput and shorten training time.

- XLA Compiler: Applies whole-graph optimizations such as operator fusion, which can reduce memory usage and accelerate execution in production AI pipelines.

- NPU & Edge Support: With AI increasingly running on edge devices and mobile chips, LiteRT hardware delegates let models use accelerators such as Neural Processing Units (NPUs), where available, for real-time inference on lightweight hardware.
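This minimal sketch shows how the hardware features surface in code, assuming a standard TensorFlow 2.x pip install. It lists visible accelerators, creates a `MirroredStrategy`, and compiles one function with XLA via `jit_compile=True`. The function `dense_relu` and its random inputs are hypothetical placeholders.

```python
import tensorflow as tf

# List the accelerators TensorFlow can see (empty lists on a CPU-only machine).
print("GPUs:", tf.config.list_physical_devices("GPU"))
print("TPUs:", tf.config.list_physical_devices("TPU"))

# MirroredStrategy replicates a model across all local GPUs;
# with no GPU present it falls back to a single CPU replica.
strategy = tf.distribute.MirroredStrategy()
print("Replicas:", strategy.num_replicas_in_sync)

# Hypothetical layer function opted into XLA compilation; XLA can fuse
# the matmul, bias add, and ReLU into fewer kernels.
@tf.function(jit_compile=True)
def dense_relu(inputs, weights, bias):
    return tf.nn.relu(tf.matmul(inputs, weights) + bias)

inputs = tf.random.normal([4, 8])
weights = tf.random.normal([8, 16])
bias = tf.zeros([16])
out = dense_relu(inputs, weights, bias)
print(out.shape)  # (4, 16)
```

The same code runs unchanged when GPUs are added; only the replica count and device placement change.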

3. Ecosystem Components

TensorFlow’s ecosystem remains one of the most comprehensive in 2026, making it a go-to choice for enterprises:

- TensorFlow Core: The foundation for building custom machine learning and deep learning models.

- Keras API: A high-level interface that accelerates AI prototyping and model development.

- TensorFlow Extended (TFX): Provides a full production pipeline, from data preprocessing to deployment and monitoring.

- TensorBoard: A widely used tool for model visualization, debugging, and performance tracking.

- TensorFlow Hub: A repository of pre-trained models, enabling businesses to experiment and deploy faster without starting from scratch.
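The following minimal sketch shows how Keras and TensorBoard fit together. It trains a small classifier on synthetic data and writes logs through the `tf.keras.callbacks.TensorBoard` callback. The data, layer sizes, and temporary log directory are illustrative assumptions.

```python
import tempfile

import numpy as np
import tensorflow as tf

# Synthetic binary-classification data (illustrative only).
rng = np.random.default_rng(0)
features = rng.normal(size=(256, 10)).astype("float32")
labels = (features.sum(axis=1) > 0).astype("float32")

# Keras API: define, compile, and train a small model in a few lines.
model = tf.keras.Sequential([
    tf.keras.Input(shape=(10,)),
    tf.keras.layers.Dense(16, activation="relu"),
    tf.keras.layers.Dense(1, activation="sigmoid"),
])
model.compile(optimizer="adam", loss="binary_crossentropy", metrics=["accuracy"])

# TensorBoard: the callback writes logs that `tensorboard --logdir <log_dir>` can display.
log_dir = tempfile.mkdtemp()
history = model.fit(
    features, labels, epochs=3, batch_size=32, verbose=0,
    callbacks=[tf.keras.callbacks.TensorBoard(log_dir=log_dir)],
)
print(history.history["loss"])
```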

Key Features of TensorFlow in 2026

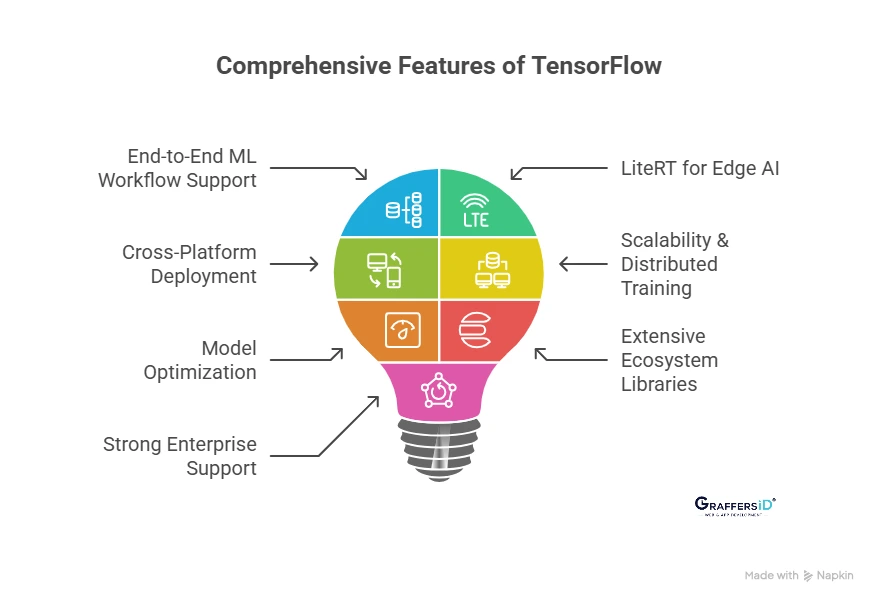

Below are the standout features making TensorFlow a top choice in 2026:

1. End-to-End ML Workflow Support: TensorFlow provides a complete machine learning pipeline, from data preprocessing and model training to deployment and monitoring. This ensures enterprises can build, test, and scale AI solutions without relying on multiple disconnected tools.

2. LiteRT for Edge AI: LiteRT, the successor to TensorFlow Lite, allows developers to deploy high-performance models on mobile, IoT, and edge devices. It’s compact, efficient, and optimized for real-time inference, making AI accessible beyond cloud environments.

3. Cross-Platform Deployment: TensorFlow supports desktop, cloud, mobile, IoT, and web applications (via TensorFlow.js). Organizations can often train a model once and deploy it to several platforms, though each target typically needs its own conversion step (for example, LiteRT for mobile or TensorFlow.js for the browser).

4. Scalability & Distributed Training: With built-in support for GPUs, TPUs, and distributed clusters, TensorFlow can handle large-scale model training across multiple devices. This makes it ideal for enterprises and startups aiming to scale AI workloads efficiently.

5. Model Optimization: TensorFlow offers advanced optimization techniques like quantization, pruning, and mixed-precision training. These features reduce model size, improve performance, and allow faster deployment in production environments, including edge devices.

6. Extensive Ecosystem Libraries: TensorFlow’s ecosystem includes TensorFlow Probability, TensorFlow Recommenders, and TensorFlow Federated, giving developers specialized tools for probabilistic modeling, recommendation engines, and privacy-preserving federated learning.

7. Strong Enterprise Support: Backed by Google, TensorFlow is widely adopted in industry production systems. Enterprises benefit from regular releases, a large contributor community, and mature documentation, which make it a reliable foundation for long-lived AI solutions.
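The following NumPy sketch makes the quantization idea from feature 5 concrete. It applies symmetric per-tensor int8 quantization to a weight matrix. It illustrates only the arithmetic and is not the implementation used by the TensorFlow Model Optimization Toolkit or LiteRT. The helper names `quantize_int8` and `dequantize` are hypothetical.

```python
import numpy as np


def quantize_int8(weights: np.ndarray):
    """Hypothetical helper: symmetric int8 quantization, w ≈ scale * q with q in [-127, 127]."""
    max_abs = float(np.max(np.abs(weights)))
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = np.clip(np.round(weights / scale), -127, 127).astype(np.int8)
    return q, scale


def dequantize(q: np.ndarray, scale: float) -> np.ndarray:
    """Hypothetical helper: map int8 codes back to approximate float32 weights."""
    return q.astype(np.float32) * scale


rng = np.random.default_rng(42)
w = rng.normal(scale=0.1, size=(256, 256)).astype(np.float32)
q, scale = quantize_int8(w)
w_hat = dequantize(q, scale)

print("float32 bytes:", w.nbytes)  # 262144
print("int8 bytes:   ", q.nbytes)  # 65536, a 4x reduction
print("max abs error:", np.max(np.abs(w - w_hat)))  # bounded by about scale / 2
```

Storage shrinks by 4x, and each weight moves by at most half a quantization step. This is why int8 models usually lose little accuracy.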

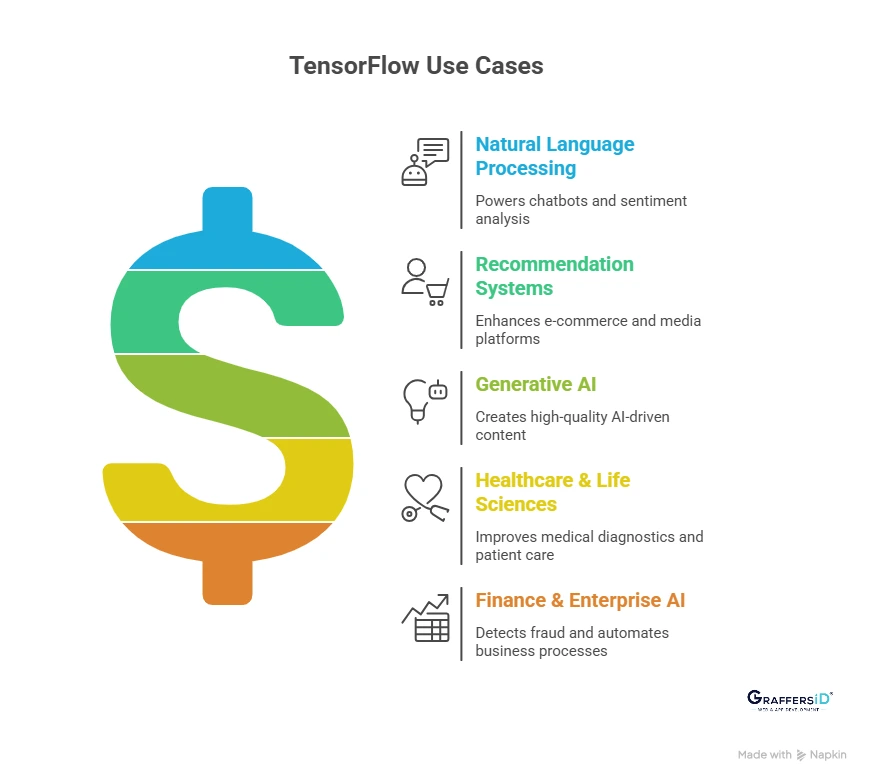

Use Cases of TensorFlow in 2026

TensorFlow in 2026 is powering next-generation AI applications across industries, helping enterprises deploy intelligent solutions at scale. Here’s how businesses are leveraging TensorFlow today:

- Natural Language Processing (NLP): Enterprises leverage TensorFlow for transformer-based NLP models that drive intelligent chatbots, virtual assistants, and multilingual sentiment analysis. These models help businesses deliver personalized, AI-driven communication at scale.

- Recommendation Systems: TensorFlow enables highly accurate recommendation engines for e-commerce and media streaming platforms. By analyzing user behavior and preferences, businesses can provide tailored shopping experiences and content suggestions.

- Generative AI: TensorFlow supports diffusion models and generative AI pipelines for image, video, and content creation. Enterprises use these models in marketing, gaming, and healthcare applications to generate high-quality AI-driven outputs efficiently.

- Healthcare & Life Sciences: From medical imaging analysis to predictive patient monitoring, TensorFlow is a key tool for AI in healthcare. Its models enhance diagnostic accuracy and optimize clinical workflows, improving patient outcomes.

- Finance & Enterprise AI: TensorFlow helps financial institutions detect fraud, model risks, and implement predictive trading strategies. Enterprises also use it to automate business processes and gain actionable insights from large-scale data.
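As a small illustration of the NLP use case, this minimal sketch builds a sentiment classifier from Keras `TextVectorization`, `Embedding`, and pooling layers. The four example sentences, labels, and sizes are invented for illustration. A production system would more likely fine-tune a pre-trained transformer.

```python
import numpy as np
import tensorflow as tf

# Tiny illustrative dataset: 1 = positive, 0 = negative.
texts = tf.constant([
    "great product, works well",
    "terrible support and slow delivery",
    "really happy with the quality",
    "broke after one day, very disappointed",
])
labels = np.array([1, 0, 1, 0], dtype="float32")

# Map raw strings to padded sequences of integer token ids.
vectorizer = tf.keras.layers.TextVectorization(
    max_tokens=1000, output_mode="int", output_sequence_length=8
)
vectorizer.adapt(texts)
token_ids = vectorizer(texts)  # shape (4, 8)

# Embedding + average pooling + sigmoid head: a minimal baseline classifier.
model = tf.keras.Sequential([
    tf.keras.Input(shape=(8,), dtype="int64"),
    tf.keras.layers.Embedding(input_dim=1000, output_dim=16),
    tf.keras.layers.GlobalAveragePooling1D(),
    tf.keras.layers.Dense(1, activation="sigmoid"),
])
model.compile(optimizer="adam", loss="binary_crossentropy")
model.fit(token_ids, labels, epochs=5, verbose=0)

probs = model.predict(vectorizer(tf.constant(["happy with the quality"])), verbose=0)
print(probs.shape)  # (1, 1)
```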


Challenges of TensorFlow Adoption in 2026

While TensorFlow remains a leading AI framework, enterprises often face several challenges when integrating it into their AI projects:

- Steep Learning Curve: TensorFlow’s flexibility and extensive features make it powerful but complex. Enterprises new to machine learning often face a steep learning curve when trying to implement production-ready AI pipelines.

- Migration Issues: As TensorFlow evolves, components such as TensorFlow Lite (TFLite) are being replaced by LiteRT. LiteRT keeps the .tflite model format, but updating packages, imports, and deployment tooling across existing apps still requires careful planning to avoid disruptions.

- Performance Tuning: Optimizing TensorFlow models for GPUs, TPUs, or edge devices demands advanced knowledge. Without proper tuning, models may underperform, increasing training times and operational costs.

- Debugging Complex Models: Hybrid execution modes combining eager and graph operations can make debugging difficult. Identifying performance bottlenecks or unexpected behavior in large-scale models often requires expert intervention.

- Competition from PyTorch & JAX: While TensorFlow excels in enterprise deployment, some teams prefer PyTorch or JAX for research or rapid prototyping. Choosing the right framework can be a strategic challenge for decision-makers.
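One common way to debug the hybrid-execution problems described above is to force `tf.function` code to run eagerly while investigating. This is a minimal sketch assuming TensorFlow 2.x. The function `normalize` is a hypothetical example.

```python
import tensorflow as tf

# Hypothetical graph-compiled function under investigation.
@tf.function
def normalize(batch):
    mean = tf.reduce_mean(batch)
    # In a traced graph, Python print() runs only during tracing;
    # tf.print() runs on every call and shows actual tensor values.
    tf.print("mean:", mean)
    return batch - mean

# Temporarily run every tf.function eagerly so breakpoints, print(), and
# .numpy() see real values; switch it off afterwards, since eager mode
# gives up graph-level performance.
tf.config.run_functions_eagerly(True)
try:
    result = normalize(tf.constant([1.0, 2.0, 3.0]))
    print(result.numpy())  # [-1.  0.  1.]
finally:
    tf.config.run_functions_eagerly(False)
```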

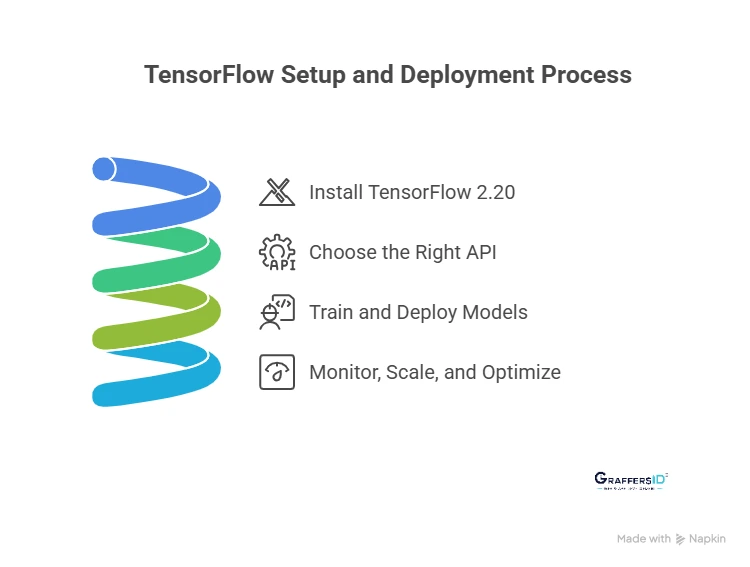

How to Get Started with TensorFlow in 2026

Getting started with TensorFlow 2.20 in 2026 is easier than ever, but to fully leverage its latest features, LiteRT deployment, and optimized pipelines, it’s important to follow a structured approach. Here’s a step-by-step guide to adopting TensorFlow for production-ready AI solutions.

Step 1: Install TensorFlow 2.20

Install TensorFlow 2.20 on a supported Python version (for example, Python 3.12) alongside NumPy 2.x to ensure compatibility and benefit from the newest performance enhancements.
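After installing with pip (for example, `pip install "tensorflow==2.20.*"`), a quick check like the following sketch confirms which versions are actually active and runs a small smoke-test computation. The 2.20 threshold mirrors this guide's assumption and is not a hard requirement.

```python
import sys

import numpy as np
import tensorflow as tf

# Report the versions actually installed in this environment.
print("Python:    ", sys.version.split()[0])
print("NumPy:     ", np.__version__)
print("TensorFlow:", tf.__version__)

# Warn if the installed release is older than the one this guide assumes.
major, minor = (int(part) for part in tf.__version__.split(".")[:2])
if (major, minor) < (2, 20):
    print("Note: this guide assumes TensorFlow 2.20 or later.")

# Accelerator check plus a small smoke-test computation.
print("GPUs visible:", len(tf.config.list_physical_devices("GPU")))
print("Smoke test:", float(tf.reduce_sum(tf.random.normal([1000, 1000]))))
```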

Step 2: Choose the Right API

Start with Keras for rapid prototyping or use TensorFlow Core for building custom, high-performance models tailored to your enterprise needs.
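Keras runs the training loop for you through `model.fit`. With TensorFlow Core, you write the loop yourself, which gives full control. The sketch below fits y = 3x + 2 with `tf.GradientTape` and plain gradient descent. The data, learning rate, and step count are illustrative assumptions.

```python
import tensorflow as tf

# Synthetic data for y = 3x + 2 with a little noise (illustrative only).
tf.random.set_seed(0)
x = tf.random.uniform([200, 1])
y = 3.0 * x + 2.0 + tf.random.normal([200, 1], stddev=0.05)

# TensorFlow Core: parameters, loss, and update rule are written by hand.
w = tf.Variable(0.0)
b = tf.Variable(0.0)
LEARNING_RATE = 0.5  # assumed value, chosen to converge quickly on this data


@tf.function
def train_step(inputs, targets):
    with tf.GradientTape() as tape:
        predictions = w * inputs + b
        loss = tf.reduce_mean(tf.square(predictions - targets))
    dw, db = tape.gradient(loss, [w, b])
    # Plain gradient descent update.
    w.assign_sub(LEARNING_RATE * dw)
    b.assign_sub(LEARNING_RATE * db)
    return loss


for step in range(500):
    loss = train_step(x, y)

print(float(w.numpy()), float(b.numpy()))  # close to 3.0 and 2.0
```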

Step 3: Train and Deploy Models

Train efficiently using GPUs or TPUs, then deploy smoothly with LiteRT for edge devices or TensorFlow Serving for large-scale enterprise applications.
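TensorFlow Serving loads models in the SavedModel format. The following minimal sketch exports a model with a fixed serving signature and reloads it as a sanity check. The `Scorer` module and its weights are hypothetical stand-ins for a trained model.

```python
import os
import tempfile

import tensorflow as tf


class Scorer(tf.Module):
    """Hypothetical stand-in for a trained model: one linear layer with fixed weights."""

    def __init__(self):
        super().__init__()
        self.w = tf.Variable([[0.5], [-1.0], [2.0]])
        self.b = tf.Variable([0.1])

    # A fixed input_signature gives the exported graph a stable serving interface.
    @tf.function(input_signature=[tf.TensorSpec(shape=[None, 3], dtype=tf.float32)])
    def __call__(self, features):
        return tf.matmul(features, self.w) + self.b


model = Scorer()

# TensorFlow Serving expects numbered version subdirectories under the model's
# base path, so the artifact is written to <base>/scorer/1.
export_dir = os.path.join(tempfile.mkdtemp(), "scorer", "1")
tf.saved_model.save(model, export_dir, signatures={"serving_default": model.__call__})

# Reload the SavedModel and call it to sanity-check the exported artifact.
reloaded = tf.saved_model.load(export_dir)
print(reloaded(tf.constant([[1.0, 1.0, 1.0]])).numpy())  # 0.5 - 1.0 + 2.0 + 0.1 = 1.6
```

To serve the model, point TensorFlow Serving's `--model_base_path` at the `scorer` directory, not at the numbered version folder inside it.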

Step 4: Monitor, Scale, and Optimize

Track training metrics with TensorBoard, and use TFX to orchestrate production pipelines, including data validation, model analysis, and serving, so your AI solutions stay reliable and scalable. Revisit models regularly to take advantage of LiteRT improvements, tf.data input-pipeline optimizations, and the multi-backend Keras 3 API.
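The sketch below pairs two of these optimizations: a `tf.data` pipeline that caches, shuffles, batches, and prefetches, and custom scalars logged to TensorBoard with `tf.summary`. The toy data and the logged "positive_label_rate" value are placeholders. In practice you would log metrics such as validation loss.

```python
import tempfile

import tensorflow as tf

# Toy data standing in for a real training set (illustrative only).
features = tf.random.normal([1000, 4])
labels = tf.cast(tf.reduce_sum(features, axis=1) > 0, tf.float32)

# Input pipeline: parallel map, cache the preprocessed records, shuffle,
# batch, and prefetch so data loading overlaps with training.
dataset = (
    tf.data.Dataset.from_tensor_slices((features, labels))
    .map(lambda x, y: (x * 2.0, y), num_parallel_calls=tf.data.AUTOTUNE)
    .cache()
    .shuffle(buffer_size=1000)
    .batch(64)
    .prefetch(tf.data.AUTOTUNE)
)

# Log a per-batch placeholder value; view it with `tensorboard --logdir <log_dir>`.
log_dir = tempfile.mkdtemp()
writer = tf.summary.create_file_writer(log_dir)
num_batches = 0
with writer.as_default():
    for step, (_, y_batch) in enumerate(dataset):
        tf.summary.scalar("positive_label_rate", tf.reduce_mean(y_batch), step=step)
        num_batches += 1
writer.flush()
print("batches per epoch:", num_batches)  # 16 (1000 records / 64, last batch partial)
```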

Conclusion

In 2026, TensorFlow has evolved far beyond a traditional machine learning library; it is now a complete, end-to-end AI platform powering enterprise-grade applications across industries. Its scalability, production-ready ecosystem, and versatile deployment options make it a strong choice for startups and large enterprises alike.

Yet successfully adopting TensorFlow requires specialized expertise, careful migration planning, and performance optimization: skills that many organizations struggle to maintain in-house. Without the right talent, businesses risk delays, inefficiencies, and missed opportunities in AI-driven innovation.

GraffersID provides skilled AI developers who help companies accelerate AI product development, optimize performance, and deploy solutions that are scalable, reliable, and future-ready.

Ready to build your next AI solution? Hire expert AI developers from GraffersID today and turn your AI vision into reality.