As enterprises accelerate toward AI-powered products, real-time automation, connected IoT ecosystems, and data-intensive applications, computing architecture has moved far beyond an IT concern. In 2026, it is a strategic business decision that directly impacts performance, scalability, security, and customer experience.

Today’s CTOs, CEOs, and product leaders face a critical question:

Should your systems run entirely on cloud computing, leverage edge computing, or combine both in a hybrid model?

The answer is no longer theoretical. Demand is growing for:

- Ultra-low latency experiences

- Real-time AI inference at scale

- Stricter data privacy and compliance requirements

- Cost-efficient, scalable infrastructure

Against these demands, choosing the right computing model can determine whether a digital product succeeds or struggles to scale.

This guide breaks down the difference between edge computing and cloud computing. You’ll explore real-world use cases, architectural trade-offs, and a practical decision framework designed to help you build future-ready, AI-driven systems that perform reliably in 2026 and beyond.

What is Cloud Computing?

Cloud computing is a technology model where computing resources such as servers, storage, databases, software, and processing power are delivered over the internet instead of being hosted on local machines or in data centers that the organization owns and operates.

In simple terms, cloud computing allows businesses to access and use IT infrastructure on demand, without owning or maintaining hardware.

Instead of investing in physical servers, organizations use cloud platforms to:

- Run web and mobile applications

- Store and manage large volumes of data

- Scale computing resources instantly based on demand

- Support AI, automation, and data-driven workloads

This approach makes cloud computing a foundation for modern digital products and AI-powered systems in 2026.

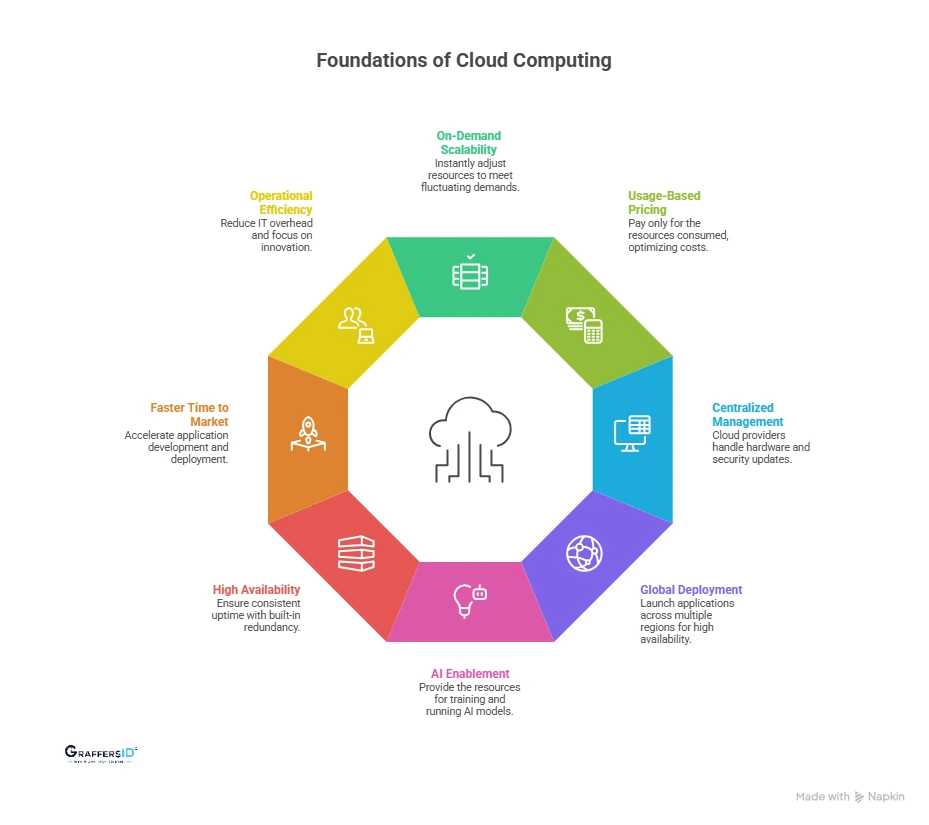

Key Features of Cloud Computing in 2026

- On-demand scalability: Instantly scale compute, storage, and networking resources up or down based on workload requirements.

- Usage-based pricing model: Pay only for the resources consumed, helping organizations optimize infrastructure costs.

- Centralized infrastructure management: Cloud providers manage hardware, updates, security patches, and availability.

- Global deployment capabilities: Launch applications across multiple regions to ensure high availability and performance.

Business Benefits of Cloud Computing in 2026

- AI and machine learning enablement: Provide the large-scale compute and storage required for training AI models and running analytics.

- High availability and reliability: Built-in redundancy and failover mechanisms ensure consistent uptime.

- Faster time to market: Accelerate application development and deployment using cloud-native services.

- Operational efficiency: Reduce IT overhead and focus internal teams on innovation rather than infrastructure management.

Types of Cloud Computing Deployment Models in 2026

Organizations use different cloud deployment models based on security, scalability, and compliance needs:

- Public Cloud: Shared infrastructure managed by providers like AWS, Microsoft Azure, and Google Cloud, ideal for scalable applications.

- Private Cloud: Dedicated cloud infrastructure offering higher control, security, and compliance.

- Hybrid Cloud: A combination of on-premise systems and cloud services, enabling flexible workload management.

- Multi-Cloud: Using multiple cloud providers to improve resilience, performance, and vendor flexibility.

Each model serves different business goals, and many enterprises in 2026 adopt hybrid or multi-cloud strategies for optimal performance and risk management.

What is Edge Computing?

Edge computing is a computing model where data is processed closer to where it is created, instead of being sent to a centralized cloud server.

In practical terms, edge computing runs applications and analytics directly on or near:

- IoT devices and smart sensors

- Industrial machines and controllers

- Mobile phones and wearable devices

- Local servers or on-premise gateways

By processing data at the “edge” of the network, edge computing reduces the time it takes to analyze and act on data. This minimizes latency, lowers bandwidth consumption, and enables faster decision-making, especially for real-time applications.

Instead of sending every data point to the cloud, only relevant or aggregated data is transmitted, making systems more efficient and cost-effective.

Enterprises building real-time, AI-driven, and IoT-enabled systems are increasingly adopting edge computing for these latency and bandwidth gains.
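The idea of transmitting only aggregated data, rather than every data point, can be sketched in a few lines of Python. This is a minimal illustration, not a production design: the function name `summarize_window`, the alert threshold, and the sample temperature readings are all hypothetical.

```python
from statistics import mean


def summarize_window(readings, alert_threshold):
    """Reduce a window of raw sensor readings to one compact summary record."""
    return {
        "count": len(readings),
        "min": min(readings),
        "max": max(readings),
        "mean": round(mean(readings), 2),
        # Flag the window so a cloud service could prioritize it.
        "alert": max(readings) > alert_threshold,
    }


# Hypothetical temperature samples collected on an edge gateway.
window = [21.0, 21.5, 22.0, 35.5, 21.5]
summary = summarize_window(window, alert_threshold=30.0)

# One summary record would be uploaded instead of five raw readings.
print(summary)
```

The window size and the fields kept in the summary are design choices: a larger window saves more bandwidth but delays what the cloud sees.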

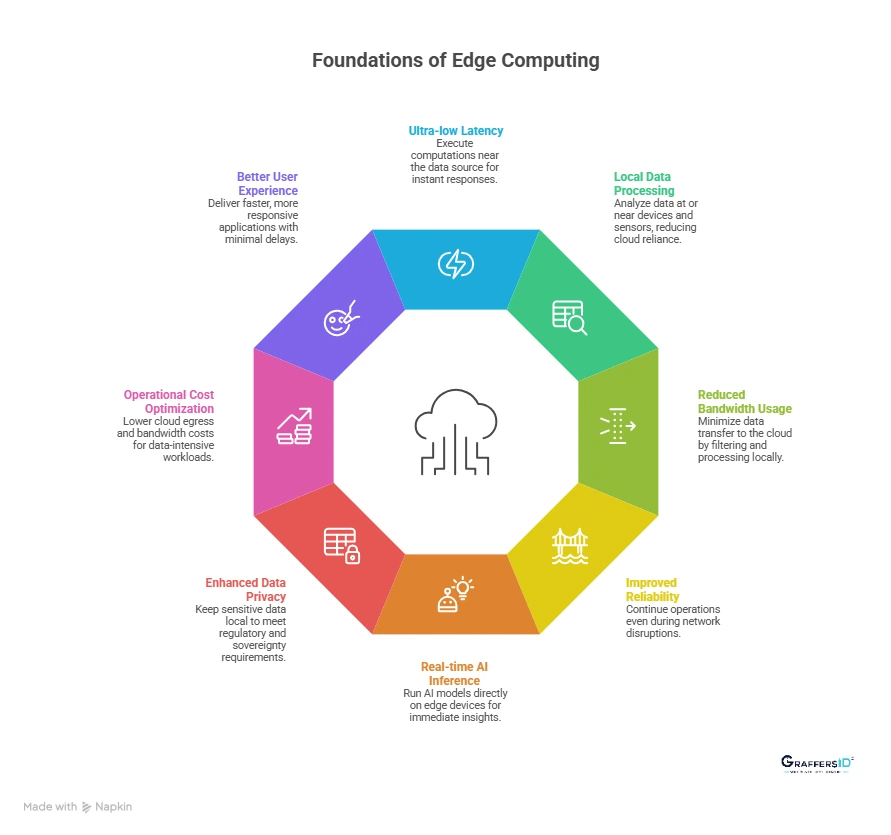

Key Features of Edge Computing in 2026

- Ultra-low latency processing: Execute computations near the data source to deliver instant responses for time-sensitive applications.

- Local data processing: Reduce reliance on centralized servers by analyzing data at or near devices and sensors.

- Reduced bandwidth usage: Minimize data transfer to the cloud by filtering and processing information locally.

- Improved reliability: Continue operations even during network disruptions or limited connectivity.
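One common way to keep operating through network disruptions is a store-and-forward buffer: the edge node keeps recording locally and uploads once the link returns. The sketch below assumes a hypothetical `StoreAndForwardBuffer` class and a caller-supplied `send` function that returns `True` on success; neither comes from a specific product.

```python
from collections import deque


class StoreAndForwardBuffer:
    """Hold readings locally while offline and upload them when possible."""

    def __init__(self, max_items):
        # Bounded queue: when full, the oldest reading is dropped first.
        self._queue = deque(maxlen=max_items)

    def record(self, reading):
        self._queue.append(reading)

    def flush(self, send):
        """Send buffered readings in order; stop at the first failure."""
        sent = 0
        while self._queue:
            if not send(self._queue[0]):
                break
            self._queue.popleft()
            sent += 1
        return sent

    def __len__(self):
        return len(self._queue)


delivered = []


def offline(_reading):
    return False  # simulates a network outage


def online(reading):
    delivered.append(reading)  # simulates a successful upload
    return True


buffer = StoreAndForwardBuffer(max_items=3)
for value in [1, 2, 3, 4]:
    buffer.record(value)  # reading 1 is dropped once the buffer is full

sent_while_offline = buffer.flush(offline)
sent_while_online = buffer.flush(online)
print(sent_while_offline, sent_while_online, delivered)
```

Dropping the oldest reading when the buffer is full is only one possible policy; a system that cannot lose data would need persistent storage instead.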

Business Benefits of Edge Computing in 2026

-

Real-time AI inference: Run AI models directly on edge devices for immediate insights and actions.

-

Enhanced data privacy and compliance: Keep sensitive data local to meet regulatory and sovereignty requirements.

-

Operational cost optimization: Lower cloud egress and bandwidth costs for data-intensive workloads.

-

Better user experience: Deliver faster, more responsive applications with minimal delays.

Edge Computing vs. Cloud Computing: Key Differences You Should Know in 2026

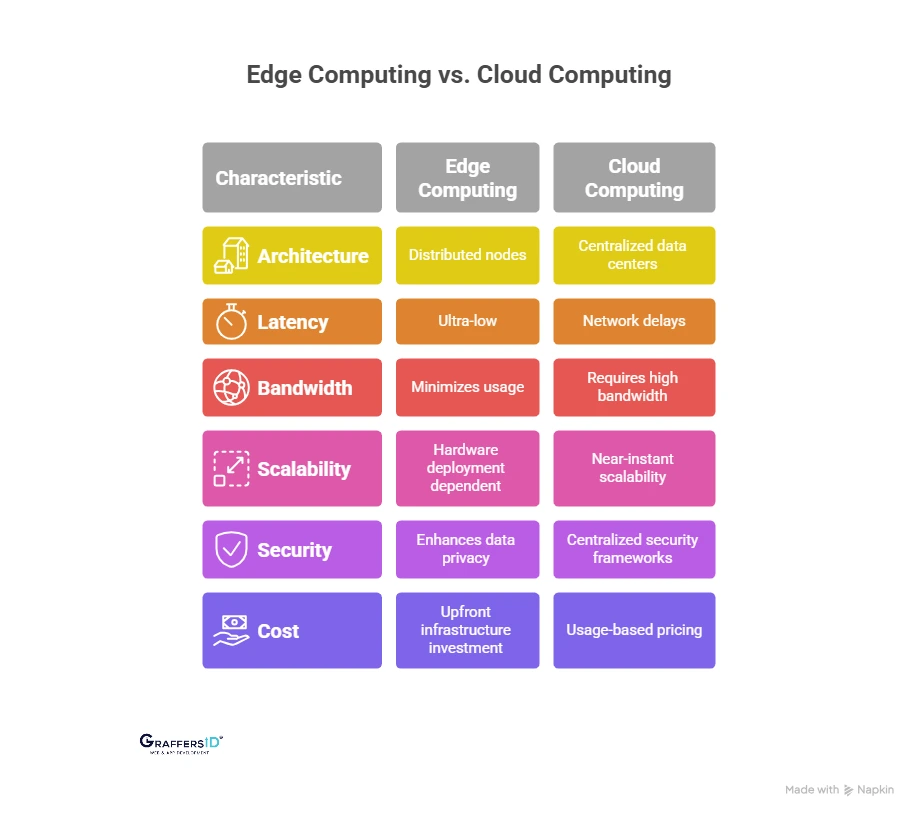

1. Architecture and Data Processing Model

The core difference between cloud computing and edge computing lies in how and where data is processed.

- Cloud computing relies on centralized data centers where data is sent, processed, and stored remotely.

- Edge computing uses distributed nodes, such as local servers, gateways, or devices, to process data closer to its source.

Because edge computing reduces the distance data must travel, it delivers faster responses and improves system reliability, especially in real-time environments.

2. Latency and Real-Time Performance

Latency is one of the most critical factors when comparing edge computing vs. cloud computing.

- Edge computing enables ultra-low latency by processing data locally, often within milliseconds.

- Cloud computing introduces network delays because data must travel to and from centralized servers.

This makes edge computing the preferred choice for real-time use cases such as autonomous vehicles, industrial automation, AR/VR, and live video analytics in 2026.
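A rough calculation shows why distance matters. Light in optical fiber travels at roughly 200,000 km/s (about two thirds of its speed in a vacuum), so propagation alone sets a floor on round-trip time before any routing, queuing, or processing delay is added. The sketch below uses hypothetical function names, distances, and latency budgets purely for illustration.

```python
# Approximate speed of light in optical fiber (about 2/3 of c).
FIBER_SPEED_KM_PER_S = 200_000


def min_round_trip_ms(distance_km):
    """Lower bound on round-trip time from propagation delay alone."""
    return 2 * distance_km * 1000 / FIBER_SPEED_KM_PER_S


def fits_budget(distance_km, processing_ms, budget_ms):
    """Check whether propagation plus processing stays within a latency budget."""
    return min_round_trip_ms(distance_km) + processing_ms <= budget_ms


# Hypothetical scenario: a control loop with a 10 ms budget and 5 ms of processing.
cloud_ok = fits_budget(distance_km=1500, processing_ms=5, budget_ms=10)
edge_ok = fits_budget(distance_km=1, processing_ms=5, budget_ms=10)

print(min_round_trip_ms(1500))  # 15.0
print(cloud_ok, edge_ok)        # False True
```

Real-world latency is higher than this floor, so the estimate is best read as a quick way to rule out architectures that cannot meet a budget even in theory.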

3. Bandwidth Usage and Network Dependency

Another key difference between edge and cloud computing is their dependence on network connectivity.

- Cloud computing depends on a reliable network connection, and data-heavy workloads need substantial bandwidth to move raw data to the provider.

- Edge computing minimizes bandwidth usage by filtering, analyzing, and processing data locally before sending only relevant information to the cloud.

For data-heavy applications, edge computing significantly reduces bandwidth costs and improves performance.
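One simple way to filter locally is a deadband filter, which forwards a reading only when it differs enough from the last forwarded value. The function name `deadband_filter`, the 1.0 threshold, and the sample readings below are hypothetical; the point is only to show how the reduction could be measured.

```python
def deadband_filter(readings, min_change):
    """Keep a reading only if it differs from the last kept value by at least min_change."""
    kept = []
    for value in readings:
        if not kept or abs(value - kept[-1]) >= min_change:
            kept.append(value)
    return kept


# Hypothetical sensor trace: mostly stable, with one excursion.
readings = [20.0, 20.1, 20.0, 20.2, 23.0, 23.1, 22.9, 20.0]
kept = deadband_filter(readings, min_change=1.0)

# Fraction of readings that never leave the edge device.
reduction = 1 - len(kept) / len(readings)

print(kept)       # [20.0, 23.0, 20.0]
print(reduction)  # 0.625
```

The actual savings depend entirely on how noisy the signal is and how much change the application considers relevant.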

4. Scalability and Deployment Flexibility

Scalability plays a major role in infrastructure planning for modern enterprises.

- Cloud computing offers near-instant scalability, allowing businesses to expand resources globally without physical constraints.

- Edge computing scalability depends on hardware deployment, making expansion more controlled and location-specific.

In practice, most enterprises in 2026 use cloud platforms for scale and edge systems for execution at the local level.

5. Security, Data Privacy, and Compliance

Security and compliance requirements strongly influence the choice between cloud and edge computing.

- Cloud computing provides centralized security frameworks, identity management, and compliance certifications.

- Edge computing enhances data privacy by keeping sensitive data closer to its source, reducing exposure during transmission.

This makes edge computing especially valuable for regulated industries such as healthcare, finance, manufacturing, and government services.

6. Cost Structure and Operational Efficiency

The cost models of edge computing and cloud computing differ significantly.

- Cloud computing follows a usage-based pricing model, where costs scale with storage, compute, and data transfer.

- Edge computing involves upfront infrastructure investment, including devices, gateways, and maintenance.

In 2026, cost optimization depends on workload type; real-time, data-intensive workloads often benefit from edge processing, while elastic workloads favor the cloud.
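The trade-off between upfront edge investment and usage-based cloud pricing can be framed as a break-even calculation. The sketch below is a simplification with hypothetical figures: it assumes flat monthly costs and ignores hardware refresh, staffing, and pricing changes.

```python
import math


def break_even_months(edge_upfront, edge_monthly, cloud_monthly):
    """Months until cumulative edge cost no longer exceeds cumulative cloud cost.

    Returns None when the edge option never catches up.
    """
    monthly_saving = cloud_monthly - edge_monthly
    if monthly_saving <= 0:
        return None
    return math.ceil(edge_upfront / monthly_saving)


# Hypothetical data-intensive workload: high cloud transfer costs.
heavy = break_even_months(edge_upfront=12_000, edge_monthly=200, cloud_monthly=1_200)

# Hypothetical light workload: the cloud bill is already lower than edge upkeep.
light = break_even_months(edge_upfront=12_000, edge_monthly=200, cloud_monthly=150)

print(heavy)  # 12
print(light)  # None
```

A short break-even period suggests the workload is a candidate for edge processing; a result of `None` suggests it is better left in the cloud.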

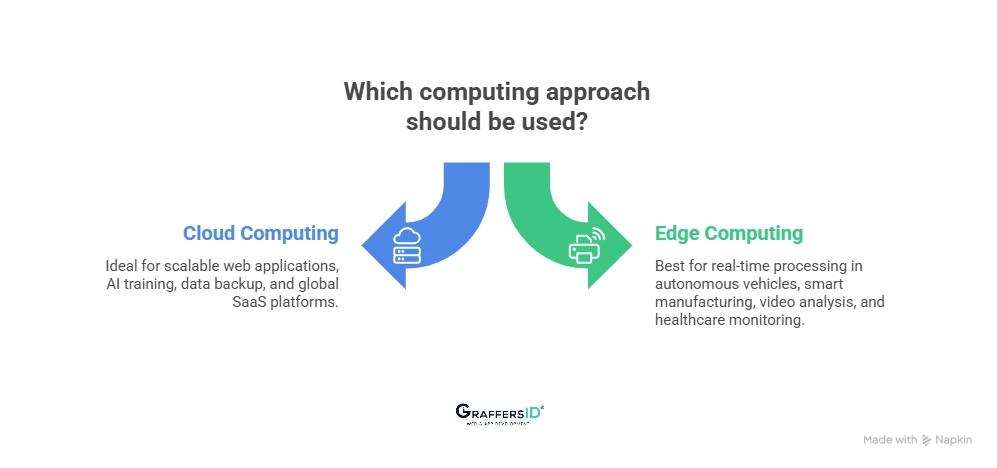

When to Use Cloud Computing: Best Use Cases in 2026

- Enterprise Web and Mobile Applications: Cloud computing is ideal for building scalable web and mobile applications that require high availability, global reach, and continuous performance optimization.

- AI Model Training and Advanced Analytics: Cloud platforms provide the massive compute power and storage needed for training AI models, running data analytics, and supporting large-scale machine learning workflows.

- Data Backup and Disaster Recovery: Cloud computing enables secure data backup, automated recovery, and business continuity without the cost of maintaining physical infrastructure.

- Global SaaS Platforms: Cloud infrastructure supports SaaS products that need elastic scaling, multi-region deployment, and consistent performance for users worldwide.

When to Use Edge Computing: Best Use Cases in 2026

- Autonomous Vehicles and Drones: Edge computing enables real-time decision-making by processing sensor and navigation data locally, without waiting on a round trip to the cloud.

- Smart Manufacturing and Robotics: Factories use edge computing to monitor equipment, detect anomalies, and automate processes instantly on the production floor.

- Real-Time Video and Image Processing: Edge computing supports applications like facial recognition, surveillance, and AR by analyzing video streams at the source.

- Healthcare Monitoring and Diagnostics: Medical devices use edge computing to process patient data instantly while maintaining privacy and regulatory compliance.


Edge and Cloud Together: Hybrid Computing Model in Modern Systems

- Real-Time Processing with Centralized Intelligence: Edge systems handle time-sensitive actions, while the cloud manages long-term analytics, orchestration, and AI model updates.

- AI-Powered Platforms at Scale: Hybrid architectures allow businesses to run AI inference at the edge and leverage the cloud for training, monitoring, and optimization.

- Cost-Optimized and Reliable Infrastructure: Combining edge and cloud reduces bandwidth costs, improves uptime, and ensures consistent performance across environments.
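The division of labor described above can be sketched as a single edge node that acts immediately, queues records for the cloud, and accepts updates pushed back from it. Everything here is hypothetical: the `HybridNode` class is illustrative, and a simple numeric threshold stands in for a real AI model.

```python
from dataclasses import dataclass, field


@dataclass
class HybridNode:
    """Edge node that acts locally and batches records for cloud analytics."""

    anomaly_threshold: float  # stand-in for a locally deployed model
    cloud_batch: list = field(default_factory=list)

    def handle(self, reading):
        # Edge path: decide immediately, with no network round trip.
        action = "shut_down" if reading > self.anomaly_threshold else "continue"
        # Cloud path: keep a record for later upload and long-term analytics.
        self.cloud_batch.append({"reading": reading, "action": action})
        return action

    def drain_batch(self):
        """Hand over queued records for upload and start a fresh batch."""
        batch, self.cloud_batch = self.cloud_batch, []
        return batch

    def apply_model_update(self, new_threshold):
        """Accept an updated model (here, a threshold) pushed from the cloud."""
        self.anomaly_threshold = new_threshold


node = HybridNode(anomaly_threshold=80.0)
first = node.handle(75.0)
second = node.handle(92.0)
uploaded = node.drain_batch()

# The cloud retrains on the uploaded data and tightens the threshold.
node.apply_model_update(70.0)
third = node.handle(75.0)

print(first, second, third, len(uploaded))
```

The same reading of 75.0 produces a different action after the update, which is the essence of the hybrid loop: inference stays at the edge while learning happens centrally.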

Conclusion

There is no universal answer when choosing between edge computing and cloud computing; the right decision depends on how your business uses data, AI, and automation.

- Choose cloud computing when you need global scalability, advanced analytics, AI model training, and centralized workload management.

- Choose edge computing when real-time performance, ultra-low latency, and local data processing are critical to your application.

- Choose a hybrid edge-cloud approach when building AI-driven, data-intensive systems that require both instant execution and centralized intelligence.
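These three rules can be expressed as a small decision helper. It is a deliberately coarse sketch: the function name and its four boolean inputs are hypothetical, and defaulting to the cloud when no requirement applies is an assumption rather than a recommendation from this guide.

```python
def recommend_model(needs_low_latency, needs_local_data,
                    needs_global_scale, needs_model_training):
    """Map coarse requirements to 'edge', 'cloud', or 'hybrid'."""
    edge_fit = needs_low_latency or needs_local_data
    cloud_fit = needs_global_scale or needs_model_training
    if edge_fit and cloud_fit:
        return "hybrid"
    if edge_fit:
        return "edge"
    # Assumption: with no edge-specific requirement, default to the cloud.
    return "cloud"


# Hypothetical workloads.
saas_platform = recommend_model(False, False, True, False)
factory_robot = recommend_model(True, True, False, False)
smart_camera_fleet = recommend_model(True, False, True, True)

print(saas_platform, factory_robot, smart_camera_fleet)  # cloud edge hybrid
```

A real assessment would weigh these factors rather than treat them as yes-or-no switches, but the helper makes the structure of the decision explicit.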

In 2026, the ideal computing model depends on your business objectives, your data privacy and compliance requirements, and your performance and latency expectations.

Making the right decision today has a direct impact on product performance, operational efficiency, customer experience, and long-term scalability. As AI adoption accelerates, architectures that balance edge execution with cloud intelligence will define competitive advantage.

At GraffersID, our remote AI developers and engineering teams help businesses build scalable AI-powered solutions.

Contact GraffersID and build smarter, scalable systems for 2026 and beyond.